Top 11 Companies Driving Energy-Efficient AI Chip Innovation in the USA for 2025

The relentless growth of generative AI is forcing a strategic pivot in the semiconductor industry, making performance-per-watt the new critical benchmark for success. With the AI chip market projected to reach $203.24 billion in 2025, the energy consumption of data centers has become a primary bottleneck, shifting the focus from raw computational power to energy-efficient architectures. The shift is backed by significant government support, such as the $50 billion CHIPS for America Act, and is most evident in the strategic decoupling of AI training and inference workloads. GPUs still dominate the energy-intensive training market, but a more competitive landscape is emerging for inference, where hyperscalers and startups are developing specialized, low-power chips to manage operational costs and environmental impact.

Key Developments in the U.S. AI Chip Market

The U.S. AI chip landscape is defined by the strategic moves of established leaders, hyperscale consumers, and nimble startups, all aiming to capture value by optimizing performance and energy efficiency.

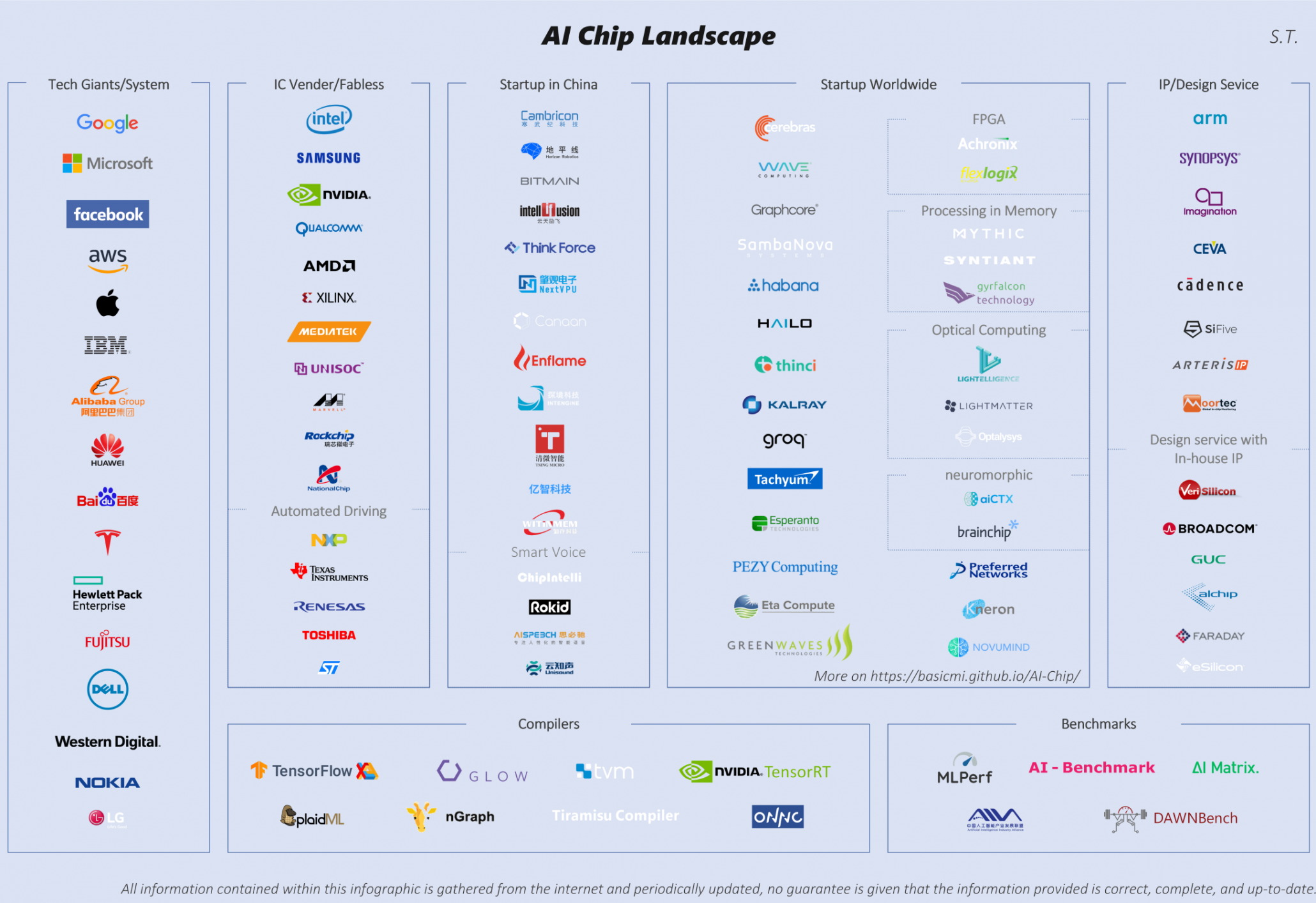

Mapping the Global AI Chip Ecosystem

This infographic visually categorizes the key players in the AI chip market, aligning with the section’s overview of established leaders, hyperscalers, and startups.

(Source: SemiWiki)

1. NVIDIA Corporation: Accelerating the Roadmap

Company: NVIDIA Corporation (NVDA)

Key Development: NVIDIA commands over 80% of the market for high-performance training GPUs, and it is moving to protect that lead: it announced its next-generation Rubin platform in early 2026, signaling an accelerated one-year product cadence aimed at improving both performance and efficiency. The company’s data center revenue surged to $30.8 billion in Q3 of fiscal 2025, a 112% year-over-year increase, underscoring the immense demand for its hardware.

Application: Large-scale AI model training, high-performance computing.

Source: NVIDIA Kicks Off the Next Generation of AI With Rubin
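To put the growth figure quoted for NVIDIA in perspective, the short sketch below works backwards from the reported $30.8 billion and 112% year-over-year increase to the year-ago revenue those two numbers imply. The function name `implied_prior_value` is a hypothetical helper introduced for illustration, and the result is simple arithmetic on the article's figures, not a separately reported number.

```python
def implied_prior_value(current: float, yoy_growth_pct: float) -> float:
    """Return the year-ago value implied by a current value and its YoY growth."""
    return current / (1.0 + yoy_growth_pct / 100.0)


# Figures quoted in the article: $30.8B data center revenue, up 112% year over year.
current_revenue_bn = 30.8
growth_pct = 112.0

prior_revenue_bn = implied_prior_value(current_revenue_bn, growth_pct)
increase_bn = current_revenue_bn - prior_revenue_bn

print(f"Implied year-ago revenue: ${prior_revenue_bn:.1f}B")  # about $14.5B
print(f"Absolute increase: ${increase_bn:.1f}B")              # about $16.3B
```

A 112% increase means revenue slightly more than doubled, so the implied base is roughly $14.5 billion.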

2. Advanced Micro Devices, Inc. (AMD): The Primary Challenger

Company: Advanced Micro Devices, Inc. (AMD)

Key Development: AMD has solidified its role as the main competitor to NVIDIA, offering competitive performance at a lower cost. Its MI300X GPU is reportedly 20-30% cheaper than comparable NVIDIA chips. The company secured a multi-billion dollar partnership with OpenAI and a $1 billion deal with the U.S. Department of Energy to build two AI-powered supercomputers, validating its technology for large-scale, demanding workloads.

Application: AI training and inference, supercomputing.

Source: AMD and OpenAI Strike Multi-Billion-Dollar AI Chip Partnership

3. Intel Corporation: Focusing on AI PCs and Inference

Company: Intel Corporation (INTC)

Key Development: Intel is strategically targeting the AI inference market with its Gaudi line of accelerators and the rapidly growing AI PC market. While facing supply constraints, the company is leveraging its integrated device manufacturing (IDM) capabilities and its expanding foundry business to produce advanced, efficient chips in the U.S.

Application: AI inference, on-device AI for PCs.

Source: Intel Q4 FY2025: AI PC Ramp Meets Supply Constraints

4. Broadcom Inc.: The Custom Silicon Powerhouse

Company: Broadcom Inc. (AVGO)

Key Development: Broadcom has become a crucial player in custom AI silicon through its partnership with Google to co-develop energy-efficient Tensor Processing Units (TPUs). The company’s expertise in both custom chips and high-speed networking silicon makes it essential for building the infrastructure that connects thousands of AI accelerators, with its semiconductor revenue hitting $8.2 billion driven by AI demand.

Application: Custom AI accelerators (ASICs), data center networking.

Source: Broadcom Surges 11% on Alphabet AI Chip Partnership 2025

5. Qualcomm Inc.: Bringing AI to the Edge

Company: Qualcomm Inc. (QCOM)

Key Development: Traditionally a mobile leader, Qualcomm has entered the data center market with its AI200 and AI250 accelerator chips, targeting the less-dominated and more energy-sensitive inference segment. The company is also poised to benefit from the 165% expected jump in AI PC sales in 2025, powered by its efficient Snapdragon chips with on-device AI capabilities.

Application: Data center inference, AI PCs, on-device AI.

Source: Qualcomm announces AI chips to compete with AMD and …

6. Google (Alphabet): Pioneering In-House AI Silicon

Company: Google (Alphabet)

Key Development: As a pioneer in custom ASICs, Google continues to advance its Tensor Processing Unit (TPU) line. These chips are specifically designed and optimized for the company’s machine learning workloads, providing a significant performance-per-watt advantage within the Google Cloud ecosystem and reducing reliance on third-party hardware.

Application: Proprietary AI model training and inference on Google Cloud.

Source: 10 World’s Best AI Chip Companies to Watch in 2025

7. Amazon (AWS): Driving Cloud Efficiency

Company: Amazon (AWS)

Key Development: Amazon develops its own custom silicon, including Trainium for AI training and Inferentia for inference. These chips offer a cost-effective and energy-efficient alternative to GPUs for AWS customers, directly addressing the high operational costs associated with running AI at scale.

Application: AI training and inference within the AWS ecosystem.

Source: An In-Depth Guide to Top 10 AI Chip Companies of 2026

8. Microsoft: Optimizing for a Copilot Future

Company: Microsoft

Key Development: Microsoft has ramped up its custom chip efforts with the launch of its Maia AI accelerator. The second-generation Maia 200, built on TSMC’s 3 nm process, reportedly offers 30% higher performance than competing chips at the same price point. This in-house silicon is designed to power its own Copilot services and Azure infrastructure more efficiently.

Application: Powering proprietary AI services and Azure cloud infrastructure.

Source: Microsoft reveals Maia 200 AI chip, will use it in house

9. Cerebras Systems: Building at Wafer-Scale

Company: Cerebras Systems

Key Development: Known for its massive Wafer-Scale Engine, the world’s largest chip, Cerebras offers a unique architecture designed to train enormous AI models with greater efficiency than clusters of smaller chips. This approach minimizes data movement, a major source of energy consumption.

Application: Training massive, single AI models.

Source: Meet the Startups Taking on Big Tech With Smarter AI Chips

10. Groq: Redefining Inference Speed

Company: Groq

Key Development: This startup has developed a novel Language Processing Unit (LPU) focused exclusively on delivering unparalleled speed and energy efficiency for AI inference. By designing hardware specifically for running models, not training them, Groq targets low-latency applications where GPUs are often inefficient.

Application: Ultra-low-latency AI inference.

Source: Groq is fast, low cost inference.

11. Tenstorrent: Championing Open-Source Architecture

Company: Tenstorrent

Key Development: Led by industry veteran Jim Keller, Tenstorrent is developing high-performance AI chips using the open-source RISC-V architecture. This strategy avoids costly licensing fees and allows for greater design flexibility, enabling the creation of highly customized and potentially more energy-efficient processors.

Application: High-performance AI computing with flexible architecture.

Source: The 10 Hottest Semiconductor Startups Of 2025 (So Far)

Table: Key Developments in the U.S. Energy-Efficient AI Chip Market 2025

| Company | Key Product/Development | Application | Source |
|---|---|---|---|
| NVIDIA Corporation | Rubin platform with one-year cadence | Large-scale AI model training | NVIDIA |
| Advanced Micro Devices, Inc. | MI300X GPU and partnerships with OpenAI/DOE | AI training and supercomputing | ODSC |
| Intel Corporation | Gaudi accelerators and AI PC chips | AI inference and on-device AI | Futurum Group |
| Broadcom Inc. | Custom TPUs for Google | Custom AI accelerators (ASICs) | Plus500 |
| Qualcomm Inc. | AI200/AI250 chips and Snapdragon processors | Data center inference and AI PCs | CNBC |
| Google (Alphabet) | Advancements in Tensor Processing Unit (TPU) line | Proprietary cloud-based AI workloads | Designveloper |
| Amazon (AWS) | Trainium and Inferentia custom chips | AI training and inference in the AWS cloud | Golden Owl |
| Microsoft | Maia 200 AI accelerator on 3 nm process | Powering Copilot and Azure infrastructure | CNBC |
| Cerebras Systems | Wafer-Scale Engine for massive models | Specialized AI model training | VKTR |
| Groq | Language Processing Unit (LPU) | Ultra-low-latency AI inference | Groq |
| Tenstorrent | RISC-V based AI chips | High-performance, flexible AI computing | CRN |

From Cloud to Edge: Adoption Driven by Efficiency

The diversity of AI chip architectures reflects a market that is rapidly maturing beyond a one-size-fits-all approach. Industry adoption is no longer just about securing high-end GPUs for training. It is now about deploying the most efficient silicon for the specific task. Hyperscalers like Microsoft, Amazon, and Google are leading this trend by developing in-house ASICs like Maia 200 and TPUs. This vertical integration is a direct response to the immense operational costs and energy demands of running services like Copilot, demonstrating that performance-per-watt is a primary driver for hardware design at scale. At the same time, companies like Qualcomm and Intel are driving adoption at the edge with energy-sipping chips for AI PCs, a market expected to grow 165% in 2025. This shows that efficiency is a key consideration across the entire compute spectrum.

Big Tech’s Massive AI Chip Hoard

This chart quantifies the massive AI chip investments by hyperscalers like Google and Microsoft, directly supporting the section’s point about in-house development driving efficiency.

(Source: Voronoi)

The U.S. Epicenter and the TSMC Linchpin

The United States remains the undisputed geographical epicenter of the AI chip revolution. It is home to the dominant fabless design houses (NVIDIA, AMD, Qualcomm), the world’s largest consumers of AI chips (the hyperscalers), and a vibrant ecosystem of startups (Groq, Cerebras). This leadership is being actively reinforced by industrial policy, with the $50 billion CHIPS for America Act incentivizing domestic manufacturing and R&D. However, the landscape has a critical dependency: nearly all of these U.S. companies rely on Taiwan’s TSMC for manufacturing their most advanced chips. By early 2026, NVIDIA is expected to become TSMC’s largest customer, highlighting a symbiotic but geopolitically sensitive relationship that makes TSMC the ultimate power broker in the AI hardware value chain.

AI Leaders Project Major Revenue Growth

This chart highlights the projected growth of US leader NVIDIA and foundry TSMC, illustrating the relationship between the ‘U.S. Epicenter’ and the ‘TSMC Linchpin’ discussed in the text.

(Source: Compounding Your Wealth – Substack)

A Maturing Market of Specialized Solutions

These developments signal a clear maturation of AI hardware. The market is moving away from reliance on a single architecture and toward a diverse ecosystem of specialized solutions. GPUs from NVIDIA and AMD remain the gold standard for the complex task of training, representing a fully mature and scaled technology. In parallel, custom ASICs from hyperscalers have moved from experimental to essential, now serving as the backbone for proprietary AI services where energy efficiency directly impacts profitability. Finally, emerging architectures from startups like Groq and Tenstorrent represent the cutting edge of innovation. Groq’s LPU, focused solely on inference, and Tenstorrent’s use of open-source RISC-V are challenging the status quo by targeting specific workloads where general-purpose GPUs are inefficient, proving their commercial viability in niche but growing markets.

NVIDIA Dominates Datacenter GPU Market

This chart’s data on NVIDIA’s 92% market share directly visualizes the section’s statement that its GPUs are the ‘gold standard’ in a maturing training market.

(Source: IoT Analytics)

The Future is Efficient, Diverse, and Modular

Looking forward, the AI chip landscape will be defined by the urgent need for energy efficiency. The dominant theme is the end of the GPU monopoly and the rise of a diverse, specialized hardware ecosystem. We can expect to see a surge in “chiplet”-based designs, where specialized dies for AI, memory, and I/O are combined in a single package to optimize performance and power consumption, a strategy already being pursued by AMD and Intel. Performance-per-watt will replace raw performance as the most critical competitive metric, creating opportunities for novel architectures like photonics that promise radical improvements in energy efficiency. While NVIDIA’s CUDA software platform provides a powerful moat, the strategic shift toward custom and specialized inference chips by its largest customers signals that the future of AI hardware will be far more fragmented, competitive, and relentlessly focused on sustainability.

AI Chip Market Forecasted for Growth

This chart projects massive growth for the AI chip market, providing context for the section’s forward-looking discussion on the urgent need for efficiency and diverse hardware solutions.

(Source: MobileAppDaily)

Frequently Asked Questions

Why is energy efficiency so important for new AI chips?

The massive energy consumption of data centers has become a primary bottleneck for scaling AI. As a result, performance-per-watt has replaced raw computational power as the critical benchmark. For large-scale operators, energy-efficient chips are essential for managing high operational costs, reducing environmental impact, and improving profitability.
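As a rough illustration of why performance-per-watt can matter more than raw throughput, the sketch below compares two accelerators on both measures. Everything in it is an assumption made for illustration: the chips `chip_a` and `chip_b`, their throughput and power figures, and the electricity price are hypothetical and do not describe any product named in this article.

```python
from dataclasses import dataclass

HOURS_PER_YEAR = 24 * 365


@dataclass(frozen=True)
class Accelerator:
    name: str
    throughput_tops: float  # sustained throughput in tera-operations per second
    power_watts: float      # average power draw under load

    def perf_per_watt(self) -> float:
        """Throughput delivered per watt consumed (TOPS/W)."""
        return self.throughput_tops / self.power_watts

    def annual_energy_cost(self, usd_per_kwh: float) -> float:
        """Electricity cost of running at full load for one year."""
        kwh_per_year = self.power_watts / 1000.0 * HOURS_PER_YEAR
        return kwh_per_year * usd_per_kwh


# Hypothetical chips: chip_a is faster in absolute terms, chip_b is more efficient.
chip_a = Accelerator("chip_a", throughput_tops=1000.0, power_watts=700.0)
chip_b = Accelerator("chip_b", throughput_tops=600.0, power_watts=300.0)

ASSUMED_USD_PER_KWH = 0.10  # assumed electricity price, not a quoted figure

for chip in (chip_a, chip_b):
    print(
        f"{chip.name}: {chip.perf_per_watt():.2f} TOPS/W, "
        f"${chip.annual_energy_cost(ASSUMED_USD_PER_KWH):,.2f} per year in electricity"
    )
```

Under these assumed numbers, the slower chip delivers more work per watt and costs less than half as much to power, which is the trade-off large-scale operators weigh when the workload can be spread across many devices.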

Who are the main competitors challenging NVIDIA’s dominance?

The primary challenger is AMD, which offers competitive performance at a lower cost with its MI300X GPU. Additionally, hyperscalers like Google, Amazon, and Microsoft are developing their own custom in-house chips (TPUs, Inferentia, Maia) to optimize for their specific workloads. Finally, startups like Groq and Cerebras are creating highly specialized architectures for niche applications like low-latency inference.

What is the difference between chips for AI training versus AI inference?

AI training, the process of teaching a model, is extremely energy-intensive and still dominated by high-performance GPUs. AI inference, the process of running a trained model, is a less demanding but more frequent task. For inference, the market is more competitive and focused on energy efficiency and low cost, leading to the development of specialized, low-power chips.
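The point that inference is individually cheap but very frequent can be made concrete with a small back-of-the-envelope sketch. It estimates how many days of serving it takes for cumulative inference energy to match a one-off training run. All inputs (training energy, query volume, energy per query) are hypothetical values chosen for illustration, and the function names are introduced here, not taken from any source.

```python
import math


def daily_inference_energy_kwh(queries_per_day: float, wh_per_query: float) -> float:
    """Energy used by one day of inference traffic, in kWh."""
    return queries_per_day * wh_per_query / 1000.0


def days_until_inference_matches_training(
    training_kwh: float, queries_per_day: float, wh_per_query: float
) -> int:
    """Whole days of serving needed for inference energy to reach training energy."""
    daily_kwh = daily_inference_energy_kwh(queries_per_day, wh_per_query)
    return math.ceil(training_kwh / daily_kwh)


# Hypothetical workload, for illustration only.
TRAINING_KWH = 1_000_000.0     # one-off energy for a training run
QUERIES_PER_DAY = 10_000_000.0
WH_PER_QUERY = 0.5             # energy per inference request

baseline_days = days_until_inference_matches_training(
    TRAINING_KWH, QUERIES_PER_DAY, WH_PER_QUERY
)
# Halving per-query energy (a more efficient inference chip) doubles the break-even time.
efficient_days = days_until_inference_matches_training(
    TRAINING_KWH, QUERIES_PER_DAY, WH_PER_QUERY / 2
)

print(f"Break-even at {WH_PER_QUERY} Wh/query: {baseline_days} days")
print(f"Break-even at {WH_PER_QUERY / 2} Wh/query: {efficient_days} days")
```

With these assumed inputs, serving overtakes training in well under a year, which suggests why per-query efficiency is the main lever for inference hardware.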

What is the role of TSMC in the U.S. AI chip industry?

While the U.S. is the epicenter for AI chip design, it has a critical dependency on Taiwan’s TSMC for manufacturing. Nearly all major U.S. fabless companies, including NVIDIA, AMD, and Qualcomm, rely on TSMC to produce their most advanced and powerful chips, making TSMC a pivotal and powerful player in the entire AI hardware value chain.

What are custom ASICs and why are companies like Google and Microsoft developing them?

Custom ASICs (Application-Specific Integrated Circuits), such as Google’s TPU and Microsoft’s Maia 200, are chips designed for a single, specific purpose. These hyperscalers are developing them in-house to create hardware perfectly optimized for their proprietary AI services. This strategy gives them a significant advantage in performance-per-watt, reduces reliance on third-party vendors, and helps control the immense operational costs of running AI at scale.


Erhan Eren
