AI’s Power Problem 2026: How Grid Constraints Threaten to Halt Key Applications

AI Adoption Risk: Why Grid Constraints Now Threaten Commercial AI Projects

Grid availability has shifted from a logistical planning factor to the primary operational risk for the most computationally demanding AI applications, directly threatening project timelines and financial viability.

- Between 2021 and 2024, the industry focused on projected growth in AI power consumption, with forecasts of data center power demand tripling by 2030, driven by generative AI power demand growing an average of 70% per year.

- Since 2025, this theoretical risk has become a practical barrier: one analysis projects that 40% of existing AI data centers will face power-related operational constraints by 2027.

- The most exposed applications are those requiring prolonged, uninterrupted power, such as foundational model training, where a single outage can corrupt a multi-million-dollar computation and waste gigawatt-hours of electricity.

- Other vulnerable categories now facing direct risk include hyperscale generative AI inference, large-scale digital twins, and autonomous systems development, all of which depend on massive, 24/7 compute loads that local grids cannot reliably support.
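To see how one outage can translate into gigawatt-hours of wasted electricity, a back-of-the-envelope sketch helps. The Python below is a minimal illustration under stated assumptions that do not come from the cited analyses: the cluster draws a constant load, progress is saved only at fixed checkpoints, and an outage is equally likely to strike at any moment between two checkpoints. The function names and the 100 MW, 24-hour example are hypothetical.

```python
"""Rough energy cost of redoing work after an outage interrupts a training run.

Illustrative assumptions (not from the article): the cluster draws a constant
load, progress is saved only at fixed checkpoints, and an outage is equally
likely to strike at any moment between two checkpoints.
"""


def _validate(cluster_mw: float, checkpoint_interval_h: float) -> None:
    """Reject negative loads or intervals."""
    if cluster_mw < 0 or checkpoint_interval_h < 0:
        raise ValueError("load and checkpoint interval must be non-negative")


def worst_case_wasted_mwh(cluster_mw: float, checkpoint_interval_h: float) -> float:
    """Energy (MWh) lost if the outage hits just before the next checkpoint."""
    _validate(cluster_mw, checkpoint_interval_h)
    return cluster_mw * checkpoint_interval_h


def expected_wasted_mwh(cluster_mw: float, checkpoint_interval_h: float) -> float:
    """Average energy (MWh) lost: half a checkpoint interval of work is redone."""
    _validate(cluster_mw, checkpoint_interval_h)
    return cluster_mw * checkpoint_interval_h / 2


if __name__ == "__main__":
    # Hypothetical 100 MW training cluster that checkpoints once every 24 hours.
    load_mw, interval_h = 100.0, 24.0
    print(f"worst case: {worst_case_wasted_mwh(load_mw, interval_h) / 1000:.1f} GWh")
    print(f"expected:   {expected_wasted_mwh(load_mw, interval_h) / 1000:.1f} GWh")
```

Under these assumptions the loss scales linearly with both cluster load and checkpoint interval: the hypothetical cluster above loses 2.4 GWh in the worst case and 1.2 GWh on average per outage.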

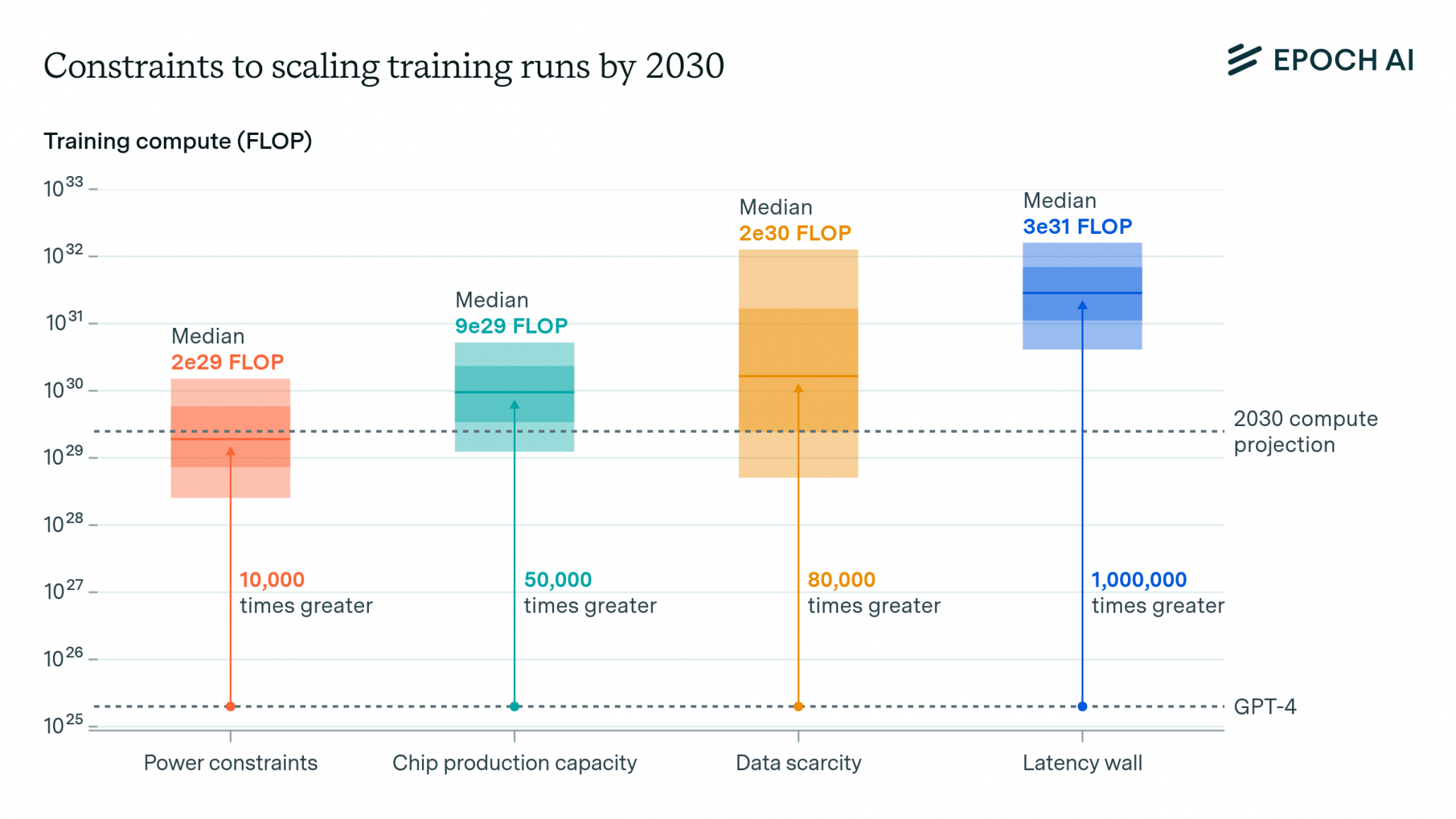

Power Constraints Are Top AI Scaling Bottleneck

This chart directly supports the section’s argument that grid constraints are the primary operational risk by identifying ‘Power constraints’ as the most significant bottleneck for scaling AI.

(Source: Epoch AI)

Investment Analysis: Capital vs. Kilowatts in the AI Infrastructure Race

Unprecedented capital investment in AI compute is colliding with the physical limits of power infrastructure, creating a new bottleneck where project success is dictated by access to electricity, not just funding.

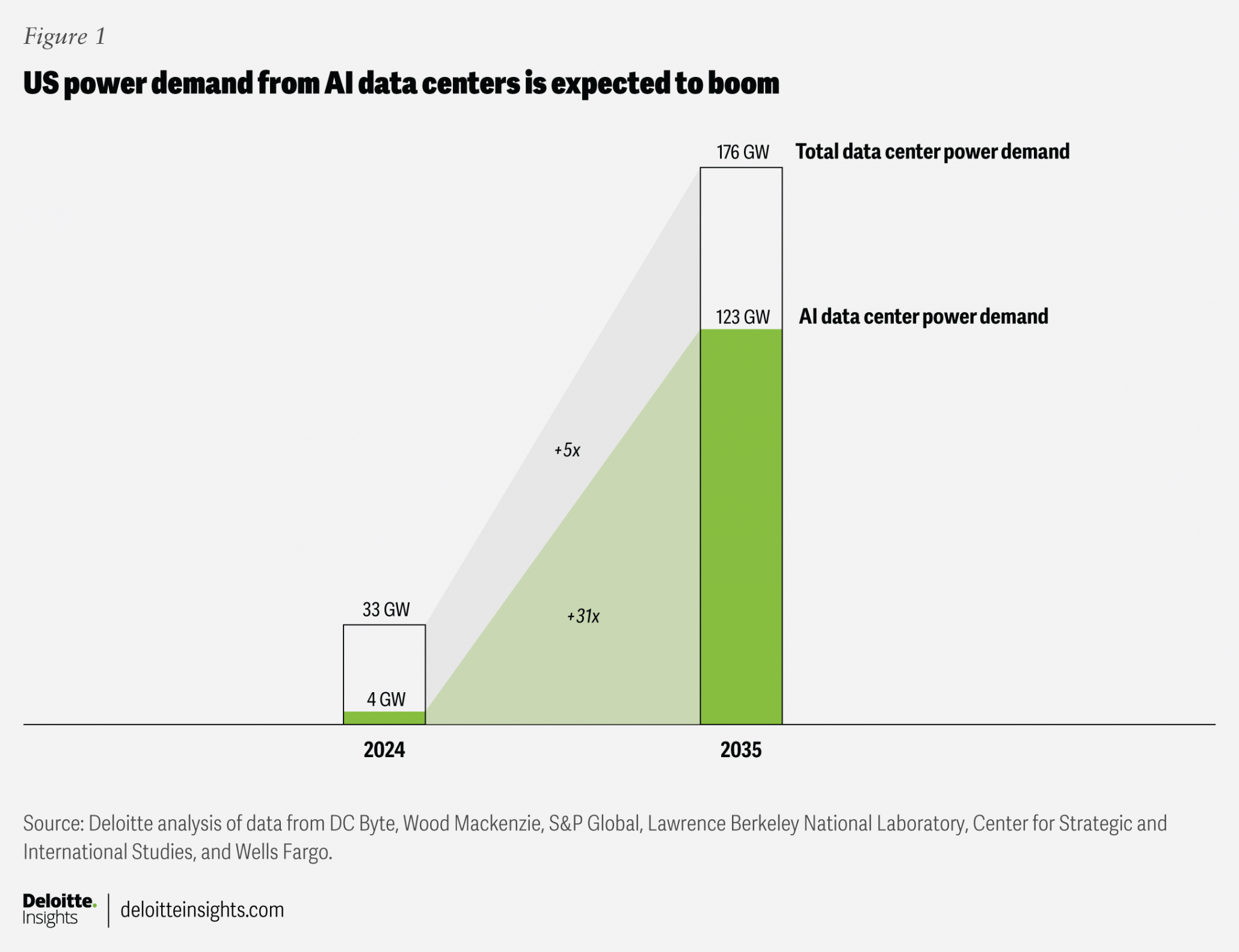

AI Power Demand Projected to Grow 31-Fold

The chart provides a specific data visualization for the Deloitte projection cited in the text, showing the exponential growth in power demand that is colliding with capital investment.

(Source: Deloitte)

- McKinsey & Co. projects that AI-specific capital expenditures will reach $5.2 trillion by 2030, part of a $6.7 trillion total investment in data centers needed to meet demand.

- This investment is chasing a demand surge, with projections from Deloitte indicating more than 30x growth in U.S. AI data center power demand by 2035.

- The sheer scale of demand is evident in individual project requests, which can exceed 100 megawatts (MW), overwhelming local utility capacity and leading to project connection delays of five years or more in key markets.

- This mismatch between capital velocity and grid development creates significant stranded asset risk, where billion-dollar compute clusters cannot be fully utilized due to power unavailability.
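The stranded-asset mechanism can be made concrete with a simple proportional model. This is a minimal sketch that assumes compute output scales linearly with delivered power and that unpowered hardware earns no return; the function names and the $1 billion, 100 MW and 60 MW figures are hypothetical inputs, not data from the sources above.

```python
"""Share of an AI cluster's capital left idle when grid power falls short.

Illustrative assumptions (not from the article): compute output scales
linearly with delivered power, and hardware that cannot be powered earns
no return.
"""


def usable_fraction(required_mw: float, available_mw: float) -> float:
    """Fraction of the cluster that the available power can run, clamped to [0, 1]."""
    if required_mw <= 0:
        raise ValueError("required_mw must be positive")
    return max(0.0, min(1.0, available_mw / required_mw))


def stranded_capital_usd(capex_usd: float, required_mw: float, available_mw: float) -> float:
    """Dollar value of hardware that sits idle for lack of power."""
    return capex_usd * (1.0 - usable_fraction(required_mw, available_mw))


if __name__ == "__main__":
    # Hypothetical $1B cluster that needs 100 MW but can only secure 60 MW.
    idle = stranded_capital_usd(1_000_000_000, required_mw=100, available_mw=60)
    print(f"stranded capital: ${idle / 1e6:,.0f} million")
```

In this hypothetical case a 40% power shortfall leaves $400 million of hardware idle. Real clusters rarely degrade this linearly, so the model is best read as an order-of-magnitude guide.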

Table: AI Infrastructure Power and Cost Projections

| Entity / Project | Time Frame | Details and Strategic Purpose | Source |
|---|---|---|---|
| McKinsey & Co. | By 2030 | Projected AI-specific capital expenditure to reach $5.2 trillion. This signals the massive financial commitment to building out AI compute capacity. | GARP |
| Deloitte | By 2035 | Forecasts over 30x growth in power demand from AI data centers in the U.S. This highlights the exponential demand curve that grids must accommodate. | Wall Street Journal |
| International Energy Agency (IEA) | By 2026 | Global data center electricity consumption projected to exceed 1,000 TWh. This figure, equivalent to Japan’s total consumption, quantifies the global scale of the challenge. | IEA |
| Hanwha Data Centers | By 2030 | Projects 165% growth in power demand for AI data centers from a 2025 baseline. This reflects near-term industry expectations for rapid expansion. | Hanwha Data Centers |
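The projections in this table use different horizons, so converting each growth multiple into an implied compound annual growth rate, CAGR = multiple^(1/years) - 1, makes them easier to compare. This is a minimal sketch with two labeled assumptions: the Deloitte baseline year is taken to be 2024 (the table gives only the 2035 end point), and because Deloitte's figure is "over 30x", the resulting rate is a floor. Hanwha's 165% growth from 2025 to 2030 corresponds to a 2.65x multiple over five years.

```python
"""Implied compound annual growth rate (CAGR) behind a growth multiple.

CAGR = multiple ** (1 / years) - 1
"""


def implied_cagr(growth_multiple: float, years: float) -> float:
    """Constant yearly growth rate that turns 1x into `growth_multiple` over `years`."""
    if growth_multiple <= 0 or years <= 0:
        raise ValueError("growth_multiple and years must be positive")
    return growth_multiple ** (1.0 / years) - 1.0


if __name__ == "__main__":
    # Deloitte: "over 30x" by 2035; the 2024 baseline year is an assumption.
    deloitte = implied_cagr(30, 2035 - 2024)
    # Hanwha: 165% growth from 2025 to 2030, i.e. a 2.65x multiple.
    hanwha = implied_cagr(1 + 1.65, 2030 - 2025)
    print(f"Deloitte (floor): {deloitte:.1%} per year")
    print(f"Hanwha:           {hanwha:.1%} per year")
```

With these inputs the sketch gives roughly 36% per year for the Deloitte projection and about 21.5% per year for Hanwha's, which shows how much more aggressive the U.S.-focused Deloitte forecast is.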

Geographic Analysis: How Grid Scarcity Is Reshaping the AI Data Center Map

The strategy for siting AI data centers is shifting from a focus on tax incentives and fiber connectivity to a primary search for available power, forcing development away from traditional hubs and into regions with energy surpluses.

Grid Capacity for AI Varies by Region

This chart directly relates to the section’s geographic analysis by showing which U.S. power grids have the most capacity for new AI data centers, explaining the shift in site selection.

(Source: Deloitte)

- From 2021 to 2024, data center development concentrated in established markets like Northern Virginia and Silicon Valley, leading to extreme geographic clustering of power demand.

- Since 2025, this concentration has created “grid-scale industrial loads” that have exhausted local capacity, resulting in widely reported connection moratoriums and project freezes in these primary markets.

- The International Energy Agency’s forecast of data center electricity use exceeding 1,000 TWh by 2026 highlights the global nature of this challenge, affecting development in Europe and Asia as well.

- Consequently, developers are now prioritizing locations near large-scale power generation, including nuclear plants and renewable energy zones, fundamentally altering the geographic distribution of AI infrastructure.
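Annual energy figures such as the IEA's 1,000 TWh and per-site power requests such as the 100 MW cited earlier are easier to compare once they are put on the same basis. This is a minimal unit-conversion sketch, assuming an 8,760-hour (non-leap) year and, for the per-site figure, a constant load; the function names are illustrative.

```python
"""Convert between annual energy (TWh, GWh) and continuous power (GW, MW).

Illustrative assumption: a non-leap year of 8,760 hours.
"""

HOURS_PER_YEAR = 8_760  # 365 days * 24 hours


def average_gw_from_annual_twh(twh_per_year: float) -> float:
    """Average continuous power (GW) implied by an annual consumption in TWh."""
    # 1 TWh = 1,000 GWh; dividing GWh by hours gives GW.
    return twh_per_year * 1_000 / HOURS_PER_YEAR


def annual_gwh_from_mw(load_mw: float, load_factor: float = 1.0) -> float:
    """Annual energy (GWh) for a site drawing `load_mw` at the given load factor."""
    if not 0.0 <= load_factor <= 1.0:
        raise ValueError("load_factor must be between 0 and 1")
    # MW * hours = MWh; dividing by 1,000 converts MWh to GWh.
    return load_mw * load_factor * HOURS_PER_YEAR / 1_000


if __name__ == "__main__":
    print(f"1,000 TWh/yr ≈ {average_gw_from_annual_twh(1_000):.0f} GW continuous")
    print(f"100 MW at full load ≈ {annual_gwh_from_mw(100):.0f} GWh/yr")
```

On this basis, 1,000 TWh per year is about 114 GW of round-the-clock demand, and a single 100 MW site running at full load consumes about 876 GWh per year.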

Technology Maturity: AI Progress Now Paced by Power Delivery Innovation

The maturity of the most advanced AI applications is no longer solely dependent on algorithmic or semiconductor advances but is now directly gated by the technology and infrastructure for stable, high-density power delivery.

2021 Hype Cycle Shows Past AI Focus

This chart visualizes the technology-centric maturity focus of the 2021-2024 period mentioned in the text, providing a baseline to contrast with today’s power-gated progress.

(Source: FischerJordan)

- In the 2021-2024 period, technological maturity was measured by model size and processing speed, driven by new GPU architectures like the NVIDIA H100.

- From 2025 onward, the critical technology challenge has expanded to power systems, as AI training clusters now create power load fluctuations of up to 70% within milliseconds, threatening to destabilize local grids.

- This has forced a technological pivot toward on-site power solutions, including battery storage, microgrids, and potentially small modular reactors, as a prerequisite for deploying next-generation compute hardware like the NVIDIA B200.

- As a result, progress in foundational model training and large-scale simulation is now paced by the ability to secure and manage gigawatt-scale power, making energy engineering as crucial as software engineering.
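Battery storage is named above as one on-site response to fast training-load swings. One simple way a battery can shield the grid from such swings is ramp-rate limiting: the grid draw is allowed to change only by a fixed amount per time step, and the battery supplies or absorbs the rest. The sketch below is a simplified illustration of that technique, not a description of any facility's actual controls; the ramp limit, one-second step and 70% load-drop profile are hypothetical.

```python
"""Sketch: a battery buffering fast AI-training load swings from the grid.

Illustrative assumptions (not from the article): load is sampled at fixed
time steps, the grid connection may change by at most `max_ramp_mw` per
step, and a battery supplies or absorbs whatever the grid cannot follow.
"""
from typing import List, Tuple


def ramp_limited_grid_draw(
    load_mw: List[float], max_ramp_mw: float
) -> Tuple[List[float], List[float]]:
    """Return (grid_mw, battery_mw) per step; battery_mw > 0 means discharging."""
    if max_ramp_mw <= 0:
        raise ValueError("max_ramp_mw must be positive")
    if not load_mw:
        return [], []
    grid = [load_mw[0]]
    for target in load_mw[1:]:
        prev = grid[-1]
        # Follow the load, but move by no more than the allowed ramp per step.
        step = max(-max_ramp_mw, min(max_ramp_mw, target - prev))
        grid.append(prev + step)
    battery = [load - g for load, g in zip(load_mw, grid)]
    return grid, battery


def battery_requirements(battery_mw: List[float], step_hours: float) -> Tuple[float, float]:
    """Peak battery power (MW) and state-of-charge swing (MWh) the profile needs."""
    peak_mw = max((abs(b) for b in battery_mw), default=0.0)
    net_discharged = lowest = highest = 0.0
    for b in battery_mw:
        net_discharged += b * step_hours  # MWh discharged so far (negative = charged)
        lowest = min(lowest, net_discharged)
        highest = max(highest, net_discharged)
    return peak_mw, highest - lowest


if __name__ == "__main__":
    # Hypothetical 100 MW cluster dropping 70% to 30 MW for three one-second steps.
    profile = [100, 100, 30, 30, 30, 100, 100, 100]
    grid, battery = ramp_limited_grid_draw(profile, max_ramp_mw=20)
    peak, swing = battery_requirements(battery, step_hours=1 / 3600)
    print("grid draw (MW):", grid)
    print(f"battery peak {peak} MW, energy swing {swing * 1000:.0f} kWh")
```

In this toy profile the grid sees only 20 MW steps while the battery absorbs a peak of 50 MW. Because the grid lags the load on the way back up, the battery ends slightly more charged than it started; a real controller would also manage state of charge.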

SWOT Analysis: Grid Constraints and the Future of Power-Intensive AI

While power-intensive AI applications offer transformative economic and scientific value, their fundamental dependence on massive, stable electricity supply creates a critical vulnerability that now threatens to limit their growth and deployment.

High Energy Use Ranked as Top AI Concern

This chart quantifies the core ‘Weakness’ and ‘Threat’ discussed in the SWOT analysis, confirming that high energy use is the most significant perceived issue with AI infrastructure.

(Source: The Information)

- Strengths lie in the immense computational power driving breakthroughs in science and enterprise, backed by trillions in projected capital investment.

- The core Weakness is the extreme power density and requirement for 24/7 uninterrupted operation, which legacy grid infrastructure was not designed to support.

- Opportunities are emerging for vertically integrated solutions, including co-locating data centers with power generation and developing AI-driven grid management tools.

- The primary Threat is that grid connection delays and instability will create a hard ceiling on computational growth, stranding capital and delaying innovation in critical AI sectors.

Table: SWOT Analysis for Power-Intensive AI Applications

| SWOT Category | 2021–2024 | 2025–Today | What Changed / Validated |
|---|---|---|---|
| Strengths | AI models like GPT-3 demonstrated massive performance gains through scaling. Focus was on algorithmic and compute hardware improvements (e.g., NVIDIA A100/H100). | Massive capital influx validated, with McKinsey & Co. projecting $5.2 trillion in AI CAPEX. Value proposition for HPC and Digital Twins solidified. | The economic and scientific justification for large-scale AI was proven, intensifying the demand for power and exposing the grid as the next bottleneck. |
| Weaknesses | Awareness grew that training large models was energy-intensive (GWh per model). The reliance on centralized GPU clusters was identified as a dependency. | The dependency became a critical vulnerability. Power fluctuations of up to 70% during training were documented, and the need for uninterrupted multi-week runs was established as a high-risk factor. | The weakness shifted from a theoretical energy cost to a direct operational failure point. A single power sag can now corrupt a multi-million-dollar training run. |
| Opportunities | Discussion began around building data centers in cooler climates or near renewable sources to lower PUE and carbon footprint. | Active pursuit of on-site power generation (batteries, microgrids) to bypass grid constraints. AI is being explored as a tool to manage grid flexibility. | The opportunity evolved from passive efficiency gains to active energy independence. Companies are now forced to become energy players to secure compute growth. |
| Threats | The primary threat was seen as long-term supply chain issues for GPUs and the rising operational cost of electricity. Grid upgrades were seen as slow (5-10 year cycles). | The threat is immediate. Grid connection moratoriums and delays of 5+ years are now active policy in major markets. Analysis shows 40% of AI data centers will be power-constrained by 2027. | The threat transformed from a future cost concern into a present-day hard stop on growth. The inability to get a grid connection is now a primary reason for project cancellation or delay. |

2026 Outlook: A Fork in the Road for AI Development and Infrastructure

If grid connection backlogs and capacity shortages are not resolved by 2026, watch for a strategic fragmentation of the AI industry, where developers of power-intensive models are forced into direct energy investments, while a parallel track focused on hardware efficiency and smaller models gains significant traction.

Datacenters Explore Alternative Power Sourcing Models

This diagram illustrates the strategic options, such as on-site generation and PPAs, that the section predicts AI developers will be forced to consider to overcome grid connection delays.

(Source: SemiAnalysis)

- If utilities continue to quote multi-year delays for new connections, expect to see major AI developers like Google, Microsoft, and OpenAI accelerate investments in dedicated, on-site power generation.

- Watch for a sharp increase in the market valuation of companies specializing in energy-efficient AI hardware and model optimization techniques, as these become critical enablers for growth in a power-constrained environment.

- A key signal will be the location of new data center announcements. A shift away from traditional hubs toward regions with untapped power reserves will confirm that grid access has become the single most important factor in AI expansion.

- The primary consequence could be a slowdown in the race for ever-larger foundational models, as the cost and complexity of powering them become prohibitive, forcing a strategic pivot toward more sustainable and computationally less demanding AI architectures.

Frequently Asked Questions

Why has grid power suddenly become such a critical problem for AI?

The problem has escalated from a theoretical forecast to a practical barrier. From 2021 to 2024 the industry largely projected massive power growth; since 2025, that growth has become a reality. One analysis projects that 40% of AI data centers will face operational constraints by 2027, and new project requests exceeding 100 MW face connection delays of five years or more in key markets.

Which AI applications are most at risk from these power shortages?

The most exposed applications are those that require prolonged, uninterrupted power. This includes foundational model training, where a single outage can corrupt a multi-million-dollar computation, as well as hyperscale generative AI inference, large-scale digital twins, and autonomous systems development, all of which depend on massive 24/7 compute loads that local grids cannot reliably support.

What does ‘stranded asset risk’ mean in the context of AI?

Stranded asset risk refers to the danger that billions of dollars invested in AI compute hardware cannot be fully utilized due to a lack of available electricity. With companies planning massive, billion-dollar compute clusters, the inability to secure a power connection from the grid means this expensive equipment could sit idle, becoming a ‘stranded asset’ that fails to generate a return.

How is the search for power changing where new AI data centers are being built?

The primary factor for siting new data centers has shifted from tax incentives or fiber connectivity to the availability of power. This is forcing developers away from traditional, now-congested hubs like Northern Virginia and Silicon Valley. Instead, they are prioritizing new locations with energy surpluses, such as regions near large-scale nuclear plants and renewable energy zones.

What is the 2026 outlook for AI if these grid constraints continue?

If grid connection delays and capacity shortages are not resolved by 2026, the AI industry may split into two tracks. Major developers of power-intensive models may be forced to invest directly in their own on-site power generation. Simultaneously, a parallel track focused on energy-efficient hardware and smaller, less demanding AI models could gain significant traction as a more sustainable path for growth.

Erhan Eren
