AI Power Consumption: Why Data Center Expansion Will Strain Global Grids in 2026

AI Workload Power Demand Reshapes Data Center Construction and Grid Risk

The industry has shifted from managing incremental power increases for AI workloads between 2021 and 2024 to confronting a step-change in facility-level power requirements from 2025 onward, creating significant grid integration risk and redefining data center design. This transition is not one of degree but of kind, moving the primary constraint on AI growth from silicon performance to physical power availability.

- In the period from 2021 to 2024, AI infrastructure growth was characterized by rising chip-level power consumption, exemplified by the move from the NVIDIA A100 GPU with a 400-watt Thermal Design Power (TDP) to more powerful successors. While significant, this increase was largely managed within existing data center power and cooling envelopes.

- Since January 1, 2025, the paradigm has shifted to extreme power density at the rack level. While traditional server racks consume 5-15 kW, new AI-optimized racks now demand between 30 kW and over 110 kW, an order-of-magnitude increase that makes traditional air cooling obsolete and strains facility power distribution.

- This densification directly translates to an explosion in facility-level demand. Before 2024, a large data center might require 10-20 MW. Today, new AI-ready sites are being designed for 100-300 MW, with hyperscale campuses planned for 1 gigawatt, equivalent to the power consumption of a small city.

- Consequently, grid availability has replaced real estate as the primary bottleneck for new data center deployment. The ability to secure sufficient power from local utilities is now the critical path item, a risk that was secondary in the previous era of less-intensive computing workloads.
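Rack density feeds into facility demand through a simple product: number of racks, times load per rack, times a power usage effectiveness (PUE) factor that covers cooling and distribution overhead. The sketch below is only an illustration. The 1,000-rack hall and the PUE of 1.3 are hypothetical assumptions, not figures from the sources cited in this article.

```python
# Hypothetical sketch: facility power implied by rack count and rack density.
# ASSUMPTIONS: the 1,000-rack hall and PUE of 1.3 are illustrative, not article data.


def facility_power_mw(num_racks: int, kw_per_rack: float, pue: float) -> float:
    """Total facility draw in MW: IT load (racks x kW per rack) scaled by PUE."""
    it_load_kw = num_racks * kw_per_rack
    return it_load_kw * pue / 1000.0  # kW -> MW


if __name__ == "__main__":
    for label, kw in [("traditional (10 kW/rack)", 10.0), ("AI-optimized (110 kW/rack)", 110.0)]:
        print(f"{label}: {facility_power_mw(1000, kw, 1.3):.1f} MW")
```

Under these assumptions the same floor plan moves from roughly 13 MW to roughly 143 MW. Those values fall inside the 10-20 MW and 100-300 MW ranges described above.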

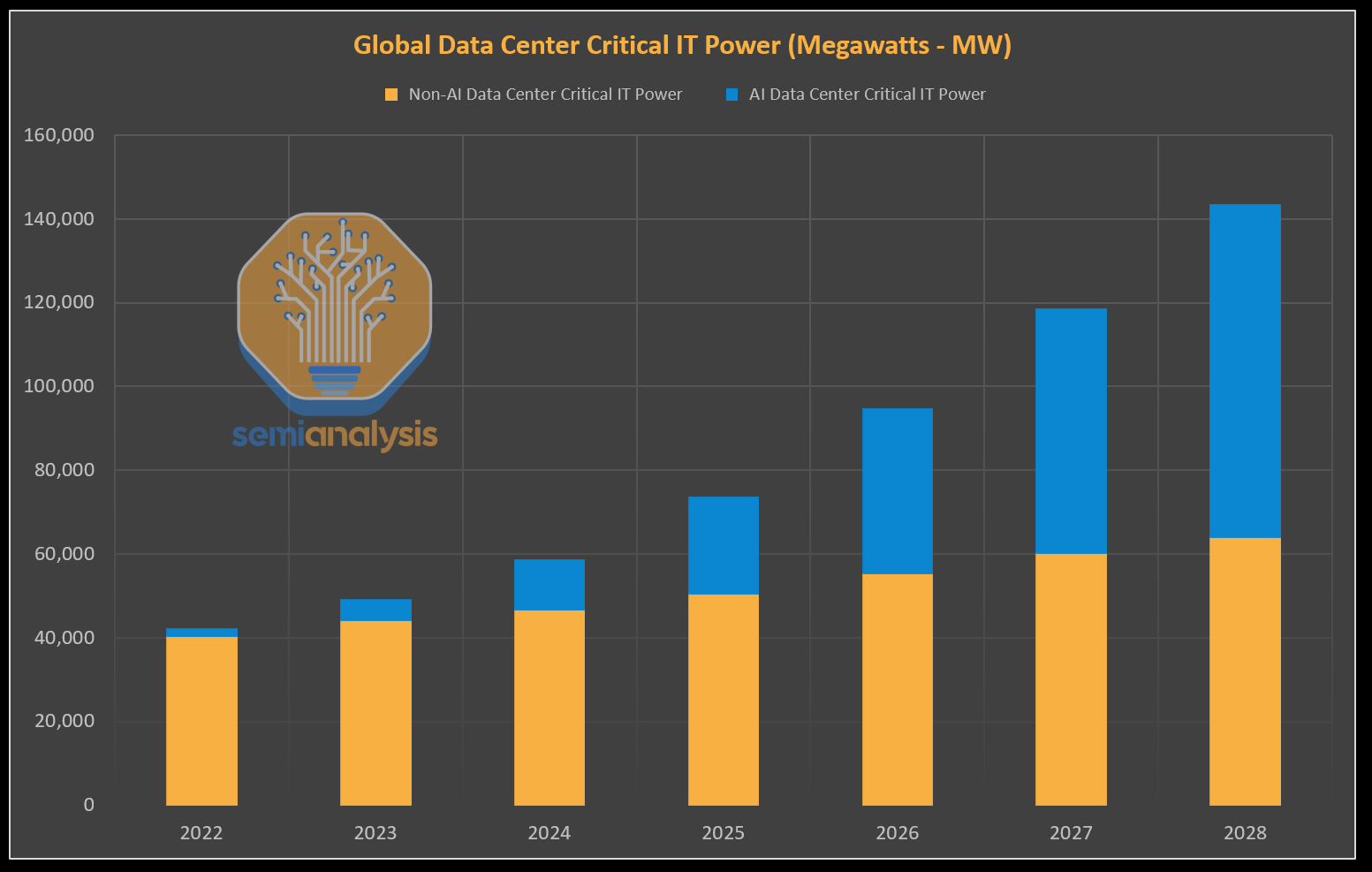

AI Power Demand Accelerates Post-2025

This chart illustrates the step-change in power requirements discussed in this section, showing a significant acceleration in global data center power demand driven by AI starting in 2025.

(Source: SemiAnalysis)

Unprecedented Capital Inflow Signals Massive AI Infrastructure Buildout

Financial forecasts and committed capital expenditures since the beginning of 2025 confirm that the projected growth in AI power consumption is driving a multi-trillion-dollar infrastructure buildout, validating the scale of future energy demand. This investment cycle is focused not just on chips but on the fundamental power and cooling systems required to operate them at scale.

- McKinsey estimates that nearly $7 trillion in global investment will be required for data center infrastructure by 2030 to accommodate the demands of AI workloads, underscoring the enormous capital needed for both new construction and retrofitting existing facilities.

- In 2025 alone, Big Tech companies are projected to invest over $400 billion in AI infrastructure, a figure that encompasses servers, networking, and the massive power and cooling systems they require. This level of spending signals confidence in continued AI expansion.

- These investments are directly supported by demand forecasts from financial institutions. Goldman Sachs Research projects that global power demand from data centers will rise by 165% by the end of the decade, a growth trajectory that necessitates immediate and substantial infrastructure investment.

- The International Energy Agency (IEA) further reinforces this trend, forecasting that data center electricity demand will more than double to approximately 945 terawatt-hours (TWh) by 2030, driven almost entirely by the proliferation of AI.
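A "165% increase" means the end value is 2.65 times the start, not 1.65 times. That growth can be restated as a constant compound annual rate. The sketch below assumes a 2023 baseline, giving a seven-year horizon to 2030. This article does not state Goldman Sachs' baseline year, so treat the horizon as a hypothetical input.

```python
# Sketch: convert a cumulative percentage increase into an implied compound annual growth rate.
# ASSUMPTION: a 2023 baseline (7 years to 2030); the article does not state the baseline year.


def growth_multiplier(percent_increase: float) -> float:
    """A 165% increase means the end value is 2.65x the starting value."""
    return 1.0 + percent_increase / 100.0


def implied_cagr(multiplier: float, years: int) -> float:
    """Constant annual rate r such that (1 + r) ** years == multiplier."""
    return multiplier ** (1.0 / years) - 1.0


if __name__ == "__main__":
    m = growth_multiplier(165.0)
    print(f"multiplier: {m:.2f}x, implied CAGR over 7 years: {implied_cagr(m, 7):.1%}")
```

With the assumed seven-year horizon, the Goldman Sachs figure implies roughly 15% growth per year. A shorter or longer horizon would change the rate, but not the 2.65x multiplier.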

Table: Forecasts for AI-Driven Infrastructure and Power Demand

| Institution / Forecast | Time Frame | Details and Strategic Purpose | Source |
|---|---|---|---|
| McKinsey | By 2030 | Estimates a global investment need of nearly $7 trillion for data center infrastructure. This capital is required to build the physical capacity to house and power next-generation AI hardware. | Beyond the Bubble: Why AI Infrastructure Will Compound … |
| Big Tech Companies | 2025 | Projected to spend over $400 billion on AI infrastructure in a single year. This aggressive spending is aimed at securing a competitive advantage in AI model training and inference capabilities. | 10 Artificial Intelligence (AI) Infrastructure Stocks to Buy for … |
| Goldman Sachs Research | By 2030 | Forecasts a 165% increase in data center power demand. This projection supports the thesis that AI workloads are fundamentally altering the energy consumption profile of the digital economy. | AI to drive 165% increase in data center power demand by … |
| International Energy Agency (IEA) | By 2030 | Projects data center electricity demand will reach approximately 945 TWh. This highlights the growing global impact of AI on energy systems and the need for coordinated planning between the tech and energy sectors. | AI is set to drive surging electricity demand from data … |

U.S. Leads AI Power Demand Surge, Highlighting Regional Grid Concentration

While AI development is a global phenomenon, the United States has emerged as the clear epicenter of AI-driven electricity demand growth, creating acute, concentrated strain on specific regional power grids. This geographic concentration of power-hungry data centers transforms a national trend into a series of localized infrastructure crises.

U.S. Data Center Power Use to Double

Supporting the section’s focus on the U.S. as the epicenter of demand, this chart projects that electricity consumption by U.S. data centers will more than double by 2030.

(Source: Pew Research Center)

- Before 2024, the global footprint of data centers was more geographically dispersed, with power requirements growing at a manageable pace across different regions.

- Since 2025, the U.S. has become the focal point of hyperscale AI buildouts. A forecast from Bloom Energy indicates that U.S. data centers could consume between 8% and 12% of the nation’s total electricity by 2030, a dramatic increase from just 3-4% today.

- IEA data further illustrates this concentration, projecting U.S. data center electricity consumption to rise from 108 TWh in 2020 to 426 TWh by 2030. This roughly fourfold increase is overwhelmingly driven by the power requirements of AI.

- This trend creates severe geographic hotspots in areas with established fiber optic and data infrastructure, such as Northern Virginia, Silicon Valley, and other emerging hubs. In these locations, the rapid construction of 100+ MW data centers is outstripping the ability of local utilities to permit and build new generation and transmission capacity.
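The IEA's U.S. endpoints (108 TWh in 2020, 426 TWh in 2030) can be restated as an annual growth rate and a doubling time. The sketch below assumes smooth compound growth between the two endpoints. This is a simplification, and actual year-by-year demand will not follow it exactly.

```python
import math

# Sketch using the IEA U.S. figures quoted above: 108 TWh (2020) -> 426 TWh (2030).
# ASSUMPTION: smooth compound growth between the two endpoints.


def annual_growth_rate(start: float, end: float, years: int) -> float:
    """Constant annual rate that turns `start` into `end` over `years` years."""
    return (end / start) ** (1.0 / years) - 1.0


def doubling_time_years(rate: float) -> float:
    """Years needed to double at a constant annual growth rate."""
    return math.log(2.0) / math.log(1.0 + rate)


if __name__ == "__main__":
    r = annual_growth_rate(108.0, 426.0, 10)
    print(f"{426 / 108:.2f}x overall, {r:.1%} per year, "
          f"doubling every {doubling_time_years(r):.1f} years")
```

Under this assumption, U.S. data center consumption grows about 14.7% per year and doubles roughly every five years. Utilities would have to plan new generation and transmission on the same cadence.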

AI Infrastructure Technology Moves From Chip-Level to System-Level Constraints

The central technological challenge of powering AI has matured from optimizing individual chip performance, the focus of the 2021–2024 period, to solving systemic power delivery and cooling constraints at the rack and facility level from 2025 onward. The bottleneck for AI advancement is no longer just processing power but the physical infrastructure that supports it.

AI Rack Power Density Growth Visualized

This chart visualizes the section’s theme of a shift to system-level constraints by forecasting a dramatic increase in the power density required for a single AI server rack.

(Source: AI Supremacy)

- Between 2021 and 2024, the primary technological narrative was chip innovation, exemplified by the generational leap from the 400 W TDP of the NVIDIA A100 to the 700 W TDP of the H100. This was a race for computational density on the silicon itself.

- From 2025 to today, the problem has scaled up to the system level. The industry is now grappling with how to power and cool server racks that consume between 50 kW and 150 kW, a load that renders traditional data center air-cooling designs ineffective and hazardous.

- This has forced the rapid commercialization and adoption of advanced solutions like direct-to-chip liquid cooling, which were considered niche or experimental before 2024 but are now becoming a baseline requirement for new high-density AI deployments.

- The maturity of AI hardware is no longer defined by the performance of a single processor but by the ecosystem’s ability to deliver and dissipate power at a massive scale. This validates that the primary engineering challenge has migrated from the chip to the surrounding facility infrastructure.
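The standard heat-balance relation Q = ṁ · c_p · ΔT shows why liquid cooling takes over at these densities. It gives the coolant mass flow needed to carry away a rack's heat. The sketch below uses textbook approximations for the heat capacity and density of water and air. The 100 kW rack load and the temperature rises (10 K for water, 15 K for air) are hypothetical assumptions, not design values from any vendor.

```python
# Sketch: coolant flow needed to remove a rack's heat, from Q = m_dot * c_p * delta_T.
# ASSUMPTIONS: approximate fluid properties; 100 kW load and the temperature rises are illustrative.

WATER_CP_KJ_PER_KG_K = 4.186
WATER_DENSITY_KG_PER_L = 1.0
AIR_CP_KJ_PER_KG_K = 1.005
AIR_DENSITY_KG_PER_M3 = 1.2


def mass_flow_kg_s(heat_kw: float, cp_kj_per_kg_k: float, delta_t_k: float) -> float:
    """Mass flow (kg/s) that absorbs heat_kw while warming by delta_t_k kelvin."""
    return heat_kw / (cp_kj_per_kg_k * delta_t_k)


def water_flow_l_per_min(heat_kw: float, delta_t_k: float = 10.0) -> float:
    """Volumetric water flow in litres per minute."""
    return mass_flow_kg_s(heat_kw, WATER_CP_KJ_PER_KG_K, delta_t_k) / WATER_DENSITY_KG_PER_L * 60.0


def air_flow_m3_per_s(heat_kw: float, delta_t_k: float = 15.0) -> float:
    """Volumetric airflow in cubic metres per second."""
    return mass_flow_kg_s(heat_kw, AIR_CP_KJ_PER_KG_K, delta_t_k) / AIR_DENSITY_KG_PER_M3


if __name__ == "__main__":
    load_kw = 100.0
    print(f"water: {water_flow_l_per_min(load_kw):.0f} L/min")
    print(f"air:   {air_flow_m3_per_s(load_kw):.1f} m^3/s")
```

Under these assumptions, one 100 kW rack needs about 143 litres of water per minute. The same load would need about 5.5 cubic metres of air per second (on the order of 11,700 CFM). That large volumetric gap illustrates why direct-to-chip liquid cooling is becoming a baseline requirement at these densities.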

SWOT Analysis: Grid Integration and Power Scaling for AI Workloads

AI’s immense computational strength is inextricably linked to its primary weakness in power consumption, creating a dual-edged scenario: it opens massive market opportunities for energy infrastructure development while simultaneously posing significant threats to grid stability and the pace of AI deployment itself.

GPU Performance Creates Intense Power Spikes

This chart visualizes the core SWOT trade-off, showing how the computational strength of GPUs results in a weakness: significantly higher and more intense peak power demand compared to traditional CPUs.

(Source: NVIDIA Blog)

- Strengths in computational throughput are directly causing Weaknesses in power density.

- These weaknesses create unprecedented Opportunities for investment in new energy and cooling infrastructure.

- Failure to capitalize on these opportunities elevates the Threats from a business-level concern to a systemic risk for both the tech and energy sectors.

Table: SWOT Analysis for AI Workload Power and Grid Integration

| SWOT Category | 2021 – 2023 | 2024 – 2025 | What Changed / Resolved / Validated |
|---|---|---|---|
| Strength | Growing parallel processing capability via GPUs like the NVIDIA A100 enabled larger AI models. | Unmatched computational throughput from accelerators with TDPs reaching 700 W (NVIDIA H100) allows for training and deploying foundation models. | The market validated that raw performance, despite its energy cost, is the primary competitive driver in AI, justifying massive power consumption. |
| Weakness | High power consumption per chip (e.g., 400 W) was a recognized but manageable operational expense. | Extreme power density at the rack level (over 110 kW) and facility level (100+ MW) overwhelms existing power distribution and cooling infrastructure. | The problem of power consumption scaled from a chip-level efficiency issue to a systemic, facility-wide infrastructure crisis. |
| Opportunity | A growing market for more efficient server components and power supplies to reduce operational costs in data centers. | A multi-trillion-dollar market for new grid-scale power generation, transmission, and advanced liquid cooling technologies, as estimated by McKinsey (nearly $7 trillion by 2030). | The market opportunity expanded from component-level sales to full-scale, long-term energy infrastructure projects and utility partnerships. |
| Threat | Rising electricity bills and operational costs for data center operators. | Grid instability, regional power shortages, and significant delays in AI deployment due to multi-year grid connection queues and a lack of available power. | The threat evolved from a company-level financial concern to a national-level challenge involving energy security and economic competitiveness. |

Forward Outlook: AI Growth Hinges on Coordinated Energy Infrastructure Investment

If utilities, regulators, and data center operators fail to accelerate co-investment in new power generation and transmission capacity, the expansion of artificial intelligence will be constrained not by technological innovation but by the physical availability of electricity. The most critical signal to monitor in the year ahead is the pace at which new energy projects are successfully permitted and connected to serve AI data centers.

Power Availability Is AI’s Key Bottleneck

Reinforcing the section’s forward outlook, this chart identifies power availability as the most significant bottleneck limiting the future growth of AI compute, underscoring the risk of inaction.

(Source: Epoch AI)

- If this happens: Hyperscalers like Microsoft, Google, and Amazon Web Services continue to announce and develop gigawatt-scale data center campuses. Watch this: A corresponding surge in long-term Power Purchase Agreements (PPAs) and direct utility partnerships for the construction of new power plants, including renewables, natural gas, and potentially Small Modular Reactors (SMRs), specifically dedicated to these data centers.

- If this happens: Permitting and construction timelines for new high-voltage transmission lines continue to lag behind the rapid pace of data center build-outs. Watch this: An increase in reports of major data center projects being delayed, downsized, or cancelled entirely due to multi-year waits in grid connection queues, particularly in high-demand regions.

- These could be happening: The market for on-site, behind-the-meter power generation at data center facilities will accelerate as a primary strategy to bypass grid constraints and ensure operational uptime. This would confirm that operators see grid reliability as a major business risk.

- These could be happening: Energy consumption per query (e.g., 3.0 Wh for an AI query versus 0.3 Wh for a standard search) will become a key competitive metric for AI model providers. This will drive investment in algorithmic efficiency and specialized hardware to reduce operational costs and manage power demands.
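A per-query gap matters because it scales linearly with query volume. The 3.0 Wh and 0.3 Wh figures come from the bullet above. The sketch below adds a hypothetical volume of one billion queries per day, chosen only to make the arithmetic concrete and not taken from any cited source.

```python
# Sketch: scale per-query energy to annual energy.
# 3.0 Wh (AI query) and 0.3 Wh (standard search) are from the article;
# ASSUMPTION: 1e9 queries/day is a hypothetical volume for illustration.

WH_PER_TWH = 1e12


def annual_energy_twh(wh_per_query: float, queries_per_day: float, days: int = 365) -> float:
    """Annual energy in TWh for a constant daily query volume."""
    return wh_per_query * queries_per_day * days / WH_PER_TWH


if __name__ == "__main__":
    daily_queries = 1e9
    ai = annual_energy_twh(3.0, daily_queries)
    search = annual_energy_twh(0.3, daily_queries)
    print(f"AI: {ai:.3f} TWh/yr, search: {search:.3f} TWh/yr, gap: {ai - search:.3f} TWh/yr")
```

At this assumed volume, the tenfold per-query difference amounts to roughly 1 TWh per year. This is why per-query efficiency becomes a material cost and power-planning metric at scale.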

Frequently Asked Questions

Why is AI’s power consumption suddenly a major problem for grids?

The issue has shifted from incremental increases in chip power to a ‘step-change’ in facility-level demand since 2025. While traditional server racks use 5-15 kW, new AI racks demand 30-110 kW. This has led to new data centers being designed for 100-300 MW or even 1 gigawatt, making grid power availability, not real estate, the primary bottleneck for expansion.

How much is the power demand from data centers expected to grow because of AI?

The growth is significant. Goldman Sachs Research forecasts a 165% increase in global data center power demand by 2030. Similarly, the International Energy Agency (IEA) projects that electricity demand from data centers will more than double to approximately 945 terawatt-hours (TWh) by 2030, driven almost entirely by AI.

How much money is being invested to support this AI infrastructure buildout?

A massive amount of capital is being deployed. McKinsey estimates that nearly $7 trillion in global investment will be needed for data center infrastructure by 2030. In 2025 alone, Big Tech companies are projected to spend over $400 billion on the necessary servers, networking, and power systems.

Is this increased power demand a global issue, or is it concentrated in specific areas?

While AI is a global phenomenon, the power demand surge is highly concentrated in the United States, which has become the epicenter. Forecasts indicate that U.S. data centers could consume 8-12% of the nation’s total electricity by 2030, up from 3-4% today. This creates acute strain on specific regional grids in hubs like Northern Virginia and Silicon Valley.

What is the biggest technological challenge for powering AI now compared to a few years ago?

The challenge has scaled up from the individual chip to the entire facility. From 2021-2024, the focus was on managing chip-level power (e.g., a 400W GPU). Since 2025, the primary challenge is systemic: how to deliver power to and cool entire server racks consuming over 100 kW. This has made advanced solutions like direct-to-chip liquid cooling a baseline requirement.


Erhan Eren
