AI’s Power Problem: How Grid and Cooling Constraints Are Bottlenecking Growth in 2025

AI Adoption Risk 2025: How Power and Cooling Bottlenecks Stall Commercial Projects

The primary barrier to Artificial Intelligence adoption has shifted from semiconductor availability to the physical constraints of power and cooling. This creates systemic risks that delay projects and render legacy infrastructure obsolete. Between 2021 and 2024, the central challenge was securing scarce and expensive GPUs from providers like NVIDIA. In 2025, the problem has cascaded downstream: even organizations with access to advanced chips cannot deploy them at scale because of inadequate power infrastructure and thermal management limitations.

- The power density of AI hardware now physically exceeds the capacity of traditional data centers. Rack power densities have surged from a typical 15 kW to over 100 kW for AI workloads, with projections reaching 500 kW within two years, a level that conventional air cooling cannot manage.

- This thermal barrier forces a mandatory and costly transition to advanced liquid cooling, but the supply chain for this critical technology is unprepared. A recent survey revealed that 83% of data center experts believe the supply chain cannot deliver the required cooling solutions at the necessary scale, creating a new and immediate deployment bottleneck.

- The combination of massive electricity demand and inefficient heat dissipation dramatically increases the total cost of ownership. Cooling alone can represent 40% of a data center’s electricity usage, making many large-scale AI applications economically prohibitive for all but the largest hyperscalers.

- These physical constraints directly delay AI adoption, as companies are forced into a queue for limited space in new, liquid-cooled facilities or must settle for smaller, less capable models that can run on existing infrastructure.
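To make the total-cost-of-ownership point concrete, here is a minimal back-of-envelope sketch of what a 40% cooling share implies for a facility's annual energy use.

It rests on several assumptions:
- Cooling is the only non-IT load, so lighting and power-conversion losses are ignored.
- The 10 MW IT load, the $80/MWh flat tariff, and the 10% comparison share are hypothetical inputs, not figures from the article.
- The function names are illustrative only.

```python
"""Back-of-envelope facility energy model (illustrative only).

Simplifying assumption: every watt that is not cooling is IT load, so
lighting, UPS and power-distribution losses are ignored.
"""

HOURS_PER_YEAR = 8760


def implied_pue(cooling_share: float) -> float:
    """PUE = total facility power / IT power.

    If cooling is `cooling_share` of total power and the rest is IT,
    then IT = total * (1 - cooling_share), so PUE = 1 / (1 - cooling_share).
    """
    if not 0.0 <= cooling_share < 1.0:
        raise ValueError("cooling_share must be in [0, 1)")
    return 1.0 / (1.0 - cooling_share)


def annual_energy_mwh(it_load_mw: float, cooling_share: float) -> float:
    """Total facility energy per year for a constant IT load."""
    total_mw = it_load_mw * implied_pue(cooling_share)
    return total_mw * HOURS_PER_YEAR


def annual_cost_usd(it_load_mw: float, cooling_share: float,
                    price_usd_per_mwh: float) -> float:
    """Electricity bill per year at a flat (hypothetical) tariff."""
    return annual_energy_mwh(it_load_mw, cooling_share) * price_usd_per_mwh


if __name__ == "__main__":
    IT_LOAD_MW = 10.0          # hypothetical AI hall
    PRICE_USD_PER_MWH = 80.0   # hypothetical flat tariff
    for share in (0.40, 0.10):
        print(f"cooling share {share:.0%}: "
              f"PUE {implied_pue(share):.2f}, "
              f"{annual_energy_mwh(IT_LOAD_MW, share):,.0f} MWh/yr, "
              f"${annual_cost_usd(IT_LOAD_MW, share, PRICE_USD_PER_MWH):,.0f}/yr")
```

Under these assumptions, a 40% cooling share implies a PUE of about 1.67. For the hypothetical 10 MW hall, that comes to roughly 146,000 MWh per year, versus about 97,300 MWh at a 10% share. The gap is driven entirely by cooling overhead.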

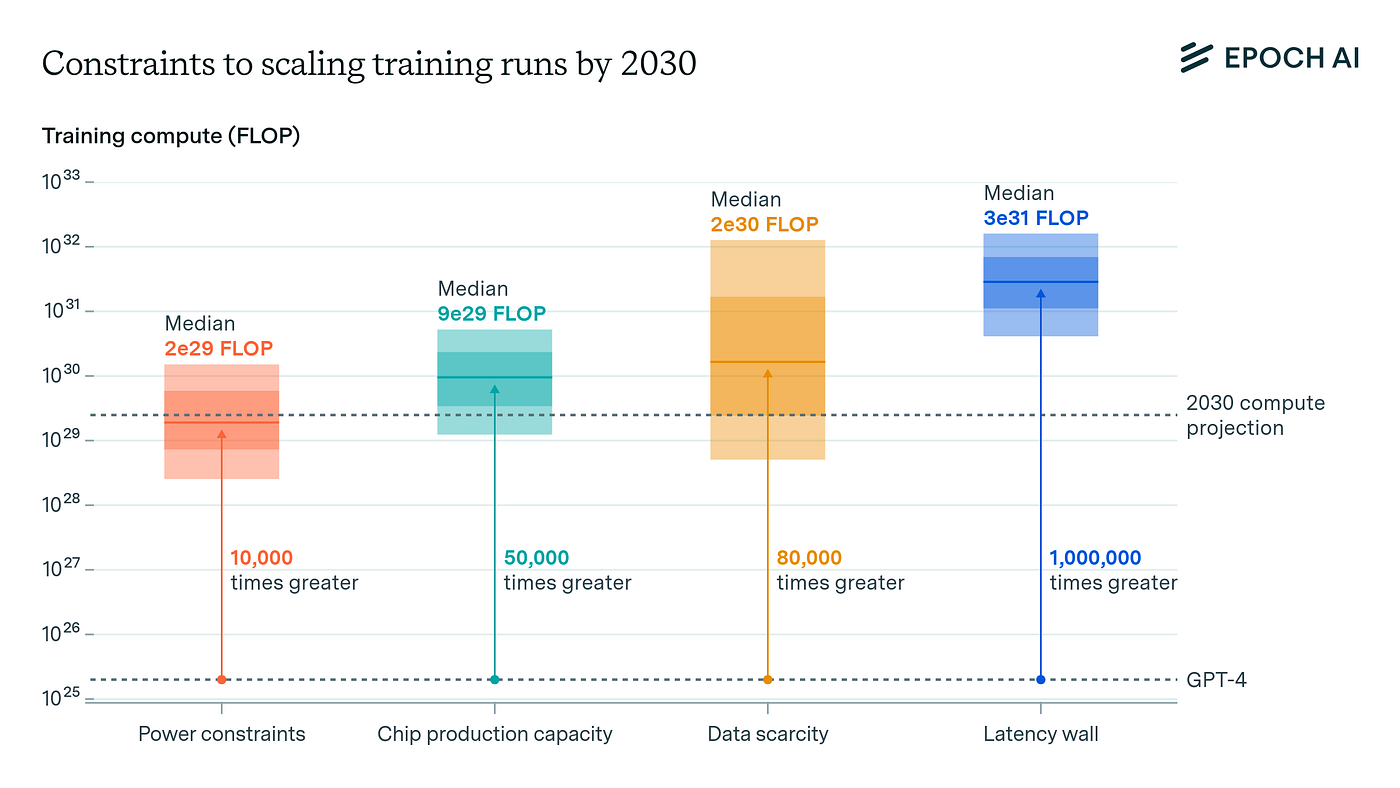

Power Availability Is AI’s Top Scaling Bottleneck

This chart quantifies power constraints as the most significant bottleneck for scaling AI compute, directly supporting the section’s argument that the primary risk has shifted to physical infrastructure.

(Source: Medium)

AI Infrastructure Investment Shifts: Capital Follows Power and Cooling Bottlenecks

Investment in the AI ecosystem is pivoting away from a primary focus on software and models toward the capital-intensive physical infrastructure required to power and cool AI compute. The market now recognizes that the most significant economic value and risk lie in controlling the physical layers of the AI stack, prompting a strategic reallocation of capital into energy, utilities, and specialized construction.

Investment Surges into Data Center Construction

This chart shows the massive capital reallocation into physical infrastructure, visualizing the sharp increase in data center construction spending that is central to the section’s theme.

(Source: MacroMicro)

- Capital formation has shifted from venture-led funding of AI startups to massive infrastructure funds targeting the physical bottlenecks. This is demonstrated by the formation of the AI Infrastructure Partnership (AIP) by firms like BlackRock and Microsoft, which aims to drive investment in data centers and their supporting power systems.

- Investment is increasingly directed at the energy value chain itself, a marked change from the 2021-2024 period. In October 2025, Brookfield and Bloom Energy announced a $5 billion strategic partnership explicitly to develop resilient power solutions for large AI data centers.

- This trend extends beyond direct power generation to the raw materials required for grid expansion. The value chain focus has broadened to include natural gas producers, turbine makers, and copper miners, as investors anticipate the massive build-out needed to support projected AI power demand.

- Hyperscalers are now behaving like utility companies to secure their growth. Google’s $20 billion renewable energy building spree, announced in December 2024, is a direct response to the threat that grid limitations pose to its AI ambitions.

Table: Major AI Infrastructure Investments (2025-Present)

| Partner / Project | Time Frame | Details and Strategic Purpose | Source |
|---|---|---|---|
| Brookfield, Qatar Investment Authority | Dec 2025 | $20 billion strategic partnership to invest in AI infrastructure in Qatar, a region with significant energy resources. This move signals a global strategy to co-locate AI compute with available power. | Brookfield and Qai Form $20 Billion Strategic Investment … |
| Brookfield, Bloom Energy | Oct 2025 | $5 billion partnership to finance and deploy resilient power solutions for AI data centers. This directly targets the power availability bottleneck by creating dedicated, on-site generation capacity. | Brookfield and Bloom Energy Announce $5 Billion … |
| Hypertec, 5C, Together AI | Jun 2025 | Up to $5 billion strategic alliance to build out AI infrastructure in Europe. This initiative aims to address the continent’s need for sovereign compute capacity by tackling physical infrastructure gaps. | Hypertec Expands into Europe forming Strategic AI Alliance |
| BlackRock, GIP, Microsoft, MGX, NVIDIA, xAI | Mar 2025 | Expansion of the AI Infrastructure Partnership (AIP), first announced in 2024, with NVIDIA and xAI joining to drive investment in and expand capacity of AI data centers and supporting power infrastructure. This acknowledges that compute and power are inseparable problems. | AI Infrastructure Partnership – BlackRock |

AI’s Geographic Shift: How Power Availability Dictates Data Center Locations

The geography of AI development is being redrawn by the search for power, funneling new data center construction away from traditional tech hubs toward regions with available and affordable energy. While proximity to users and fiber networks was a key factor between 2021 and 2024, power availability has become the dominant siting criterion in 2025, creating new regional opportunities and risks.

- Established data center markets like Northern Virginia are now facing significant constraints due to grid limitations and community opposition. In July 2025, reports highlighted growing resident pushback against new data center projects, demonstrating that social and regulatory factors are compounding grid-level bottlenecks.

- This has initiated a geographic shift toward energy-rich regions that can support AI’s massive power demand. For example, Alberta, Canada, began actively promoting its abundant natural gas reserves as a power source for Silicon Valley’s AI needs in October 2024.

- Access to renewable energy is becoming a key competitive differentiator, driving site selection to areas with favorable solar or wind profiles. Google’s move to invest $20 billion in renewable projects underscores a strategy to align its data center expansion with green energy availability, mitigating both cost and carbon risks.

- The global supply chain for chips remains geographically concentrated in places like Taiwan, but the deployment of those chips is becoming geographically distributed based on power. This creates a two-fold geographic risk: a manufacturing chokepoint in one region and a deployment bottleneck in another.

Cooling Technology Maturity: Liquid Cooling Shifts from Niche to Necessity for AI

The intense thermal output of AI accelerators has forced liquid cooling to transition from a niche, high-performance computing solution to a mainstream requirement for all new AI data centers. Between 2021 and 2024, air cooling was sufficient for the majority of enterprise data centers. As of 2025, the rise of GPUs generating over 1,000 W of heat has rendered air cooling obsolete for frontier AI, making the adoption and scaling of liquid cooling a critical-path technology.

High-Power Chips Force Shift to Liquid Cooling

The chart illustrates how rising chip power makes advanced liquid cooling a necessity, perfectly matching the section’s focus on this technological shift away from traditional air cooling.

(Source: semivision – Substack)

- The technology has reached a point of mandatory adoption due to physical limits. Racks exceeding 50 kW, now common in AI deployments, cannot be effectively cooled by air. This makes Direct-to-Chip (DTC) or immersion cooling a baseline technical specification, not an optional upgrade.

- While the need is mature, the industrial capacity to deliver these systems at scale is not. The supply chain for specialized components like coolant distribution units (CDUs), pumps, and compatible racks is a significant bottleneck, delaying the construction of AI-ready facilities.

- The industry recognized this gap during the 2021-2024 period, but the explosive growth of generative AI in 2025 outpaced the supply chain’s ability to respond. This lag between demand for liquid cooling and its availability is a primary factor constraining the build-out of new AI capacity.

- Other related technologies aim to address physical bottlenecks but remain nascent. For example, companies like Ayar Labs are developing co-packaged optical I/O to overcome the “memory wall,” but these solutions are not yet deployed at mass scale and do not solve the immediate thermal challenge.
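The physics behind "air cannot cool a 50 kW+ rack" can be sketched with the sensible-heat relation Q = ṁ · c_p · ΔT. The sketch solves for the coolant volume needed to carry a rack's heat away.

It makes these assumptions:
- Fluid properties are rounded textbook values near room temperature.
- The allowed temperature rises (15 K for air, 10 K for water) are illustrative.
- The function names are hypothetical.

Real designs depend on containment, fan curves, and coolant chemistry, none of which are modeled here.

```python
"""Coolant flow needed to carry away a rack's heat: Q = m_dot * c_p * dT.

Property values are rounded textbook figures near room temperature, and the
allowed temperature rises (DT_AIR_K, DT_WATER_K) are illustrative assumptions,
not design rules.
"""

AIR_DENSITY = 1.2        # kg/m^3
AIR_CP = 1005.0          # J/(kg*K)
WATER_DENSITY = 997.0    # kg/m^3
WATER_CP = 4180.0        # J/(kg*K)
DT_AIR_K = 15.0          # assumed inlet-to-outlet air temperature rise
DT_WATER_K = 10.0        # assumed coolant temperature rise

M3_PER_S_TO_CFM = 2118.88        # cubic feet per minute in one m^3/s
M3_PER_S_TO_L_PER_MIN = 60_000.0  # litres per minute in one m^3/s


def volumetric_flow_m3_s(heat_w: float, density: float, cp: float,
                         delta_t: float) -> float:
    """Volume of fluid per second that absorbs heat_w with a delta_t rise."""
    mass_flow_kg_s = heat_w / (cp * delta_t)
    return mass_flow_kg_s / density


def air_flow_cfm(rack_kw: float) -> float:
    """Airflow (CFM) needed to remove rack_kw of heat."""
    flow = volumetric_flow_m3_s(rack_kw * 1e3, AIR_DENSITY, AIR_CP, DT_AIR_K)
    return flow * M3_PER_S_TO_CFM


def water_flow_lpm(rack_kw: float) -> float:
    """Water flow (L/min) needed to remove rack_kw of heat."""
    flow = volumetric_flow_m3_s(rack_kw * 1e3, WATER_DENSITY, WATER_CP, DT_WATER_K)
    return flow * M3_PER_S_TO_L_PER_MIN


if __name__ == "__main__":
    # Rack densities cited in the article: legacy, air limit, current AI, projected.
    for kw in (15, 50, 100, 500):
        print(f"{kw:>4} kW rack: ~{air_flow_cfm(kw):>9,.0f} CFM of air "
              f"vs ~{water_flow_lpm(kw):>6,.0f} L/min of water")
```

With these assumed values, a 100 kW rack needs on the order of 11,700 CFM of air but only about 144 L/min of water. That is a volumetric gap of more than three orders of magnitude, which helps explain why liquid is the practical heat-transport medium at AI rack densities.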

SWOT Analysis: Navigating the Physical Leash on AI Infrastructure

The AI infrastructure market is defined by a fundamental tension between exponential demand and linear, physically constrained supply chains for power and cooling. This dynamic creates significant opportunities for incumbents and infrastructure players but also introduces systemic risks that threaten to slow overall adoption and concentrate market power.

Defining the AI Technology Stack Layers

This diagram provides a foundational overview of the AI stack, clarifying the hardware and infrastructure layers that are the subject of the section’s introductory SWOT analysis.

(Source: AI Now Institute)

- Strengths have shifted from model performance to physical asset control, while Weaknesses have propagated from chip supply to the entire energy and construction ecosystem.

- Opportunities are emerging for companies that solve physical-world problems, but Threats related to resource scarcity and market concentration have intensified.

Table: SWOT Analysis for AI Physical Infrastructure Constraints

| SWOT Category | 2021 – 2023 | 2024 – 2025 | What Changed / Validated |
|---|---|---|---|
| Strengths | High demand for AI training; dominance of software and model developers; strong VC funding for AI startups. | Unprecedented demand for compute; massive capital influx from infrastructure funds (e.g., BlackRock, Brookfield); hyperscalers’ ability to vertically integrate and secure power. | The center of power shifted from software innovation to control over physical resources. Capital from large asset managers replaced VC funding as the primary driver. |
| Weaknesses | Primary bottleneck was semiconductor scarcity (NVIDIA GPUs) and high chip costs. | Cascading bottlenecks: inadequate grid capacity, long lead times for electrical gear (transformers), immature supply chains for liquid cooling, and skilled labor shortages for construction. | The problem expanded from a single component (chips) to a systemic failure of interdependent physical infrastructure layers to keep pace with demand. |
| Opportunities | Development of new AI models and applications; growth of cloud AI services. | Economic value migrates to energy producers, utilities, and makers of cooling systems (e.g., Bloom Energy). Forced innovation in energy-efficient hardware and smaller AI models. New geographic markets open based on energy availability. | The primary economic beneficiaries are no longer just software firms, but the entities that control the physical bottlenecks, validating a shift in investment strategy. |
| Threats | Geopolitical risk in the semiconductor supply chain (e.g., reliance on TSMC). | Concentration of power among hyperscalers who can afford the infrastructure. Project delays from community opposition and permitting. Risk of stranded assets as technology outpaces facility design. | Risks became more localized and operational. The threat is no longer just a supply disruption but an inability to build and operate infrastructure even when components are available. |

2026 Outlook: Will AI Growth Stall or Will Efficiency Innovations Prevail?

For the next 12-18 months, the pace of widespread AI adoption will be dictated not by algorithmic breakthroughs, but by the industry’s success in navigating the physical constraints of power and cooling. The most critical strategic action for investors and energy professionals is to monitor signals related to the build-out of new power generation and data center capacity, as this will determine whether AI growth continues its trajectory or fragments into a tiered market.

2026 AI Infrastructure Outlook: Power and Cooling

As a 2026 forecast, this chart highlights key future trends in liquid cooling and energy storage, directly addressing the section’s forward-looking question about efficiency innovations.

(Source: PR Newswire)

- If grid connection queues remain long and transformer lead times do not improve, watch for hyperscalers like Microsoft and Google to accelerate their direct investments in power generation, effectively becoming independent utilities to secure their AI roadmaps. This would further widen the gap between AI leaders and followers.

- If the liquid cooling supply chain scales rapidly and new, more energy-efficient chip architectures from NVIDIA, Intel, or others gain traction, expect two effects. First, a partial easing of the power-per-compute ratio, allowing more enterprises to deploy meaningful AI workloads in upgraded colocation facilities. Second, a slight deceleration in the growth of data center power demand.

- The key signal confirming these trajectories will be the progress of mega-partnerships like the AI Infrastructure Partnership (AIP). If these alliances successfully bring new, powered data center capacity online by late 2025 or early 2026, it will validate that the capital-intensive infrastructure bottleneck can be overcome. If they face significant delays, it will confirm that physical constraints are placing a hard ceiling on AI’s near-term growth potential.
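Efficiency gains ease power demand only partially, because compute demand keeps growing. A simple identity shows this.

Write facility power as compute demanded, C, divided by compute delivered per watt, η. The growth rates g_C and g_η below are hypothetical values chosen only to show the mechanics; they are not forecasts.

```latex
P_t = \frac{C_t}{\eta_t}
\quad\Longrightarrow\quad
1 + g_P = \frac{P_{t+1}}{P_t}
        = \frac{C_{t+1}/\eta_{t+1}}{C_t/\eta_t}
        = \frac{1 + g_C}{1 + g_\eta}

\text{e.g. } g_C = 1.00,\ g_\eta = 0.25
\;\Rightarrow\; 1 + g_P = \frac{2.00}{1.25} = 1.60,
\ \text{i.e. } g_P = 60\%.
```

In this illustration, a 25% efficiency gain turns a doubling of compute demand into 60% power growth rather than 100%. That is a deceleration, not a reversal, consistent with the "partial easing" scenario above.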

Frequently Asked Questions

What is the biggest challenge for AI adoption in 2025, according to the article?

The primary barrier has shifted from securing scarce GPUs to the physical constraints of power and cooling. Even companies with advanced chips are unable to deploy them at scale because traditional data center infrastructure lacks the necessary power capacity and thermal management solutions to handle them.

Why can’t traditional data centers support the latest AI hardware?

Traditional data centers are not designed for the extreme power density of modern AI hardware. According to the article, rack power densities for AI have surged from a typical 15 kW to over 100 kW, a level that conventional air cooling cannot manage, rendering legacy infrastructure obsolete for frontier AI workloads.

How has investment in the AI industry changed recently?

Investment has pivoted away from a primary focus on AI software and models toward the capital-intensive physical infrastructure required to power and cool AI compute. Large infrastructure funds and asset managers, like BlackRock and Brookfield, are now investing billions directly into data centers, power generation, and the supporting energy value chain.

What specific technology, besides power grids, is creating a new bottleneck?

Advanced liquid cooling has become a major bottleneck. While the technology is now a mandatory requirement for cooling high-density AI racks, the article states that the supply chain for its components (like coolant distribution units and pumps) is unprepared to meet the explosive demand, delaying the construction of new AI-ready facilities.

How is the search for power changing the location of new AI data centers?

Power availability has become the dominant factor in deciding where to build new data centers. This is causing a geographic shift away from constrained traditional hubs like Northern Virginia and toward energy-rich regions. Companies and investors are now co-locating AI compute with available and affordable power sources, such as areas with natural gas reserves or strong renewable energy potential.



Erhan Eren
