HBM Supply Chain Risk 2026: Why Manufacturing Capacity is the Choke Point for AI Growth

HBM Adoption Risk: From Niche Component to Systemic AI Bottleneck

The rapid shift from High-Bandwidth Memory (HBM) as a specialized component to a mandatory requirement for all high-performance AI accelerators has created a systemic supply chain bottleneck, where manufacturing capacity, not chip design, now dictates the pace of AI infrastructure growth.

- Between 2021 and 2024, HBM was integrated into high-end hardware like NVIDIA's H100 GPU and Intel's Xeon Max CPUs, primarily serving the advanced HPC and early AI training markets. Its adoption was a performance choice, competing with other memory types like GDDR6.

- Starting in 2025, HBM became non-negotiable. Flagship accelerators like NVIDIA's Blackwell B200 and AMD's MI300X are designed exclusively around HBM (HBM3E on the B200, HBM3 on the MI300X), demanding unprecedented volumes. Demand far outstripped supply, with manufacturers like Micron reporting that their entire HBM production for 2025 was sold out before the year began.

- The market now defines AI accelerator performance by its HBM configuration, linking chip capability directly to memory bandwidth. An accelerator that reaches 8 TB/s of aggregate memory bandwidth with HBM3E holds a decisive advantage in large-model training, where throughput is often limited by memory rather than compute, making the memory itself the primary performance-defining component.

- The emergence of custom AI chips from hyperscalers like Microsoft, with its Maia 200 accelerator, further intensifies supply pressure. These large customers are securing direct, often exclusive, supply agreements, which concentrates the limited available HBM capacity and leaves other market participants facing shortages.

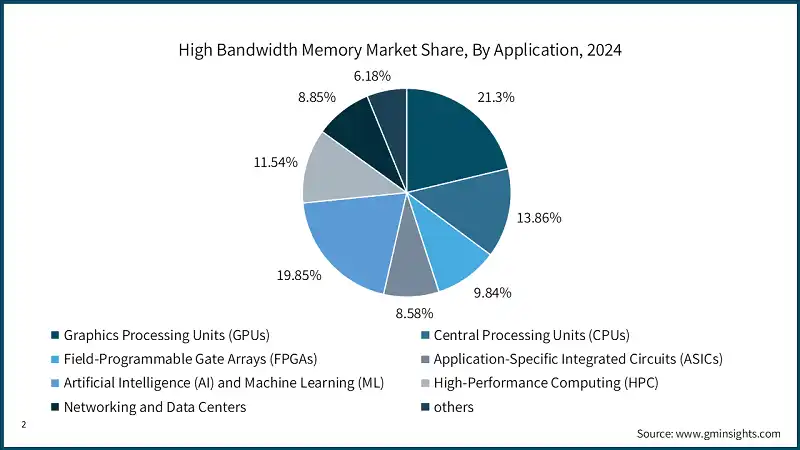

AI and GPUs Dominate HBM Demand

This chart shows that GPUs and AI are the largest applications for HBM in 2024, visually confirming the section’s point about HBM becoming a mandatory requirement for AI accelerators.

(Source: Global Market Insights)

Investment Analysis: HBM Manufacturers Commit Billions to Address Capacity Shortfall

In response to the severe supply-demand imbalance, the HBM oligopoly of SK hynix, Samsung, and Micron has initiated massive capital expenditure cycles, committing tens of billions of dollars to expand fabrication capacity, though these new facilities will take years to come online and alleviate the current market tightness.

- The scale of investment shifted dramatically after 2024. Prior activity focused on technology development, such as Samsung's HBM-PIM in 2021. From 2025 onward, investments pivoted to massive greenfield fab construction specifically for HBM.

- SK hynix has announced one of the most aggressive expansions, committing approximately $13 billion to a new plant and planning a separate $10 billion U.S.-based AI investment arm to solidify its supply chain and market leadership position.

- Micron is also expanding globally to meet demand and diversify its manufacturing footprint, planning a $9.6 billion HBM facility in Japan and a long-term $24 billion investment in a new fab in Singapore, directly targeting AI-driven demand.

- These investments confirm that the HBM shortage is not a short-term issue. The multi-year timelines for fab construction mean the current supply constraints, which are limiting AI hardware growth, will persist through at least 2026.

Table: Key Investments in HBM Manufacturing Capacity (2025-2026)

| Company / Project | Time Frame | Details and Strategic Purpose | Source |
|---|---|---|---|
| SK hynix | Announced Jan 2026 | Plans for a $10 billion AI-focused investment arm in the U.S. to secure its position in the American AI ecosystem and support its supply chain. | SK Hynix plans $10 billion AI investment arm in US |
| Micron Technology | Announced Jan 2026 | Broke ground on a new advanced wafer fabrication facility in Singapore, part of a long-term investment plan of approximately $24 billion over 10 years to meet future AI demand. | Micron Breaks Ground on Advanced Wafer Fabrication Facility … |
| SK hynix | Announced Jan 2026 | Announced a $13 billion investment in a new fabrication plant to address the severe memory chip shortage driven by AI demand and advanced packaging needs. | SK Hynix to invest $13 billion in new plant amid memory … |
| Micron Technology | Announced Nov 2025 | Plans to invest $9.6 billion in a new HBM plant in Hiroshima, Japan, to bolster its production capacity for AI memory chips. | Micron to Invest $9.6 Billion in Japan to Build AI Memory … |
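The headline figures in the table can be tallied per company to check the "tens of billions" claim. The sketch below is illustrative only: the project labels are shorthand invented here, and it adds the Micron Singapore figure at face value even though the table describes it as spread over roughly 10 years.

```python
# Announced commitments from the table, in billions of USD.
# Project labels are shorthand for this sketch, not official project names.
# Caveat: the Micron Singapore figure covers roughly 10 years of spending.
commitments = [
    ("SK hynix", "U.S. AI investment arm", 10.0),
    ("Micron Technology", "Singapore wafer fab", 24.0),
    ("SK hynix", "New fabrication plant", 13.0),
    ("Micron Technology", "Hiroshima HBM plant", 9.6),
]


def total_by_company(rows):
    """Sum announced commitments (USD billions) per company."""
    totals = {}
    for company, _project, usd_bn in rows:
        totals[company] = totals.get(company, 0.0) + usd_bn
    return totals


totals = total_by_company(commitments)
grand_total = sum(totals.values())

for company, usd_bn in sorted(totals.items()):
    print(f"{company}: ${usd_bn:.1f}B")
print(f"Total announced: ${grand_total:.1f}B")
```

Read this way, the four announcements add up to roughly $56.6 billion, though the differing time horizons mean the per-company totals are not directly comparable.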

Partnership Analysis: AI Leaders Forge Exclusive Alliances to Secure HBM Supply

To mitigate the risk of production delays, leading AI chip designers and hyperscalers are forming tight, often exclusive, partnerships with HBM manufacturers, creating a tiered market where those with secured supply can execute their roadmaps while others face significant uncertainty.

- Before 2024, partnerships were primarily technical collaborations. The dynamic shifted in 2025 to strategic supply agreements, where access to HBM became a critical competitive advantage.

- The relationship between NVIDIA and SK hynix solidified the latter's market leadership. SK hynix became the key supplier for the HBM used in NVIDIA's market-defining accelerators, giving both companies a powerful advantage.

- In a signal of market tightening, Microsoft entered an exclusive supply agreement with SK hynix in January 2026 for HBM3E destined for its next-generation Maia 200 AI chip. This move locks up a significant portion of future HBM capacity for a single buyer.

- To counter the established leaders, other players are forming new alliances. In June 2025, Intel partnered with a SoftBank subsidiary to form Saimemory, a startup focused on developing a power-efficient HBM substitute, signaling efforts to create alternative supply chains.

Table: Strategic Partnerships for HBM Supply and Development (2025-2026)

| Partner / Project | Time Frame | Details and Strategic Purpose | Source |
|---|---|---|---|
| NVIDIA & Samsung | Reported Feb 2026 | NVIDIA is reportedly urging Samsung to expedite its HBM4 development to diversify its supply chain and meet future bandwidth requirements beyond HBM3E. | Exclusive: NVIDIA Urges Samsung to Expedite HBM 4 Amid … |
| Microsoft & SK hynix | Announced Jan 2026 | SK hynix became the exclusive supplier of HBM3E memory for Microsoft's upcoming Maia 200 AI accelerator, securing a critical supply line for its internal AI hardware efforts. | AI Race Boosts SK hynix as Sole Supplier for Microsoft’s … |
| Intel & SoftBank | Reported Jun 2025 | The two companies collaborated to launch Saimemory, a startup focused on developing a more power-efficient stacked DRAM substitute for HBM, aiming to create an alternative to the current market oligopoly. | Intel and Soft Bank collaborate on power-efficient HBM … |

Geographic Concentration of HBM Manufacturing

HBM production and expertise are highly concentrated in Asia, particularly South Korea, creating significant geopolitical and supply chain risks for the global AI industry, though recent investments indicate a nascent effort to diversify manufacturing into the United States and Japan.

- Between 2021 and 2024, South Korea solidified its dominance in HBM manufacturing, with SK hynix and Samsung controlling the overwhelming majority of the market from their domestic fabs. This period established the region as the epicenter of HBM production.

- In 2025 and 2026, investment patterns began to show strategic geographic diversification. Micron announced a major $9.6 billion HBM plant in Hiroshima, Japan, strengthening Japan’s role in the advanced semiconductor supply chain.

- Signaling a major move to mitigate geopolitical risk, SK hynix announced plans in January 2026 to establish a $10 billion AI-focused investment arm in the U.S. This initiative is designed to build a stronger presence in the American market and support a more resilient supply chain.

- Despite these diversification efforts, the core intellectual property, advanced packaging integration, and mass production capacity for HBM remain centered in Asia, representing a critical vulnerability for Western AI hardware companies dependent on this supply.

Technology Maturity: HBM Shifts from R&D to Full-Scale Commercial Necessity

HBM technology has rapidly matured from a developing standard to a commercially scaled, indispensable product, with market demand now pulling the technology roadmap forward at an accelerated pace, as seen in the urgent industry-wide push for HBM4.

- In the 2021-2024 period, the market was focused on the transition from HBM2E to HBM3. Products like Samsung's HBM-PIM, announced in 2021, were innovative but represented developmental explorations of the technology's potential.

- The period from 2025 onward is defined by the rapid, large-scale commercialization of HBM3E. This latest standard, offering over 1.2 TB/s of bandwidth per stack, became the default for all new high-end AI accelerators, moving it from a next-generation technology to a current-generation commodity in short order.

- The pressure for more performance is already forcing the industry to accelerate the next standard. In February 2026, reports emerged that NVIDIA is actively pushing suppliers like Samsung to expedite HBM4, which is expected to double the per-stack interface width from 1,024 to 2,048 bits.

- This acceleration validates that HBM is no longer on a standard technology refresh cycle. Instead, its development is directly driven by the existential need of AI companies for more memory bandwidth to train ever-larger models, making its roadmap a critical dependency for the entire AI sector.
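The bandwidth figures in this section follow from simple arithmetic: peak per-stack bandwidth is the interface width multiplied by the per-pin data rate, divided by 8 bits per byte. The sketch below works this through. The 9.6 Gb/s pin rate is an assumption chosen for illustration (the article does not state one), and the HBM4 line only shows the effect of doubling the width at that same assumed rate, not a published HBM4 specification.

```python
def stack_bandwidth_gb_per_s(interface_bits: int, pin_rate_gbit_per_s: float) -> float:
    """Peak bandwidth of one HBM stack in GB/s.

    interface width (bits) x per-pin data rate (Gb/s) / 8 bits per byte.
    """
    return interface_bits * pin_rate_gbit_per_s / 8


# Assumption for illustration only; not a figure taken from the article.
ASSUMED_PIN_RATE_GBIT_PER_S = 9.6

# 1,024-bit interface, as cited for current HBM generations.
hbm3e_gb_per_s = stack_bandwidth_gb_per_s(1024, ASSUMED_PIN_RATE_GBIT_PER_S)

# 2,048-bit interface expected for HBM4, held at the same assumed pin rate.
hbm4_same_rate_gb_per_s = stack_bandwidth_gb_per_s(2048, ASSUMED_PIN_RATE_GBIT_PER_S)

print(f"1,024-bit stack: {hbm3e_gb_per_s / 1000:.2f} TB/s")
print(f"2,048-bit stack: {hbm4_same_rate_gb_per_s / 1000:.2f} TB/s")
```

Under that assumed pin rate, a 1,024-bit stack lands just above the 1.2 TB/s cited for HBM3E, and doubling the width doubles the bandwidth without requiring faster pins, which is why the wider interface matters for the roadmap.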

SWOT Analysis: HBM Market Dynamics and Constraints

The strategic importance of HBM has fundamentally reshaped its market profile, shifting the primary weakness from high cost to severe production scarcity, while the threat has evolved from competing technologies to the systemic risk of a constrained oligopolistic supply chain.

HBM Compared to Next-Generation Memory Technologies

This table compares HBM’s features against several alternatives, highlighting its core advantages and disadvantages, which directly informs the SWOT analysis discussed in the section.

(Source: www.mk.co.kr)

- Strengths have evolved from a performance advantage to an absolute necessity for competitive AI hardware.

- Weaknesses have shifted from economic (cost) to physical (manufacturing capacity).

- Opportunities have exploded from a niche market to a $100 billion projected industry.

- Threats are no longer external but internal to the supply chain’s structure and its inability to meet demand.

Table: SWOT Analysis for High-Bandwidth Memory (HBM) Market

| SWOT Category | 2021 – 2024 | 2025 – 2026 | What Changed / Resolved / Validated |
|---|---|---|---|
| Strengths | Offered superior bandwidth and power efficiency over GDDR, making it ideal for high-end GPUs like the NVIDIA H100. | HBM's performance is now indispensable for flagship accelerators (NVIDIA B200, AMD MI300X). Its ultra-wide 1024-bit interface is the only viable solution for large AI models. | HBM's architectural superiority was validated, transitioning it from a premium option to a mandatory component for high-performance AI compute. |
| Weaknesses | High cost and complex 2.5D packaging integration with an interposer limited its adoption to only the most expensive devices. | Severe manufacturing capacity constraints across the oligopoly (SK hynix, Samsung, Micron). Production is sold out for 2025 and beyond, creating a major industry bottleneck. | The primary constraint shifted from cost to physical availability. The market is willing to pay a premium, but the supply does not exist. |
| Opportunities | Growth in niche markets like high-performance computing (HPC) and high-end graphics cards. Early AI adoption was a key growth driver. | Explosive, hyper-growth demand from generative AI. Market forecasts project growth from ~$4B in 2023 to over $100B by 2028, commanding high prices and profit margins. | Generative AI created a “supercycle” for HBM, transforming its market potential from incremental growth to an exponential expansion that defines the semiconductor sector. |
| Threats | Potential competition from alternative memory architectures or improvements in traditional GDDR memory. | The oligopolistic supply structure itself is the primary threat. An inability to scale production fast enough will directly stifle AI hardware growth globally. Geopolitical risks associated with Asian-centric manufacturing. | The main threat is no longer external competition but the internal failure of the HBM supply chain to meet demand, creating systemic risk for the entire AI industry. |
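To put the Opportunities row in perspective, the forecast of roughly $4 billion in 2023 rising to $100 billion by 2028 can be converted into an implied compound annual growth rate. This is a back-of-the-envelope sketch: it treats the "~$4B" and "over $100B" endpoints as exact values, which the source does not claim.

```python
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate that takes start_value to end_value in `years`."""
    return (end_value / start_value) ** (1 / years) - 1


# Endpoints from the SWOT table, in billions of USD, treated as exact for the sketch.
cagr = implied_cagr(start_value=4.0, end_value=100.0, years=2028 - 2023)

print(f"Implied market CAGR, 2023-2028: {cagr:.1%}")
```

The result is on the order of 90% per year, meaning the market would have to nearly double annually for five years. That is well above the 40% growth rate quoted in the scenario section, which may be measuring a different quantity, such as unit demand rather than revenue.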

Scenario Modelling: HBM Supply Constraints May Bifurcate the AI Market

If HBM manufacturers cannot scale production to meet the projected 40% compound annual growth rate demanded by the AI industry, the market will likely bifurcate, with well-supplied players like NVIDIA and major hyperscalers dominating the high-end training market while other companies are forced toward less performant or alternative memory solutions for lower-end tasks.

- If this happens: The current supply bottleneck for HBM3E worsens through 2026 as new fabs from SK hynix and Micron are still under construction.

- Watch this: The price premium for HBM will continue to rise, and lead times for AI accelerators will remain extended. Watch for announcements of new, exclusive supply deals between chip designers and HBM suppliers, which will further concentrate the available capacity.

- What could follow: A secondary market for AI hardware could emerge, where companies with secured supply lease out their computational resources at a premium. Concurrently, R&D into HBM alternatives like High-Bandwidth Flash (HBF) from SanDisk will accelerate, not as a direct replacement, but as a “good enough” solution for inference workloads where capacity is more critical than HBM's write performance and latency. This would create a distinct performance and cost segmentation in the AI accelerator market.
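The scenario can be made concrete with a toy compounding model. Only the 40% demand growth rate comes from the text above. The 25% supply growth rate is invented for illustration, and starting both series at the same index of 100 is a simplifying assumption, since the article argues demand already exceeds supply.

```python
def project_gap(demand_cagr: float, supply_cagr: float, years: int,
                base_index: float = 100.0):
    """Return (year, demand, supply, unmet share of demand) for each year.

    Both series start at base_index and compound annually.
    """
    rows = []
    for year in range(years + 1):
        demand = base_index * (1 + demand_cagr) ** year
        supply = base_index * (1 + supply_cagr) ** year
        rows.append((year, demand, supply, 1 - supply / demand))
    return rows


DEMAND_CAGR = 0.40               # growth rate cited in the scenario text
HYPOTHETICAL_SUPPLY_CAGR = 0.25  # invented for illustration, not a forecast

rows = project_gap(DEMAND_CAGR, HYPOTHETICAL_SUPPLY_CAGR, years=3)
for year, demand, supply, unmet in rows:
    print(f"year {year}: demand {demand:6.1f}  supply {supply:6.1f}  unmet {unmet:5.1%}")
```

Whatever supply rate is plugged in, any value below the demand rate makes the unmet share widen every year, which is the mechanism behind the bifurcation described above.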

Frequently Asked Questions

Why is there a sudden shortage of HBM for AI chips?

According to the analysis, HBM has rapidly transitioned from a high-end option to a mandatory, non-negotiable component for all new high-performance AI accelerators like NVIDIA’s B200 and AMD’s MI300X. This universal adoption, driven by the need for extreme memory bandwidth to train large AI models, caused demand to suddenly and massively outstrip the limited global manufacturing supply. For example, Micron reported its entire 2025 HBM production was sold out before the year even started.

What is being done to solve the HBM manufacturing bottleneck?

The three main HBM manufacturers—SK hynix, Samsung, and Micron—have committed tens of billions of dollars to expand production. Key investments include SK hynix’s $13 billion for a new fabrication plant and Micron’s plans for a $9.6 billion facility in Japan and a long-term $24 billion investment in Singapore. These projects are focused on building new factories specifically to address the HBM capacity shortfall.

When will the HBM supply shortage likely end?

The report suggests the shortage will not be resolved quickly. The massive investments in new fabrication plants have multi-year construction timelines. Because these new facilities will take years to come online, the analysis concludes that the current supply constraints limiting AI hardware growth are expected to persist through at least 2026.

How are major companies like NVIDIA and Microsoft navigating this supply shortage?

Leading AI companies are forming tight, strategic partnerships to secure their supply. The report highlights that NVIDIA has a strong relationship with SK hynix. In a more direct move, Microsoft entered an exclusive supply agreement with SK hynix in January 2026 to procure HBM3E for its Maia 200 accelerator. These exclusive deals lock up future HBM capacity for a few large players, giving them a significant competitive advantage.

What is the biggest threat to the HBM market today?

The primary threat has shifted from external competition to the supply chain itself. Previously, the risk was from alternative memory technologies. Now, as the SWOT analysis indicates, the main threat is the “internal failure of the HBM supply chain to meet demand.” The oligopolistic structure and its inability to scale production fast enough pose a systemic risk that could stifle global AI hardware growth. This is compounded by the high geographic concentration of manufacturing in Asia.


Erhan Eren
