AI’s Grid Impact: Why Soaring Power Consumption Is a Systemic Risk for 2026

Industry Risks: AI Power Demand Escalates from IT Issue to Grid-Level Threat

The rapid escalation of AI power consumption has shifted from a manageable IT concern to a systemic risk for energy grids, as the predictable, incremental growth of 2021-2024 has been replaced by exponential and geographically concentrated demand in 2025.

- Between 2021 and 2024, data center power requirements grew predictably, with average rack power densities rising from 8 kW to 17 kW. This growth was accommodated by existing air-cooling technologies and allowed utilities to perform conventional, linear load forecasting.

- Starting in 2025, the commercial deployment of AI accelerators like the NVIDIA GB200 Superchip, with a thermal design power of 2,700 W, has driven rack densities beyond 50 kW and in some cases to over 100 kW. This creates concentrated load pockets that can overwhelm local substations and requires a fundamental shift to energy-intensive liquid cooling systems.

- This transition from linear to exponential demand is validated by the International Energy Agency. The agency’s projections show data center electricity consumption could more than double from 460 TWh in 2022 to over 1,000 TWh by 2026, a demand surge driven almost entirely by AI adoption.
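As a rough check on what "more than double" implies, the IEA endpoints quoted above can be converted into an implied annual growth rate. This is a minimal sketch, not part of the IEA analysis: the function name `implied_cagr` is illustrative, and it assumes smooth compounding between 2022 and 2026, which the projection itself does not claim.

```python
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate implied by two endpoint values."""
    if start_value <= 0 or end_value <= 0 or years <= 0:
        raise ValueError("values and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Endpoints quoted in the text from the IEA projection.
START_TWH, START_YEAR = 460.0, 2022
END_TWH, END_YEAR = 1000.0, 2026  # text says "over 1,000 TWh", so this is a floor

multiple = END_TWH / START_TWH
rate = implied_cagr(START_TWH, END_TWH, END_YEAR - START_YEAR)

print(f"Growth multiple 2022-2026: {multiple:.2f}x")
print(f"Implied annual growth rate: {rate:.1%}")
```

Under these assumptions the implied rate comes out a little above 21% per year, sustained for four years.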

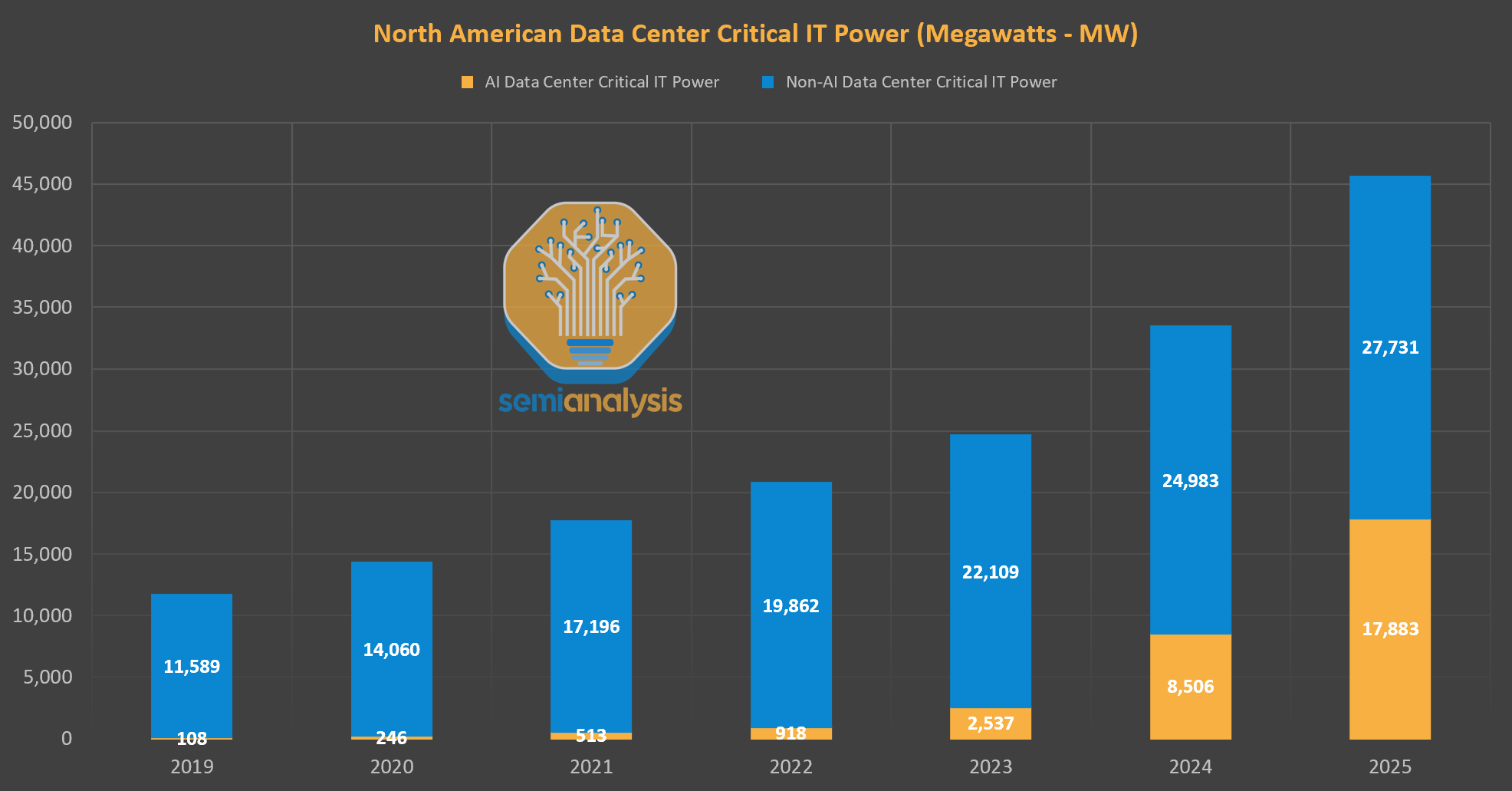

North American AI Power Demand Grows Exponentially

This chart illustrates the exponential growth in AI data center power demand projected through 2025, directly supporting the section’s argument that consumption has escalated from a manageable issue to a grid-level threat.

(Source: Epoch AI)

Geographic Hotspots: How AI’s Power Needs Are Straining U.S. Regional Grids

AI’s intense power requirements are creating geographically concentrated “demand hotspots,” particularly in the United States, moving beyond the manageable growth seen between 2021 and 2024 to a state of acute regional grid strain from 2025 onwards.

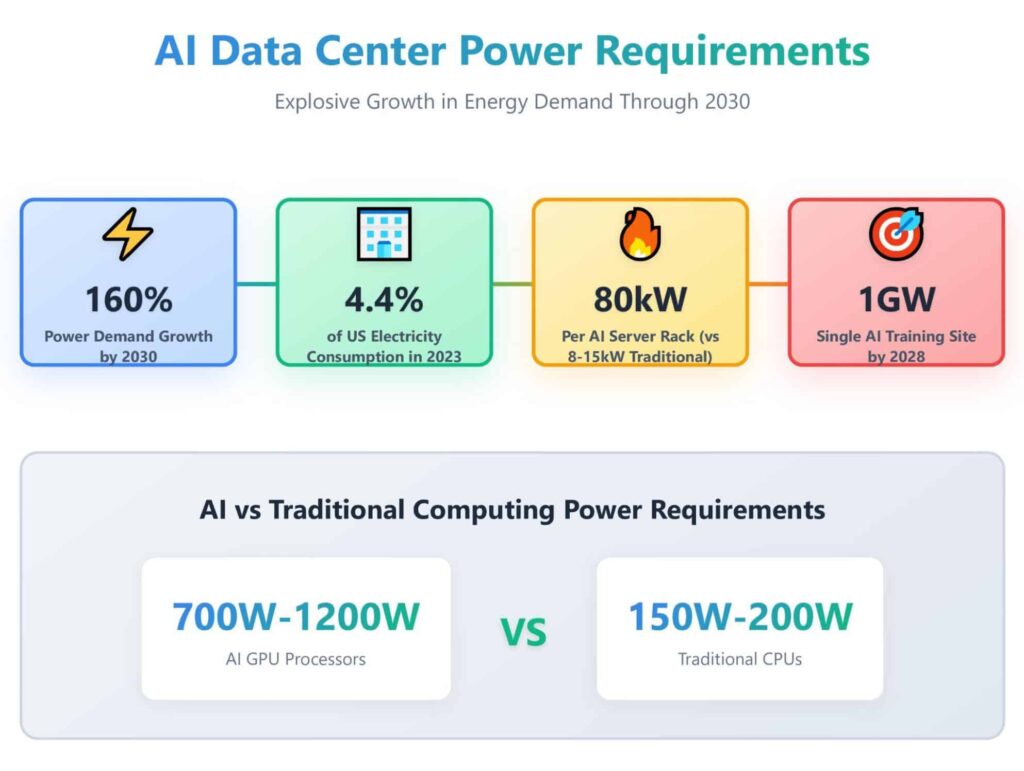

AI Power Demand Strains U.S. Electricity Grid

This infographic quantifies the strain on U.S. grids by highlighting that data centers consumed 4.4% of national electricity in 2023, a key data point cited directly in this section about geographic hotspots.

(Source: Hanwha Data Centers)

- The primary geographic focus of this power crunch is the United States, where data centers’ share of national electricity consumption grew from 3% in 2022 to approximately 4.4% by the end of 2023, reflecting the initial acceleration of AI deployments.

- Forecasts for the U.S. in 2030 show this concentration intensifying dramatically. Projections from the U.S. Department of Energy indicate data centers could consume between 6.7% and 12% of all national electricity, placing unprecedented stress on specific regional transmission networks.

- This regional strain is the leading edge of a global phenomenon. Goldman Sachs Research projects that total data center power demand will grow by 160% globally by 2030, suggesting that the U.S. experience is a preview of the infrastructure challenges that will emerge in other developed nations.
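The percentages in this section are easier to compare once they are expressed as multiples. The sketch below uses only the figures quoted above; the helper names are illustrative. One caveat is an assumption of this sketch rather than a claim from the sources: a change in *share* of national electricity equals a change in absolute consumption only if total national demand stays flat.

```python
def percent_increase_to_multiple(percent_increase: float) -> float:
    """Convert a percentage increase (e.g. 160) into a growth multiple (2.6)."""
    return 1 + percent_increase / 100


def relative_change(old: float, new: float) -> float:
    """Fractional change from old to new (0.5 means +50%)."""
    return (new - old) / old


# Figures quoted in the text.
US_SHARE_2022_PCT = 3.0
US_SHARE_2023_PCT = 4.4
DOE_2030_SHARE_RANGE_PCT = (6.7, 12.0)
GOLDMAN_GLOBAL_INCREASE_PCT = 160.0

global_multiple = percent_increase_to_multiple(GOLDMAN_GLOBAL_INCREASE_PCT)
share_jump = relative_change(US_SHARE_2022_PCT, US_SHARE_2023_PCT)
low_multiple, high_multiple = (
    share / US_SHARE_2023_PCT for share in DOE_2030_SHARE_RANGE_PCT
)

print(f"Global demand multiple by 2030: {global_multiple:.1f}x")
print(f"U.S. share change, 2022 to 2023: {share_jump:+.0%}")
print(f"DOE 2030 share vs. 2023 share: {low_multiple:.1f}x to {high_multiple:.1f}x")
```

Read this way, a 160% increase means demand reaches 2.6 times its starting level, and the upper DOE scenario implies a U.S. share roughly 2.7 times the 2023 figure.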

Technology Maturity: AI Hardware Outpaces Critical Power and Cooling Infrastructure

The technology enabling AI computation, particularly specialized GPUs, has reached commercial scale, but the supporting power and cooling infrastructure is critically lagging, creating a significant maturity gap that threatens deployment timelines and capital efficiency.

AI GPU Servers Drive Surging Power Consumption

This chart shows how power-intensive AI GPU servers are the primary driver of electricity consumption growth, visually representing the maturity gap between advanced hardware and lagging power infrastructure discussed in the section.

(Source: Institute of Energy and the Environment – Penn State)

- In the 2021-2024 period, commercially available hardware like the NVIDIA H100 GPU, with its 700 W TDP, already pushed the thermal limits of traditional air-cooled data centers and was a primary driver in raising average rack densities toward 17 kW.

- From 2025, the introduction of next-generation hardware like the NVIDIA B200 GPU (1,000 W TDP) has rendered large-scale liquid cooling a mandatory, not optional, technology for high-density deployments. This validates that the underlying server technology has definitively outpaced the maturity of building-level thermal management systems.

- This systemic immaturity is most apparent at the utility scale. Projections that single AI training sites will require up to 1 GW of power by 2028 highlight a need for direct integration with power generation assets. This capability is not commercially mature, creating a major bottleneck between possessing AI hardware and being able to power it.
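The arithmetic linking device TDP, rack density, and site scale can be sketched as follows. This is a back-of-the-envelope illustration only: it counts accelerator TDP alone and ignores CPUs, networking, fans, and power-conversion losses, so real racks hold fewer devices than shown. The PUE value of 1.3 is a hypothetical placeholder, not a figure from the article, and the function names are illustrative.

```python
import math

# Thermal design power figures quoted in the text (watts).
H100_TDP_W = 700
B200_TDP_W = 1_000
GB200_TDP_W = 2_700


def max_devices_per_rack(rack_budget_kw: float, device_tdp_w: float) -> int:
    """Upper bound on devices per rack, counting accelerator TDP only."""
    return math.floor(rack_budget_kw * 1000 / device_tdp_w)


def racks_per_site(site_power_mw: float, rack_kw: float, pue: float = 1.0) -> int:
    """Racks a site can feed after facility overhead (PUE) is deducted."""
    it_power_kw = site_power_mw * 1000 / pue
    return math.floor(it_power_kw / rack_kw)


print("H100s within a 17 kW rack:  ", max_devices_per_rack(17, H100_TDP_W))
print("GB200s within a 50 kW rack: ", max_devices_per_rack(50, GB200_TDP_W))
print("GB200s within a 100 kW rack:", max_devices_per_rack(100, GB200_TDP_W))

# A 1 GW site (1,000 MW) filled with 100 kW racks.
print("Racks at 1 GW, no overhead: ", racks_per_site(1000, 100))
print("Racks at 1 GW, PUE 1.3:     ", racks_per_site(1000, 100, pue=1.3))
```

Even with these generous simplifications, a 1 GW site corresponds to on the order of ten thousand 100 kW racks, which illustrates why the text treats such sites as a utility-scale problem rather than a building-level one.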

SWOT Analysis: Grid Constraints vs. AI Computational Strength

A SWOT analysis reveals that while the rapid advancement of AI hardware creates immense computational strength, it also exposes a critical weakness in unprepared energy infrastructure. This dynamic presents both a major opportunity for grid modernization and an immediate threat of stalled AI growth due to power constraints.

AI Training Compute Needs Show Exponential Growth

This chart illustrates the “computational strength” element of the SWOT analysis by showing the exponential increase in compute required for AI models, which underpins the growing strain on energy resources.

(Source: MIT FutureTech)

Table: SWOT Analysis for AI Workload Power Consumption

| SWOT Category | 2021 – 2023 | 2024 – 2025 | What Changed / Resolved / Validated |
|---|---|---|---|
| Strengths | Massively parallel GPUs like the NVIDIA H100 (18,432 CUDA cores) enabled training of increasingly complex AI models, establishing the hardware paradigm. | Next-generation chips like the NVIDIA Blackwell B200 deliver major performance-per-watt gains for inference, with claims of up to 25x less energy consumption than an H100 for specific tasks. | The hardware’s computational strength is validated and continues to improve. However, overall system power demand is still rising due to exponential growth in AI model size and widespread adoption, an example of the Jevons paradox. |
| Weaknesses | High component power draw (e.g., H100 at 700 W) began to stress traditional data center air cooling and pushed average rack power density to 17 kW. | Exploding rack densities (>50 kW) make legacy data center designs obsolete. The energy required for a single generative AI query is confirmed to be nearly 10x that of a standard web search. | The power consumption per task and per rack grew non-linearly, exposing the fundamental weakness of existing data center power and cooling infrastructure, which was designed for lower, more predictable loads. |
| Opportunities | Early signals of rising data center power consumption drove initial niche investments in more efficient cooling and power distribution technologies. | The scale of AI’s power demand creates a multi-billion dollar business case for grid modernization, utility-scale energy storage, and new generation sources, attracting institutional investors like Goldman Sachs. | The problem of powering AI has transitioned from a niche technical challenge to a distinct, investable market for dedicated energy infrastructure, including Power Purchase Agreements and Small Modular Reactors. |
| Threats | Forecasts from bodies like the IEA began to show significant growth in data center energy use, but it was largely viewed as a manageable, long-term cost factor. | Projections from the U.S. Department of Energy show data centers could consume up to 12% of U.S. electricity by 2030, creating a near-term threat of grid instability and development moratoriums in key regions. | The threat has shifted from a financial operating cost to a physical constraint on the growth of the entire AI industry. The inability to secure power is now a primary barrier to entry and expansion. |

Scenario Modelling: Power Availability to Emerge as Primary AI Bottleneck

The primary constraint on AI expansion in the next 18 months will not be the supply of specialized chips, but the availability of reliable, grid-scale power. This will force a strategic realignment of data center development toward regions with available energy capacity, fundamentally altering site selection criteria.

Power Availability Is Top Bottleneck for AI

This chart directly supports the section’s scenario by identifying ‘Power constraints’ as the most significant bottleneck for scaling AI, validating the argument that power availability is the primary constraint.

(Source: Epoch AI)

- If this happens: If utilities and regulators cannot accelerate grid upgrades and clear the interconnection queues for new generation, the development of large-scale AI data centers will stall in historically popular regions like Northern Virginia and Silicon Valley.

- Watch this: Watch for data center developers announcing major projects in unconventional locations with surplus power capacity or forming direct partnerships with energy producers, such as utility-scale solar farms or next-generation nuclear developers.

- These signals are already happening:

- The projected 160% increase in data center power demand by 2030, cited by Goldman Sachs, is driving a “land rush” for sites located near high-voltage substations, making power access a higher priority than fiber connectivity.

- The extreme power density of new AI racks (over 50 kW) is forcing a non-negotiable shift to liquid cooling, which increases the total facility power and water footprint and further narrows the list of viable development sites to those with massive utility resources.

- Analyst consensus confirms that chip-level efficiency gains are being overwhelmed by the growth in AI model size and adoption, validating that this is an infrastructure-level problem that cannot be solved by software or silicon optimization alone.
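The claim that efficiency gains are being overwhelmed reduces to a simple ratio: total energy changes by workload growth divided by the per-task efficiency gain. The sketch below uses the "up to 25x" efficiency claim quoted in the SWOT table; the workload growth scenarios are purely hypothetical values chosen to show the break-even point, not forecasts from any source.

```python
def net_energy_multiple(workload_growth: float, efficiency_gain: float) -> float:
    """Total energy multiple when work grows and energy per task shrinks.

    workload_growth: how many times more tasks are run (e.g. 100.0).
    efficiency_gain: how many times less energy each task needs (e.g. 25.0).
    """
    if workload_growth <= 0 or efficiency_gain <= 0:
        raise ValueError("both factors must be positive")
    return workload_growth / efficiency_gain


# Best-case per-task gain claimed for the B200 versus the H100 in the text.
CLAIMED_EFFICIENCY_GAIN = 25.0

# Hypothetical workload growth scenarios (illustrative assumptions only).
SCENARIOS = {"modest": 10.0, "break-even": 25.0, "rapid": 100.0}

results = {
    name: net_energy_multiple(growth, CLAIMED_EFFICIENCY_GAIN)
    for name, growth in SCENARIOS.items()
}

for name, energy_multiple in results.items():
    print(f"{name:>10}: workload x{SCENARIOS[name]:.0f} -> energy x{energy_multiple:.2f}")
```

Total energy falls only while workload growth stays below the efficiency gain. Once adoption and model size grow faster than per-task efficiency improves, total demand rises, which is the Jevons paradox pattern the article describes.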

Frequently Asked Questions

Why is AI’s power consumption suddenly considered a systemic risk?

The risk has escalated because demand is no longer growing predictably. As of 2025, the deployment of powerful new AI accelerators like the NVIDIA GB200 has caused an exponential, geographically concentrated surge in power needs. This is pushing rack power densities beyond 50 kW, a level that can overwhelm local substations and outpaces the capabilities of existing grid infrastructure, which was designed for slower, linear growth.

How much is data center power consumption expected to increase?

According to the International Energy Agency (IEA), global data center electricity consumption is projected to more than double, rising from 460 TWh in 2022 to over 1,000 TWh by 2026. In the U.S. specifically, the Department of Energy forecasts that data centers could consume as much as 12% of the nation’s total electricity by 2030.

Aren’t newer AI chips more energy-efficient? Why is overall power demand still rising?

Yes, next-generation chips like the NVIDIA Blackwell B200 offer significant performance-per-watt improvements for specific tasks. However, this is an example of the Jevons paradox. The efficiency gains are being overwhelmed by the exponential growth in the size of AI models and the massive global adoption of AI technology, causing the total system-level power demand to continue rising sharply.

What is the main bottleneck for AI expansion in the near future?

The article argues that the primary bottleneck for AI expansion over the next 18 months is shifting from the supply of specialized chips (like GPUs) to the availability of reliable, grid-scale power. The inability to secure power is becoming the main physical constraint and a primary barrier for companies looking to build or expand large-scale AI data centers.

How has the technology inside data centers changed to cause this problem?

The core issue is the dramatic increase in power density per rack. Between 2021 and 2024, GPUs like the NVIDIA H100 (700 W) pushed rack densities to around 17 kW. From 2025, new hardware with much higher power draws (e.g., NVIDIA GB200 at 2,700 W) is driving rack densities beyond 50 kW and sometimes over 100 kW. This renders traditional air cooling obsolete and mandates a shift to more power-intensive liquid cooling systems, further increasing the facility’s total energy footprint.



Erhan Eren
