DeepSeek’s Efficiency Paradox: Why AI Energy Demand Will Surge in 2026

DeepSeek Sparks Unprecedented AI Adoption, Fueling Data Center Energy Projects in 2026

DeepSeek’s development of low-cost, high-performance artificial intelligence has democratized access to frontier models, accelerating adoption across diverse industries and driving a counter-intuitive surge in aggregate demand for power-intensive data center infrastructure.

- Before January 2025, the development of high-performance AI was confined to a few tech giants because of prohibitive costs, with models like GPT-4 estimated to cost over $100 million to train. The announcement of DeepSeek’s models, trained for under $6 million, fundamentally lowered this economic barrier.

- This cost disruption catalyzed rapid, cross-sector commercial adoption. By April 2025, partnerships were announced with automotive firms like BMW and BYD for in-car AI and with industrial giants like XCMG to slash complex modeling times from weeks to minutes, showcasing the technology’s broad applicability.

- While each AI instance is more efficient, the sheer volume of new applications is projected to fuel, not curb, infrastructure growth. Analysts from McKinsey project that companies will invest $5.2 trillion into data centers by 2030 to meet this expanding demand, a clear signal for energy providers.

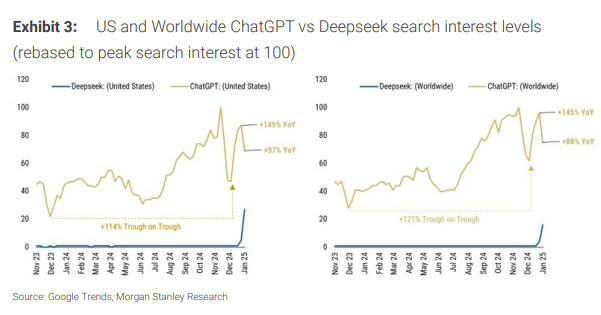

DeepSeek Search Interest Explodes in January 2025

This chart visualizes the ‘unprecedented AI adoption’ by showing the massive, near-vertical spike in public search interest for DeepSeek immediately following its key announcement in January 2025.

(Source: Next Big Teng – Substack)

AI Infrastructure Investment Analysis: Hyperscaler Spending Surges Post-DeepSeek in 2026

In a direct reaction to DeepSeek’s capital-efficient disruption, US technology leaders have massively increased AI infrastructure spending to create a competitive moat through scale, signaling a long-term, large-scale demand cycle for data center construction and energy supply.

- The “DeepSeek shock” of January 2025, when the company revealed its low training costs, triggered an immediate strategic pivot from competitors. The pivot signaled that algorithmic efficiency alone was no longer a defensible position.

- The most direct response was the formation of a $100 billion joint venture in March 2025 between OpenAI, SoftBank, and Oracle. The explicit goal of this partnership is to massively scale up AI infrastructure investment within the United States.

- This specific venture is part of a broader industry trend of escalating capital expenditure. Projections show hyperscaler CapEx, driven almost entirely by AI, soaring to an unprecedented $315 billion by 2026, more than double the level in 2023.
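The “more than double” claim implies a minimum annual growth rate, which can be checked with simple arithmetic. The sketch below is illustrative only: it assumes the 2023 baseline sits exactly at half of the $315 billion projection, which is the upper bound consistent with “more than double”. The source does not state the 2023 figure, and the function name `implied_cagr` is introduced here for illustration.

```python
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate that takes start_value to end_value."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


CAPEX_2026_BN = 315.0  # projection cited in the text, in billions of USD

# ASSUMPTION: the highest 2023 baseline still consistent with "more than double".
# The true baseline is lower, so the true growth rate is higher than this floor.
BASELINE_2023_BN = CAPEX_2026_BN / 2

floor_rate = implied_cagr(BASELINE_2023_BN, CAPEX_2026_BN, years=3)
print(f"Implied minimum annual CapEx growth, 2023-2026: {floor_rate:.1%}")
```

Under that assumption the projection implies growth of at least about 26% per year over the three years; any lower 2023 baseline pushes the figure higher.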

Hyperscaler AI CapEx Projected to Hit $315B

This chart directly supports the section’s heading, quantifying the ‘massive increase in AI infrastructure spending’ with a projection of $315 billion in hyperscaler CapEx by 2026.

(Source: Next Big Teng – Substack)

Table: AI Infrastructure Investment and Strategy Post-DeepSeek

| Partner / Project | Time Frame | Details and Strategic Purpose | Source |
|---|---|---|---|
| OpenAI, SoftBank, Oracle | March 2025 | A $100 billion joint venture to build out AI infrastructure in the US. This is a strategic response to secure leadership by creating a capital-intensive barrier against efficient models from competitors like DeepSeek. | Construction Dive |
| Hyperscaler AI CapEx | 2023 – 2026 | Total capital expenditure from major tech firms is projected to reach $315 billion by 2026. This massive build-out is explicitly driven by the need for more AI compute, fueling demand for energy and data centers. | The AI infrastructure build-out debate just got deeper |
| DeepSeek Model Training | 2024 | The company trained its DeepSeek-V3 model for approximately $5.58 million. This extreme capital efficiency served as the catalyst for the market disruption and the subsequent investment arms race. | South China Morning Post |

DeepSeek’s Strategic Partnerships with AI Infrastructure Providers Accelerate Energy Demand in 2026

DeepSeek has executed a deliberate strategy to forge a global distribution network through partnerships with major cloud and infrastructure providers, making its efficient models ubiquitously accessible and thereby increasing the total computational load on the global data center grid.

- Beginning in 2024, DeepSeek established foundational partnerships with hardware and cloud leaders to ensure broad compatibility and distribution. Key integrations included Microsoft Azure in May 2024, NVIDIA NIM APIs in July 2024, and AMD GPU support in December 2024.

- In January 2025, a critical commercial agreement with Snowflake made DeepSeek-R1 available on the Snowflake Cortex AI platform. This integration brought DeepSeek’s efficient models directly into a massive enterprise data ecosystem, enabling a new scale of computational workloads.

- These partnerships make it simple for millions of developers and enterprises to deploy AI, which multiplies the number of active AI workloads running simultaneously. This widespread activation of AI at the application layer directly translates into higher, more consistent energy consumption at the data center level.

Generative AI Value Chain Mapped Across Tiers

This chart provides essential context for the section’s discussion of partnerships, visually mapping the infrastructure layers (data centers, chips, electricity) where DeepSeek is being integrated.

(Source: IoT Analytics)

Table: Key DeepSeek Partnerships Driving AI Adoption and Infrastructure Load

| Partner / Project | Time Frame | Details and Strategic Purpose | Source |
|---|---|---|---|
| BMW | April 2025 | Integration of DeepSeek AI into vehicle models sold in China. This partnership drives real-world, at-the-edge AI inference demand, contributing to the overall compute ecosystem. | BMW Blog |
| Snowflake | January 2025 | DeepSeek-R1 model made available on the Snowflake Cortex AI platform. This move puts powerful AI tools in the hands of enterprise users, directly increasing computational workloads on cloud infrastructure. | Snowflake |
| Microsoft Azure | May 2024 | Inclusion of DeepSeek models in the Azure AI Foundry. This partnership places DeepSeek’s technology within a major hyperscaler’s infrastructure, contributing to its growing energy footprint. | Microsoft Azure |
| NVIDIA | July 2024 | Models made available on NVIDIA NIM APIs, ensuring optimized performance on enterprise-grade GPUs. This provides a scalable, high-performance path for deploying energy-intensive AI applications. | NVIDIA |

DeepSeek’s Global Expansion Strategy: From China to Developing Nations in 2026

While originating in China, DeepSeek’s open-source, low-cost models have enabled its influence and adoption to expand rapidly across the globe, creating new, geographically dispersed hubs of AI-driven energy demand far beyond traditional technology centers.

- Between 2023 and 2024, DeepSeek’s primary geographic focus was on developing its models within China and securing partnerships with major US-based technology platforms like Microsoft and NVIDIA to ensure global compatibility from day one.

- The market disruption in 2025 had a dual geographic effect. First, it catalyzed a massive, US-centric AI infrastructure investment boom, exemplified by the $100 billion OpenAI-led venture aimed at consolidating domestic leadership.

- Second, DeepSeek’s accessible technology gained significant traction in new markets. A Microsoft report from January 2026 showed DeepSeek capturing major market shares in developing nations, including 56% in Belarus, 49% in Cuba, and 43% in Russia.

- This diversification of AI usage means that the corresponding demand for energy to power data centers is becoming a distributed global requirement. Energy executives must now plan for significant growth in regions previously not considered hotspots for high-tech power consumption.

AI Model Efficiency Hits Commercial Scale, Paradoxically Driving Energy Demand in 2026

The technology for creating capital-efficient, high-performance AI models has reached commercial maturity, but this unit-level efficiency is paradoxically accelerating aggregate energy consumption by making AI applications economically viable at an unprecedented and explosive scale.

- Between 2023 and 2024, DeepSeek validated the technology with its V-series models. The release of DeepSeek-V3 in late 2024, trained for only $5.58 million, proved that frontier-level performance was achievable without billion-dollar hardware budgets.

- The technology’s commercial maturity was confirmed in January 2025, when DeepSeek’s model demonstrated performance comparable to GPT-4o, its free app briefly overtook ChatGPT in the US App Store, and the news triggered a $600 billion single-day loss in Nvidia’s market value.

- This maturation has shifted the primary economic bottleneck from model training cost to inference capacity and the associated energy needed to run the models. Analysis from Bain & Company shows LLM inference costs are plummeting by up to 40x annually, making it cheap to run AI applications.

- The result is an explosion in the volume of AI usage. The lower unit cost per query incentivizes more frequent and complex AI workloads, leading to a dramatic increase in the total, aggregated power draw from data centers. The anticipated launch of DeepSeek V4 in February 2026 will likely continue this cycle.
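The paradox described in this section reduces to one multiplication: aggregate energy equals energy per query times the number of queries. The sketch below makes the break-even condition explicit. Every input is a hypothetical illustration value, not a measurement from the source, and the function name `aggregate_energy_ratio` is introduced here. Note that the Bain figure cited above describes cost rather than energy, so treating the two as moving together is a simplifying assumption.

```python
def aggregate_energy_ratio(efficiency_gain: float, volume_growth: float) -> float:
    """Ratio of new total energy use to old total energy use.

    efficiency_gain: factor by which energy per query falls (2.0 = half the energy).
    volume_growth:   factor by which the number of queries rises.
    """
    if efficiency_gain <= 0 or volume_growth <= 0:
        raise ValueError("both factors must be positive")
    # total = per_query * queries, so the ratio is volume_growth / efficiency_gain
    return volume_growth / efficiency_gain


# HYPOTHETICAL scenarios: (efficiency gain, query volume growth)
scenarios = [(10.0, 5.0), (10.0, 10.0), (10.0, 50.0)]

for gain, growth in scenarios:
    ratio = aggregate_energy_ratio(gain, growth)
    if ratio < 1:
        trend = "falls"
    elif ratio == 1:
        trend = "is flat"
    else:
        trend = "rises"
    print(f"{gain:.0f}x efficiency, {growth:.0f}x volume -> total energy {trend} ({ratio:.1f}x)")
```

Total energy rises whenever usage grows by a larger factor than per-query efficiency improves. The section’s argument is that cheap, widely distributed models push adoption past that break-even point.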

SWOT Analysis: DeepSeek’s Impact on the AI and Energy Infrastructure Market in 2026

A strategic analysis reveals that DeepSeek’s core strength in capital efficiency has created a significant market opportunity by democratizing AI, which has in turn exposed it to geopolitical threats and the challenge of competing against the massive infrastructure investments it provoked.

- Strengths: Unmatched capital efficiency in training frontier models, enabling a disruptive low-cost, open-source strategy.

- Weaknesses: A reliance on a single internal funding source (High-Flyer Capital) and exposure to geopolitical tensions as a leading Chinese AI firm.

- Opportunities: Mass adoption in previously priced-out markets and developing nations, vastly expanding the total addressable market for the entire AI infrastructure and energy value chain.

- Threats: A reactionary, capital-intensive infrastructure build-out by US competitors (e.g., the $100B OpenAI-led JV) and the looming risk of US government restrictions citing data security concerns.

DeepSeek Disrupts Value Chain, Creating Winners, Losers

This chart serves as a visual SWOT analysis, identifying the opportunities (winners) and threats (losers) across the market, which directly aligns with the section’s strategic summary.

(Source: IoT Analytics)

Table: SWOT Analysis of DeepSeek’s Market Position and Impact

| SWOT Category | 2021 – 2024 | 2025 – Today | What Changed / Validated |
|---|---|---|---|
| Strength | Theoretical advantage in efficient R&D, demonstrated with models like DeepSeek-V2 saving 42.5% in training costs. | Proven capital efficiency with DeepSeek-V3 trained for under $6 million, directly challenging OpenAI’s $100M+ cost structure. | The “efficiency advantage” was validated as a commercially disruptive force, not just a research outcome, triggering a market-wide re-evaluation of AI economics. |
| Weakness | Status as a new entrant with unproven commercial models, funded internally by parent company High-Flyer Capital. | Increased geopolitical scrutiny, with US government bodies moving to restrict use of DeepSeek in February 2025, citing security concerns. | Success transformed DeepSeek from an unknown startup into a strategic national asset for China, attracting significant attention and risk from foreign governments. |
| Opportunity | Potential to democratize AI by open-sourcing models and offering low-cost APIs, but limited adoption. | Rapid commercial adoption (BMW, XCMG) and market penetration in developing nations (56% share in Belarus by 2026). | The opportunity to expand the AI market was validated. DeepSeek’s low-cost approach created demand in sectors and regions that were previously priced out. |
| Threat | Competition from established, well-funded US AI labs like OpenAI and Anthropic. | Competitors responded with a massive infrastructure arms race, including the $100 billion OpenAI-SoftBank-Oracle JV, to re-establish a capital-intensive moat. | The competitive threat shifted from purely algorithmic to one of overwhelming infrastructure scale. DeepSeek’s efficiency forced competitors to double down on their primary advantage: access to capital. |

Future Outlook: AI Energy Consumption Set to Rise Despite Model Efficiency Gains in 2026

The critical forward-looking trend for the energy sector is that aggregate demand for AI-powering electricity will continue to accelerate, as algorithmic efficiency gains are far outpaced by the exponential growth in AI adoption and application complexity that DeepSeek has unleashed.

- The anticipated launch of DeepSeek V4 in February 2026 is expected to offer superior performance and efficiency, which will further stimulate demand for AI applications and, consequently, the energy to run them.

- Massive capital investments from hyperscalers, including the $100 billion US-based joint venture and a projected $315 billion in total AI CapEx by 2026, are a clear leading indicator that industry leaders are preparing for a future of massive, energy-intensive AI workloads.

- DeepSeek’s continued expansion into developing nations confirms that AI-driven energy demand is becoming a geographically distributed, global phenomenon, requiring a strategic response from international energy providers.

- The debate sparked by DeepSeek has shifted from whether AI efficiency will curb energy use to how energy providers and infrastructure developers will meet the needs of a world where ubiquitous, low-cost AI workloads are the new normal.

AI Hardware Power Consumption Shows Exponential Growth

This chart perfectly supports the future outlook, visually confirming that ‘aggregate demand for…electricity will continue to accelerate’ by showing the exponential power draw of newer AI hardware.

(Source: LinkedIn)

Frequently Asked Questions

Isn’t it a contradiction that more efficient AI models will lead to higher energy consumption?

This is the core of the ‘efficiency paradox.’ While each individual AI query is more efficient and cheaper thanks to DeepSeek’s technology, the extremely low cost has dramatically increased access and adoption. This has caused an explosion in the sheer volume of AI applications across many industries (e.g., BMW, XCMG). The massive increase in the total number of AI workloads is projected to far outweigh the efficiency savings of each individual task, leading to a surge in aggregate energy demand from data centers.

What was the ‘DeepSeek shock’ and how did other major tech companies react?

The ‘DeepSeek shock’ refers to the January 2025 revelation that the company had trained a frontier AI model for under $6 million, a fraction of the $100+ million cost for competitors like OpenAI. This proved that algorithmic efficiency alone was no longer a competitive advantage. The immediate reaction from US tech leaders was to pivot to a strategy of overwhelming scale, exemplified by the March 2025 formation of a $100 billion joint venture between OpenAI, SoftBank, and Oracle specifically to build out massive AI infrastructure as a new competitive barrier.

What is the scale of the financial investment in AI infrastructure mentioned in the report?

The report highlights two key figures. First, a specific $100 billion joint venture was announced in March 2025 by OpenAI, SoftBank, and Oracle to build out US-based AI infrastructure. Second, this is part of a broader industry trend where total capital expenditure (CapEx) from major tech firms, driven by AI, is projected to reach $315 billion by 2026—more than double the spending level in 2023.

How is DeepSeek’s technology being adopted so widely?

DeepSeek’s rapid adoption is driven by a deliberate strategy of forming partnerships with major cloud and hardware providers. Key integrations with Microsoft Azure (May 2024), NVIDIA NIM APIs (July 2024), and the Snowflake Cortex AI platform (January 2025) have made its efficient models readily available to millions of developers and enterprise users, directly embedding them into existing ecosystems and accelerating their use.

Is the increased energy demand for AI concentrated only in the US?

No, the demand is becoming a global phenomenon. While the US is experiencing a massive infrastructure investment boom, DeepSeek’s accessible technology is also gaining significant market share in developing nations. A January 2026 report noted its high adoption rates in countries like Belarus (56% market share), Cuba (49%), and Russia (43%). This geographic diversification means that new, distributed hubs of AI-driven energy demand are emerging worldwide.


Erhan Eren
