Grid-Aware AI 2025: How Resilient Models Thrive Under Energy & Compute Constraints

From Cloud to Edge: 2025 Adoption Shifts for AI in Energy Grid Management

The adoption of Artificial Intelligence in the energy sector has pivoted from cloud-dependent processing to resilient, edge-based systems, a shift driven by the urgent need for grid stability and real-time operational autonomy. This transition marks a fundamental change in how AI delivers value, prioritizing efficiency and low-latency decision-making directly at the infrastructure level.

- Between 2021 and 2024, AI applications for tasks like predictive maintenance primarily relied on sending sensor data to centralized cloud platforms for analysis. The strategy was to leverage the immense power of data centers, with optimization being a software-level concern to reduce cloud costs rather than a core architectural principle.

- Starting in 2025, the model inverted due to grid constraints and the high cost of data transmission. Commercial adoption now focuses on deploying AI at the grid’s edge for intelligent management. For example, Emerald AI demonstrated a 25% reduction in an AI factory’s power usage during peak grid stress, proving the viability of localized AI to alleviate system-wide strain.

- The current wave of adoption is validated by its direct economic and operational impact. Deloitte estimates that investing in AI for infrastructure resilience can cut disaster-related losses, including those from power outages, by 15%. Edge systems contribute to this because they can react to supply fluctuations and isolate faults immediately, without waiting for a signal from a remote data center.
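
To make the latency argument concrete, here is a minimal sketch of the kind of rule an edge device can evaluate on every sensor reading without a round trip to the cloud. The thresholds, the `Reading` type, and the `local_action` function are hypothetical illustrations, not values from any grid code or vendor product. A production system would typically pair a trained model with protection-grade logic rather than rely on two fixed thresholds.

```python
from dataclasses import dataclass

# Hypothetical settings for a 50 Hz grid; real values come from the local grid code.
CURTAIL_BELOW_HZ = 49.8      # reduce flexible load when frequency sags below this
ISOLATE_ABOVE_AMPS = 400.0   # open the local breaker when feeder current exceeds this


@dataclass
class Reading:
    frequency_hz: float
    feeder_current_a: float


def local_action(reading: Reading) -> str:
    """Decide on-device, with no dependency on a remote data center."""
    if reading.feeder_current_a > ISOLATE_ABOVE_AMPS:
        return "isolate"   # fault-like overcurrent: isolate the feeder
    if reading.frequency_hz < CURTAIL_BELOW_HZ:
        return "curtail"   # supply shortfall: shed flexible load
    return "normal"


if __name__ == "__main__":
    for r in [Reading(50.01, 120.0), Reading(49.75, 130.0), Reading(49.9, 850.0)]:
        print(r, "->", local_action(r))
```

The fault check runs first because isolating an overcurrent takes precedence over load balancing; the ordering is a design assumption of this sketch.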

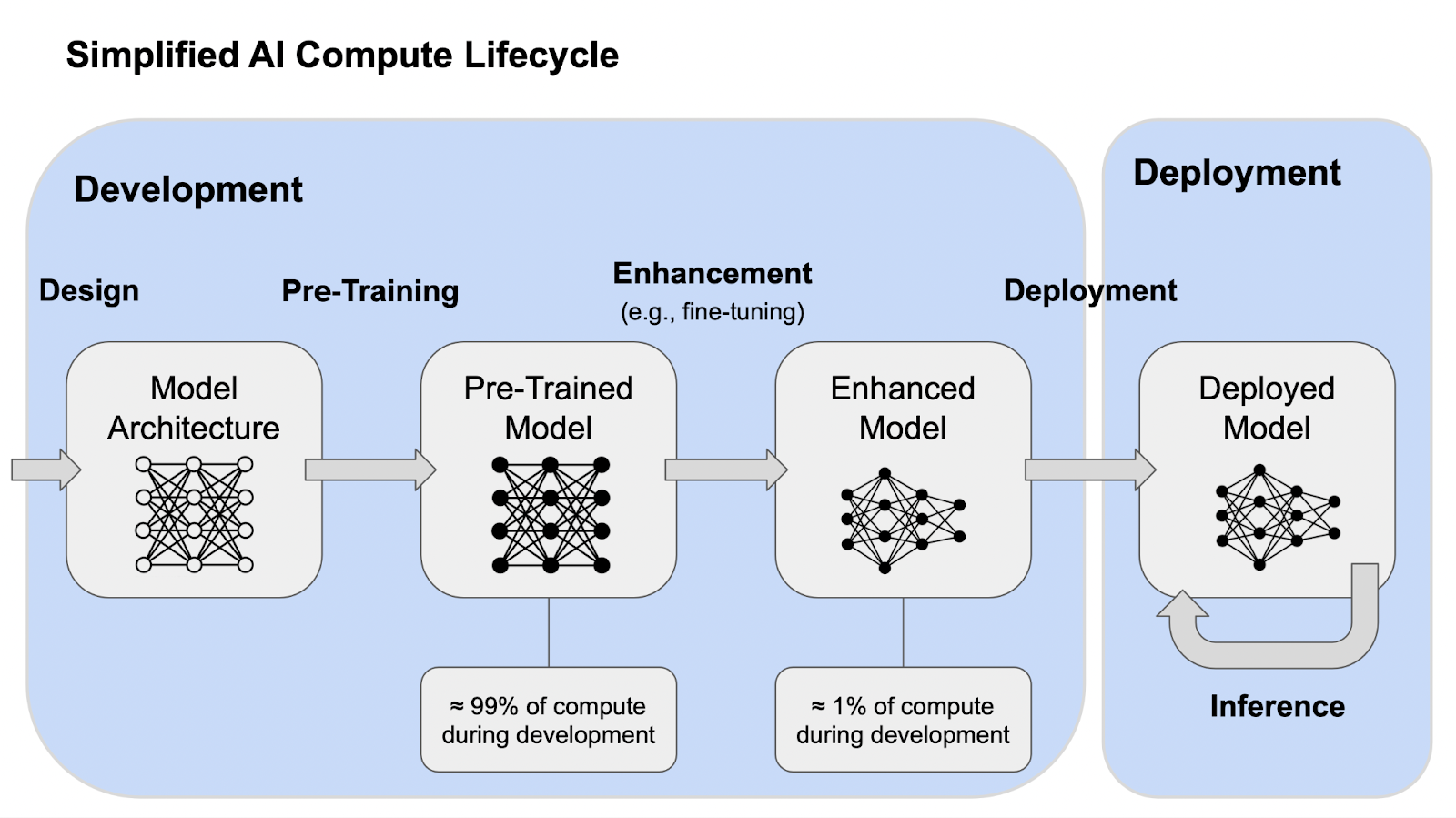

AI Lifecycle Separates Heavy Training From Lightweight Deployment

This chart illustrates the fundamental split between compute-intensive development and lightweight deployment, which is the core concept driving the cloud-to-edge shift described in the section.

(Source: Institute for Law & AI)

Geographic Hotspots: Where Resilient AI Architectures Are Deployed for Grid Stability

While North America historically led large-scale AI development in centralized data centers, the global imperative for grid modernization and Distributed Energy Resource (DER) integration is now driving the deployment of constraint-aware AI architectures worldwide. The focus has moved from where models are trained to where they can operate most effectively and resiliently.

North America and China Dominate AI Market

This chart provides geographic market data that directly supports the section’s discussion of geographic hotspots, highlighting North America’s dominance in the AI landscape.

(Source: ABI Research)

- From 2021 to 2024, North America dominated the AI landscape, characterized by the growth of large generative models housed in hyperscale data centers, as shown by ABI Research data. The primary geographic focus was on regions with dense concentrations of tech talent and cloud infrastructure.

- In 2025, the geographic distribution of advanced AI deployment began to diversify. The projected growth of China’s generative AI market signals a broader, global push for AI capabilities that necessitates diverse infrastructure strategies. For critical infrastructure, this means deploying intelligence locally.

- The need for grid resilience is a global problem, making the enabling technologies from companies like Arm (UK), NVIDIA (US), and various RISC-V proponents (global) critical in all major industrial markets. Research and development efforts, such as Huawei’s work in Canada on hardware-efficient AI, further underscore the international nature of this architectural shift.

From R&D to Grid Reality: The Maturation of Constraint-Aware AI Architectures in 2025

The technology enabling resilient AI has progressed from niche research and software workarounds into a mature, commercially deployable ecosystem defined by the strategic co-design of hardware and software. This integration is what allows powerful AI to function within the tight power and processing budgets of edge devices on the energy grid.

The AI Computational Stack: Hardware and Software Layers

As the section discusses the maturation of AI through hardware-software co-design, this chart visually breaks down the foundational technology stack that enables this integration.

(Source: AI Now Institute)

- In the 2021-2024 period, the primary approach was software-based model optimization. Techniques like quantization, pruning, and knowledge distillation were used to shrink large, pre-trained models to run on existing hardware. This was a reactive measure to make cloud-trained AI fit on smaller devices.

- The period from 2025 to today is characterized by a proactive, systemic approach centered on hardware-software co-design. This strategy involves developing AI models and the silicon they run on in tandem to maximize efficiency. This ensures that the AI application, its model, and the chip are fully optimized for a specific task, such as real-time fault detection.

- The market’s maturity is evident in the product roadmaps of major silicon vendors. NVIDIA’s upcoming Rubin platform emphasizes co-design for intelligence production at scale. Simultaneously, the open-standard RISC-V architecture allows companies to create highly specialized, low-power AI processors for specific grid applications, breaking the reliance on general-purpose GPUs.

- Emerging paradigms such as analog and photonic computing, previously confined to research labs, have now been demonstrated as candidate platforms for next-generation AI hardware, with the potential for orders-of-magnitude gains in energy efficiency.
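
Two of the software techniques named above can be sketched in a few lines. The example below shows symmetric per-tensor int8 post-training quantization and magnitude pruning on a plain list of weights; it is a simplified illustration under those assumptions, not the pipeline of any particular framework. Knowledge distillation is omitted because it requires a training loop.

```python
import random


def quantize_int8(weights):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float weights from their int8 codes."""
    return [v * scale for v in q]


def magnitude_prune(weights, sparsity):
    """Zero out the `sparsity` fraction of weights with the smallest magnitude."""
    k = int(len(weights) * sparsity)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:k]:
        pruned[i] = 0.0
    return pruned


if __name__ == "__main__":
    random.seed(0)
    weights = [random.gauss(0.0, 0.5) for _ in range(1000)]
    q, scale = quantize_int8(weights)
    restored = dequantize(q, scale)
    max_err = max(abs(a - b) for a, b in zip(weights, restored))
    print(f"scale={scale:.5f}, max reconstruction error={max_err:.5f}")
    pruned = magnitude_prune(weights, 0.5)
    print("zeroed weights:", sum(1 for w in pruned if w == 0.0))
```

Stored as int8, the quantized weights take a quarter of the memory of float32 weights, which is why quantization helps models fit edge hardware. The reconstruction error (at most half the scale per weight) is a small-scale view of the accuracy trade-off that must be calibrated.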

SWOT Analysis: Resilient AI Architectures in Energy and Compute-Constrained Markets

The strategic imperative for AI in the energy sector has shifted from treating compute limitations as a technical hurdle to overcome to leveraging them as a commercial strength. This creates more resilient, secure, and economically viable systems that do not depend on massive, centralized data centers.

Key Risks and Concerns Fueling AI Distrust

This chart details key risks like data security and bias, providing a deeper look into the ‘Weaknesses’ and ‘Threats’ quadrants of the SWOT analysis introduced in this section.

(Source: Nature)

- Strengths: Constraint-aware AI provides high energy efficiency, operational autonomy, and low latency, which are critical for real-time grid management.

- Weaknesses: The ecosystem for custom silicon is still maturing, and aggressive model optimization can sometimes lead to minor trade-offs in accuracy that must be managed.

- Opportunities: The global market for grid modernization, DER integration, and predictive maintenance for remote energy assets represents a massive commercial opportunity.

- Threats: Supply chain vulnerabilities for specialized processors and the high initial capital cost of developing custom chips remain significant barriers for some market entrants.

Table: SWOT Analysis for Resilient AI Architectures

| SWOT Category | 2021 – 2024 | 2025 – Today | What Changed / Resolved / Validated |
|---|---|---|---|
| Strengths | Mature software optimization tools like quantization and pruning were available to reduce model size. Focus was on improving cloud AI efficiency. | High energy efficiency (TOPS/W), low latency, and operational autonomy for edge devices are the primary strengths. Local processing enhances data privacy and security. | The value proposition shifted from cost savings in the cloud to enabling resilient, real-time operations at the edge. Emerald AI’s 25% power reduction during grid stress validated the direct infrastructure benefit. |
| Weaknesses | The hardware ecosystem was fragmented, with a heavy reliance on general-purpose chips. Deploying AI at the edge was complex and often required significant custom engineering. | The ecosystem for custom RISC-V chips is still developing, creating a talent and tooling gap. Over-aggressive optimization can potentially impact model accuracy if not carefully calibrated. | The weakness is shifting from a lack of hardware to the complexity of designing new, specialized hardware. Hardware-software co-design is powerful but requires new skill sets. |
| Opportunities | The primary opportunity was seen in consumer IoT devices and smartphones, where power and cost were key drivers for efficient AI. | The market for intelligent grid management, DER optimization, and autonomous systems in remote locations (pipelines, offshore platforms) is now a primary driver. | The opportunity grew from niche devices to critical national infrastructure. Deloitte’s forecast of a 15% reduction in disaster-related losses quantifies the massive economic potential in the energy and industrial sectors. |
| Threats | The dominance of cloud hyperscalers made it difficult for independent edge AI business models to compete for complex workloads. | Supply chain bottlenecks for high-end and specialized GPUs and accelerators. High non-recurring engineering (NRE) costs for custom silicon development can be prohibitive. | The threat evolved from market competition with the cloud to physical and economic constraints on hardware. The focus on brute-force scale created its own supply chain and energy consumption bottlenecks. |
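
The TOPS/W figure in the table converts directly into energy per inference: 1 TOPS/W is 10^12 operations per second per watt, which equals 10^12 operations per joule. The sketch below applies that conversion; the model size, inference rate, and efficiency values are hypothetical, and real chips usually run well below their peak rated TOPS/W.

```python
def energy_per_inference_joules(ops_per_inference: float, tops_per_watt: float) -> float:
    """1 TOPS/W = 1e12 ops/s per watt = 1e12 ops per joule."""
    return ops_per_inference / (tops_per_watt * 1e12)


def average_power_watts(ops_per_inference: float,
                        inferences_per_second: float,
                        tops_per_watt: float) -> float:
    """Sustained power = energy per inference x inference rate."""
    return energy_per_inference_joules(ops_per_inference, tops_per_watt) * inferences_per_second


if __name__ == "__main__":
    ops = 2e9  # hypothetical model: 2 billion operations per inference
    for eff in (1.0, 10.0):  # hypothetical chip efficiencies in TOPS/W
        joules = energy_per_inference_joules(ops, eff)
        watts = average_power_watts(ops, 100, eff)
        print(f"{eff:>4} TOPS/W: {joules:.1e} J/inference, {watts:.3f} W at 100 inferences/s")
```

Because power scales inversely with TOPS/W, a tenfold efficiency gain cuts the sustained draw of the same workload tenfold, which is what makes always-on inference feasible on power-limited grid equipment.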

2026 Outlook: If Grid Constraints Intensify, Watch for an Acceleration in Hardware-Software Co-Design

The primary strategic action for energy stakeholders is to prepare for a rapid acceleration in the deployment of purpose-built, co-designed AI systems as the twin costs of grid instability and AI energy consumption continue to rise.

- If this happens: Grid operators increasingly rely on demand-response programs that require large energy users, including data centers, to curtail power, as was piloted with Emerald AI.

- Watch this: An increase in partnerships between utility companies and AI hardware firms specializing in custom silicon. Also, watch for an uptick in job postings for RISC-V architects and hardware-aware machine learning engineers at major industrial and energy companies.

- This may already be happening: Major utilities are already launching large-scale pilot projects for edge AI-based DER management. The technology announcements from established players like NVIDIA with its Rubin platform and venture funding for startups in novel paradigms like analog computing are early confirmations of this trajectory.
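
The demand-response scenario above, where a large energy user such as a data center must cut its draw on request, can be sketched as a simple planning step. This is a hypothetical greedy heuristic that pauses deferrable, low-priority jobs until a target reduction is reached; it is not Emerald AI’s method, and the `Job` fields and example workloads are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class Job:
    name: str
    power_kw: float
    priority: int      # higher = more important, curtailed last
    deferrable: bool   # e.g. batch training can wait; live inference cannot


def plan_curtailment(jobs, reduction_fraction):
    """Greedily pause deferrable, lowest-priority jobs until the target is met.

    Returns (paused job names, kW shed, kW target). If shed < target, the
    deferrable jobs alone cannot meet the request.
    """
    total_kw = sum(j.power_kw for j in jobs)
    target_kw = total_kw * reduction_fraction
    shed_kw = 0.0
    paused = []
    candidates = sorted((j for j in jobs if j.deferrable),
                        key=lambda j: (j.priority, -j.power_kw))
    for job in candidates:
        if shed_kw >= target_kw:
            break
        paused.append(job.name)
        shed_kw += job.power_kw
    return paused, shed_kw, target_kw


if __name__ == "__main__":
    jobs = [
        Job("train-a", 400.0, 1, True),
        Job("train-b", 250.0, 2, True),
        Job("inference", 300.0, 9, False),
        Job("eval", 50.0, 1, True),
    ]
    print(plan_curtailment(jobs, 0.25))
```

Pausing whole jobs can overshoot the target; a finer-grained design would cap the power of running jobs instead of stopping them outright.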

Frequently Asked Questions

Why is the energy sector moving AI from the cloud to the edge?

The shift is driven by the urgent need for grid stability, real-time operational autonomy, and the high cost of data transmission. Edge-based AI offers low-latency decision-making, allowing systems to instantly react to power fluctuations and isolate faults without waiting for signals from a remote data center, thus improving grid resilience.

What is ‘hardware-software co-design’ and why is it a key trend for 2025?

Hardware-software co-design is a strategy where AI models and the silicon chips they run on are developed in tandem to maximize efficiency. It is a key trend because it allows powerful AI to function within the tight power and processing budgets of edge devices, making it possible to deploy sophisticated AI for real-time grid management.

What are the main benefits of using resilient, edge-based AI for grid management?

The primary benefits are greater energy efficiency, operational autonomy, and low-latency responses critical for real-time control. According to the article, this leads to significant economic and operational impacts, such as a 25% reduction in power usage during grid stress (demonstrated by Emerald AI) and a potential 15% reduction in disaster-related losses like power outages.

Which technologies and companies are driving this architectural shift?

The shift is being driven by advances in specialized, low-power processors. Key players and technologies mentioned include Arm (UK), NVIDIA (US) with its Rubin platform, and proponents of the open-standard RISC-V architecture, which enables custom chip design. Research from firms like Huawei and emerging paradigms like analog computing are also contributing.

What is the biggest weakness or threat to the adoption of these resilient AI systems?

The main threats are supply chain vulnerabilities for specialized processors and the high initial capital cost (non-recurring engineering costs) of developing custom silicon chips, which can be a significant barrier for new market entrants. Additionally, the ecosystem for custom silicon design is still maturing, creating a potential talent gap.


Erhan Eren
