AI Grid Strain 2026: Why Data Center Electricity Demand Threatens Global Power Stability

Industry Risks: AI Electricity Demand Shifts from Data Center Problem to Grid-Level Crisis

The exponential growth of artificial intelligence (AI) has created a structural dependency on massive, concentrated power sources, shifting the core operational risk from internal data center management to external grid availability and stability. Before 2025, the industry focused on improving power usage effectiveness (PUE) within the facility; today, the primary constraint is securing enough gigawatts from a power grid that was not designed for this kind of load growth.

- Between 2021 and 2024, the primary concern was the computational intensity of AI, with an AI query consuming nearly ten times the electricity of a traditional search (2.9 Wh vs. 0.3 Wh). This pushed average rack power densities from 8 kW to 17 kW, so solutions focused on localized cooling and power distribution.

- From January 2025 onward, the problem scaled to the grid level. Projections now indicate that AI data centers could account for up to 12% of total U.S. electricity demand by 2030. This has exposed the grid’s inability to supply new gigawatt-scale campuses, shifting the risk from hardware efficiency to the fundamental availability of generation capacity.

- The adoption of power-hungry GPUs, which require 5 to 10 times more power than traditional server chips, is no longer just a cooling challenge. It now creates large, inflexible blocks of baseload demand that strain grid operations and have led to wholesale electricity cost increases of up to 267% in areas with high data center concentration.

- The operational model has shifted from leasing colocation space to developing massive, power-first campuses. The need for constant, 24/7/365 power for AI operations necessitates firm backup from sources like natural gas or nuclear, revealing the inadequacy of intermittent renewables alone for these critical loads.
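The multipliers in the list above are easy to misread, so here is a minimal Python sketch that reproduces them. It assumes a hypothetical volume of one billion queries per day and a hypothetical baseline wholesale price of $50/MWh; neither figure comes from the sources cited here. Note that an increase "of up to 267%" means prices reaching roughly 3.67 times their baseline, not 2.67 times.

```python
AI_QUERY_WH = 2.9      # Wh per AI query (figure quoted above)
SEARCH_QUERY_WH = 0.3  # Wh per traditional search (figure quoted above)


def ratio(new: float, old: float) -> float:
    """How many times larger `new` is than `old`."""
    return new / old


def daily_energy_mwh(queries_per_day: float, wh_per_query: float) -> float:
    """Daily energy in MWh for a given query volume (1 MWh = 1e6 Wh)."""
    return queries_per_day * wh_per_query / 1e6


def price_after_increase(base_price: float, pct_increase: float) -> float:
    """Price after a percentage increase; +267% means 3.67x the base price."""
    return base_price * (1 + pct_increase / 100)


if __name__ == "__main__":
    print(f"AI vs search energy per query: {ratio(AI_QUERY_WH, SEARCH_QUERY_WH):.2f}x")
    print(f"Rack density growth, 8 -> 17 kW: {ratio(17, 8):.2f}x")
    queries = 1e9  # HYPOTHETICAL daily query volume, for illustration only
    print(f"AI: {daily_energy_mwh(queries, AI_QUERY_WH):,.0f} MWh/day, "
          f"search: {daily_energy_mwh(queries, SEARCH_QUERY_WH):,.0f} MWh/day")
    base = 50.0  # HYPOTHETICAL baseline wholesale price in $/MWh
    print(f"${base:.0f}/MWh after +267%: ${price_after_increase(base, 267):.2f}/MWh")
```

At that hypothetical volume, the per-query figures work out to about 2.9 GWh per day for AI queries versus about 0.3 GWh for traditional search.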

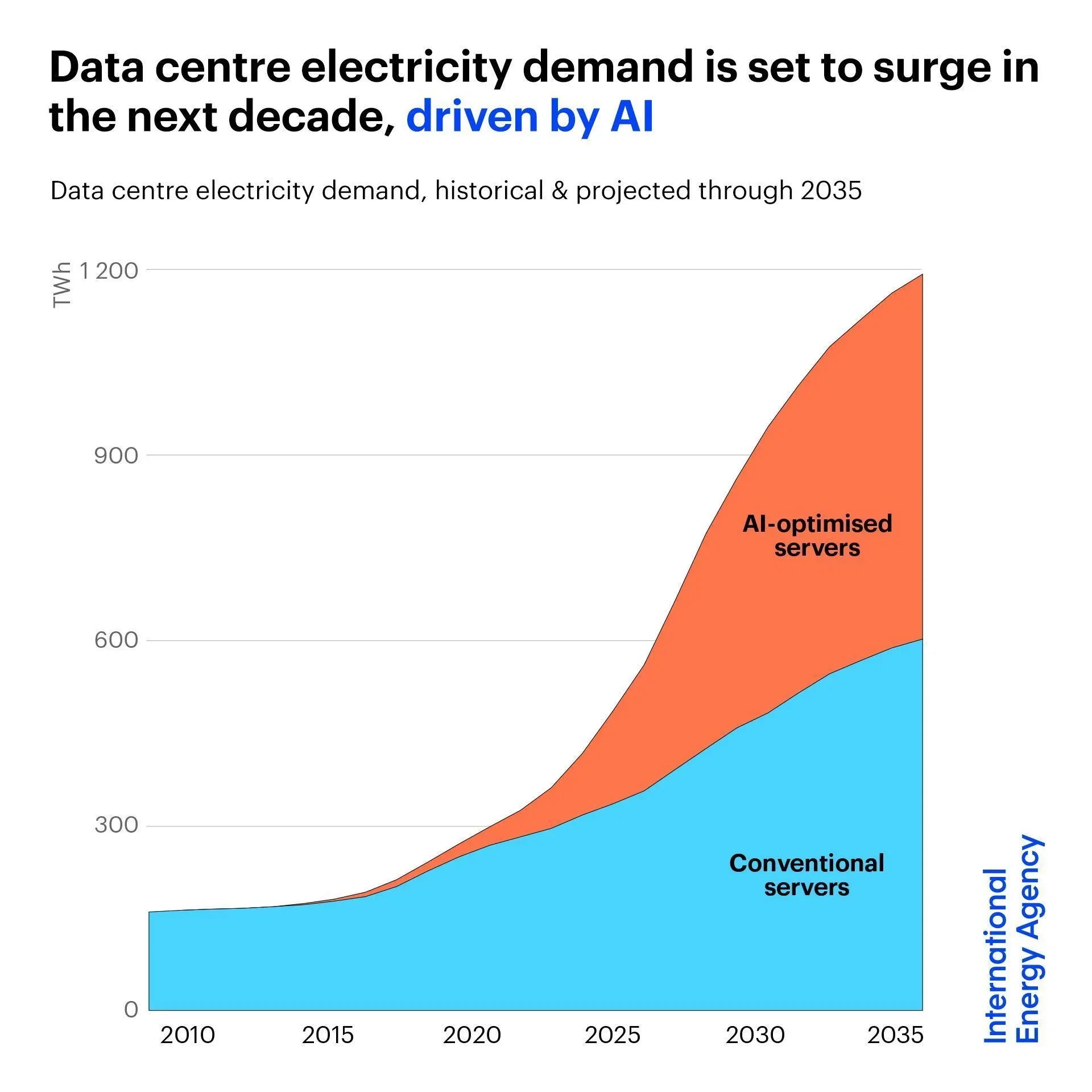

AI Drives Data Center Electricity Use Skyward

This forecast shows data center electricity demand could reach 1,200 TWh by 2035. The growth is driven almost entirely by power-intensive AI servers, illustrating the shift from an internal data center problem to a grid-level crisis.

(Source: Voronoi)
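Forecasts in this article mix energy (TWh per year) and power (GW). A rough conversion, assuming a perfectly flat load over an 8,760-hour year, divides annual energy by the hours in the year. The sketch below applies it to the 1,200 TWh figure and to the IEA projection cited later; it yields average draw, not the peak capacity utilities must actually provision, which is higher whenever facilities run below full load.

```python
HOURS_PER_YEAR = 8760  # 365 days x 24 hours (leap years ignored)


def twh_per_year_to_avg_gw(twh_per_year: float) -> float:
    """Average continuous power (GW) equivalent to an annual energy total (TWh).

    1 TWh = 1,000 GWh, so GW = TWh * 1,000 / hours per year.
    ASSUMPTION: the load is perfectly flat across the year.
    """
    return twh_per_year * 1000 / HOURS_PER_YEAR


def avg_gw_to_twh_per_year(gw: float) -> float:
    """Inverse conversion: annual energy (TWh) of a constant load of `gw` GW."""
    return gw * HOURS_PER_YEAR / 1000


if __name__ == "__main__":
    forecasts = [("2035 forecast (this figure)", 1200), ("IEA 2030 projection", 950)]
    for label, twh in forecasts:
        print(f"{label}: {twh:,} TWh/yr ~ {twh_per_year_to_avg_gw(twh):.0f} GW average")
```

Under the flat-load assumption, 1,200 TWh per year corresponds to roughly 137 GW of continuous demand, and the IEA's ~950 TWh to roughly 108 GW.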

Investment Analysis: Trillion-Dollar Capital Inflow Targets AI Power Infrastructure Bottlenecks

The immense power requirements for AI have triggered a wave of multi-billion-dollar investments aimed directly at securing energy supply, moving capital from pure technology development into dedicated power generation and grid infrastructure. This financial pivot acknowledges that the primary bottleneck for AI expansion is no longer semiconductor manufacturing but the physical availability of electricity.

- Before 2025, investment focused primarily on chip design and AI model development. Capital is now being redirected toward the energy problem; Goldman Sachs estimated in July 2024 that about $1 trillion will be spent on data centers, chips, and crucial grid upgrades.

- Major technology companies are now behaving like utility-scale energy developers. Microsoft’s partnership with BlackRock and MGX targets a potential $100 billion investment in data centers and their supporting power infrastructure, a clear signal that securing power is a core business strategy.

- Direct investment in generation assets is becoming standard practice. Meta’s $10 billion AI-optimized data center in Louisiana shows the capital a single campus now demands, while Google’s deal to use small modular reactors (SMRs) shows tech firms vertically integrating into power production to de-risk their growth.

- The scale of spending is immense, with McKinsey projecting a global need for $6.7 trillion in data center investment by 2030 to meet demand. The cost is driven by power infrastructure, with data center build costs now estimated at $10 million per megawatt (MW).
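At the quoted $10 million per MW, capital cost scales linearly with capacity. The sketch below assumes that flat rate holds at every scale (real projects have economies of scale and site-specific costs), and the 1 GW campus is a hypothetical example rather than any announced project.

```python
COST_PER_MW_USD = 10_000_000  # ~$10 million per MW, the build-cost estimate quoted above


def build_cost_usd(capacity_mw: float, cost_per_mw: float = COST_PER_MW_USD) -> float:
    """Rough build cost at a flat per-MW rate (ignores economies of scale)."""
    return capacity_mw * cost_per_mw


def capacity_mw_for_budget(budget_usd: float, cost_per_mw: float = COST_PER_MW_USD) -> float:
    """Capacity (MW) that a budget buys at the same flat per-MW rate."""
    return budget_usd / cost_per_mw


if __name__ == "__main__":
    campus_mw = 1_000  # HYPOTHETICAL 1 GW campus
    print(f"{campus_mw:,} MW campus: ${build_cost_usd(campus_mw) / 1e9:.0f} billion")
    print(f"$10 billion buys ~{capacity_mw_for_budget(10e9):,.0f} MW at this rate")
```

At this rate a 1 GW campus costs about $10 billion. That is an illustrative equivalence only and says nothing about the actual capacity of any project in the tables.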

Table: Key Investments in AI Data Center and Power Infrastructure

| Company / Project | Time Frame | Details and Strategic Purpose | Source |
|---|---|---|---|
| McKinsey Projection | By 2030 | Projects a global requirement of $6.7 trillion for data center construction to meet demand, driven significantly by power and cooling needs for AI. | The cost of compute: A $7 trillion race to scale data centers |
| Meta | Dec 2024 | Announced a $10 billion investment to build a new AI-optimized data center campus in Louisiana, demonstrating the massive capital expenditure required for a single project. | Meta Selects Northeast Louisiana as Site of $10 Billion … |
| Goldman Sachs Estimate | Jul 2024 | Estimates approximately $1 trillion will be spent in the coming years on data centers, semiconductors, and grid upgrades specifically to support AI expansion. | Goldman Sachs: $1 tn to be spent on AI data centers, chips … |

Partnership Analysis: Tech and Energy Sectors Converge to Secure AI’s Power Supply

Strategic partnerships between technology giants, energy developers, and capital providers have become the dominant mechanism for mitigating the risk of power shortfalls for AI data centers. These collaborations have shifted from simple power purchase agreements to deep, integrated ventures focused on building entirely new energy infrastructure co-located with data centers.

- The period from 2021 to 2024 was characterized by standard corporate procurement of renewable energy. Since 2025, the model has evolved into strategic alliances to build generation capacity at scale.

- The BlackRock, Microsoft, and MGX partnership launched in September 2024 is a defining example of this new model. Its goal to invest up to $100 billion in data centers and supporting power infrastructure signals that private capital, not just utility planning, will drive the build-out of energy for AI.

- Google’s partnership with Intersect Power and TPG Rise Climate, announced in December 2024, aims to co-locate data centers with renewable generation. The goal to catalyze $20 billion in investment underscores the strategy of building dedicated power supplies to bypass grid constraints.

- Technology providers are also forming alliances to address power delivery and reliability within the data center. The Ballard Power Systems and Vertiv partnership to develop hydrogen fuel cell backup power solutions indicates a move away from traditional diesel generators toward cleaner, more reliable on-site power.

Table: Strategic Partnerships for AI Power Infrastructure

| Partners / Project | Time Frame | Details and Strategic Purpose | Source |
|---|---|---|---|
| Google, Intersect Power, TPG Rise Climate | Dec 2024 | Formed a partnership to co-locate new data center capacity with renewable energy generation, targeting $20 billion in investment to ensure power supply. | Google Enters Strategic Partnership to Meet Power … |
| Google & SMR Provider | Oct 2024 | Signed a deal to use small modular reactors (SMRs) for its AI data centers, representing a strategic move to secure firm, carbon-free baseload power. | Google turns to nuclear to power AI data centres |
| BlackRock, Microsoft, MGX | Sep 2024 | Launched a partnership to invest in data centers and the required power infrastructure, with a potential scale of $100 billion. | BlackRock, Global Infrastructure Partners, Microsoft, and MGX … |
| Ballard Power Systems & Vertiv | Jun 2024 | Formed a strategic technology partnership to develop hydrogen fuel cell backup power, addressing the need for reliable on-site power generation. | Ballard and Vertiv announce strategic technology partnership … |

Geography: U.S. Power Grids Face Unprecedented Strain from AI Data Center Clusters

The geographic concentration of AI data center development, particularly in the United States, is creating localized grid crises that national-level forecasts understate. While global demand is rising, specific U.S. regions are experiencing demand shocks equivalent to adding a new city’s worth of load, forcing utilities to reconsider long-term capacity planning.

US Data Center Power Demand to Soar

Projected to grow at 13% annually, US data center power consumption will reach approximately 35 gigawatts by 2030. This surge is primarily driven by hyperscale data center clusters, directly supporting the section’s focus on unprecedented strain on U.S. grids.

(Source: Applied Economics Clinic)
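The caption does not state a base year. Assuming 2024 (six years of compounding to 2030), 13% annual growth multiplies demand by about 2.08, which implies a starting level near 16.8 GW. The sketch below backs out that base and projects the path; the 2024 base year is an assumption, so change the `years` argument if the source uses a different one.

```python
def implied_base(target: float, annual_growth: float, years: int) -> float:
    """Back out the starting value that compounds to `target` after `years` years."""
    return target / (1 + annual_growth) ** years


def project(base: float, annual_growth: float, years: int) -> list[float]:
    """Year-by-year values under constant compound growth (year 0 included)."""
    return [base * (1 + annual_growth) ** t for t in range(years + 1)]


if __name__ == "__main__":
    # ASSUMPTION: 2024 base year, i.e. six years of 13% growth to reach 35 GW in 2030.
    base = implied_base(35, 0.13, 6)
    print(f"Implied 2024 level: {base:.1f} GW")
    for year, gw in zip(range(2024, 2031), project(base, 0.13, 6)):
        print(year, f"{gw:.1f} GW")
```

Constant compound growth is a simplification; real load additions arrive in lumps as individual campuses are energized.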

- Between 2021 and 2024, data center growth was a known issue for utilities in hubs like Northern Virginia, but it was largely manageable through incremental upgrades. Pricing for capacity in this market increased by 42% year-over-year, signaling rising supply-demand tension.

- Since 2025, the scale of demand has become a systemic threat. Deloitte projects that power demand from U.S. AI data centers could reach 123 gigawatts (GW) by 2035, a thirtyfold increase. This growth is not evenly distributed, creating extreme pressure on regional grids.

- The U.S. is the epicenter of this demand surge, with data centers projected to consume between 8.6% and 12% of total national electricity by the end of the decade, up from just 4.4% in 2023. This rapid increase has direct consequences for all consumers, as wholesale electricity costs have soared in data-center-heavy regions.

- Globally, the International Energy Agency (IEA) projects data center demand will more than double to nearly 950 TWh by 2030. However, the acute infrastructure challenges are most visible in the U.S., where the lack of transmission and generation capacity is becoming the primary barrier to AI expansion.
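The headline multiples in the list above imply some useful intermediate numbers. This sketch assumes 2024 as the base year for Deloitte’s thirtyfold figure (the text does not specify one) and treats "the end of the decade" as 2030.

```python
def implied_cagr(multiple: float, years: int) -> float:
    """Constant annual growth rate that produces `multiple` after `years` years."""
    return multiple ** (1 / years) - 1


def growth_multiple(new: float, old: float) -> float:
    """How many times larger `new` is than `old`."""
    return new / old


if __name__ == "__main__":
    # Deloitte: 123 GW by 2035, described as a thirtyfold increase.
    print(f"Implied starting level: {123 / 30:.1f} GW")
    # ASSUMPTION: 2024 base year, so 11 years of growth to 2035.
    print(f"Implied annual growth: {implied_cagr(30, 11):.1%}")
    # Share of U.S. electricity: 4.4% in 2023 -> 8.6% to 12% by ~2030.
    for share in (8.6, 12.0):
        print(f"{share}% is {growth_multiple(share, 4.4):.2f}x the 2023 share")
    # IEA: demand "more than doubles" to ~950 TWh, so today's level is below half.
    print(f"Current global demand below ~{950 / 2:.0f} TWh")
```

Under these assumptions, Deloitte’s projection implies a starting level of about 4.1 GW and roughly 36% annual growth, the U.S. share rises by about 1.95x to 2.73x, and the IEA’s "more than double" places current global demand below about 475 TWh. A rising share understates absolute growth whenever total electricity demand is also growing.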

Technology Maturity: AI Power Solutions Shift from R&D to Commercial Deployment

The technology for powering AI data centers is rapidly maturing from a reliance on conventional grid connections to the commercial deployment of dedicated, alternative power sources. The extreme power density of AI hardware has rendered traditional cooling and power delivery systems obsolete, forcing the adoption of next-generation infrastructure at a commercial scale.

Servers and Cooling Dominate Data Center Power

This breakdown shows servers (43%) and cooling systems (43%) are the two largest electricity consumers in a data center. This directly illustrates why the obsolescence of traditional cooling and power delivery is a critical technology challenge for the AI era.

(Source: AI with Armand)

- In the 2021-2024 period, the industry focused on improving existing technologies, such as enhancing air cooling and optimizing server efficiency. The primary challenge was removing heat from the rack; cooling alone can account for up to 40% of a data center’s electricity use.

- Since 2025, the technology has shifted to address gigawatt-scale power needs. The move toward liquid cooling is now a commercial necessity, not an experimental technique, to handle rack densities projected to hit 30 kW.

- The most significant technological shift is in power generation. Google’s move to procure power from SMRs, together with partnerships to build dedicated renewable facilities, signals that reliance on the public grid is no longer a viable long-term strategy for hyperscalers. These are commercial agreements, not pilot projects.

- Backup power technology is also maturing. The partnership between Ballard and Vertiv to commercialize hydrogen fuel cells for data centers demonstrates a market-driven effort to find reliable, clean, on-site power solutions that can support critical AI workloads without depending on a strained grid.
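Power usage effectiveness (PUE), mentioned at the start of this article, is defined as total facility energy divided by the energy delivered to IT equipment, so a value of 1.0 would mean zero overhead. The sketch below estimates PUE from the up-to-40% cooling share cited above, under the simplifying assumption that everything other than cooling is IT load (it ignores lighting and power-conversion losses). The 100-rack hall at the 30 kW density mentioned above is hypothetical.

```python
def pue(total_facility_kw: float, it_equipment_kw: float) -> float:
    """Power usage effectiveness: total facility power divided by IT power."""
    if it_equipment_kw <= 0:
        raise ValueError("IT load must be positive")
    return total_facility_kw / it_equipment_kw


def pue_from_overhead_share(overhead_share: float) -> float:
    """PUE when `overhead_share` of facility power is non-IT overhead.

    SIMPLIFICATION: all non-overhead power is counted as IT load.
    """
    if not 0 <= overhead_share < 1:
        raise ValueError("overhead share must be in [0, 1)")
    return 1 / (1 - overhead_share)


def overhead_kw(it_kw: float, pue_value: float) -> float:
    """Non-IT power (cooling, etc.) needed on top of the IT load."""
    return it_kw * (pue_value - 1)


if __name__ == "__main__":
    cooling_share = 0.40          # "up to 40%" of facility electricity (from the text)
    racks, kw_per_rack = 100, 30  # HYPOTHETICAL hall at the 30 kW density cited above
    it_kw = racks * kw_per_rack
    p = pue_from_overhead_share(cooling_share)
    print(f"Estimated PUE: {p:.2f}")
    print(f"IT load {it_kw:,} kW -> overhead ~{overhead_kw(it_kw, p):,.0f} kW")
    # Sanity check: total / IT reproduces the same PUE.
    print(f"Check: {pue(it_kw + overhead_kw(it_kw, p), it_kw):.2f}")
```

Under those assumptions PUE is about 1.67, and a 3 MW IT hall would need roughly 2 MW of additional facility power for overhead, which is why cooling efficiency and grid capacity are two sides of the same problem.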

SWOT Analysis: Grid Constraints Define AI’s Growth Trajectory

The strategic outlook for AI is now inextricably linked to the capabilities of global energy infrastructure, with grid availability and power generation acting as both a primary enabler and a critical threat. The industry’s ability to navigate these energy constraints will determine the pace and scale of AI adoption.

Data Centers’ Share of New Power Demand

This chart provides strategic context by showing data centers as a relatively small portion of total global electricity demand growth by 2030. This perspective is crucial for a balanced SWOT analysis of the industry’s grid-level challenges and opportunities.

(Source: Hannah Ritchie | Substack)

- Strengths: Unprecedented economic momentum and capital investment are being directed toward solving the power challenge.

- Weaknesses: The fundamental inefficiency of current AI hardware creates an unsustainable demand growth curve.

- Opportunities: AI’s power needs are forcing a paradigm shift in energy, accelerating the deployment of advanced solutions like SMRs and large-scale energy storage.

- Threats: The failure to build out power infrastructure fast enough will create a hard ceiling on AI growth and lead to energy scarcity for other sectors.

Table: SWOT Analysis for AI Data Center Power Demand

| SWOT Category | 2021 – 2024 | 2025 – Today | What Changed / Resolved / Validated |
|---|---|---|---|
| Strengths | High computational performance of GPUs for AI workloads was the key strength. Investment was focused on chip and model R&D. | Massive capital commitments (over $1 trillion, per Goldman Sachs’ estimate), including the BlackRock, Microsoft, and MGX partnership, are now aimed at building supporting power infrastructure. | The industry validated that financial capital is available to solve the problem, shifting the bottleneck from funding to physical execution and regulatory approval for new power projects. |
| Weaknesses | High power consumption (up to 40% for cooling) and rising power densities (from 8 kW to 17 kW per rack) were identified as operational inefficiencies. | The weakness has scaled to a systemic level. The need for constant, gigawatt-scale baseload power exposes the limitations of grids built for traditional industrial and residential loads. | It was validated that on-site efficiency gains are insufficient. The core weakness is now the structural mismatch between AI’s power profile and the existing grid’s capabilities. |
| Opportunities | The need for cleaner energy led to corporate power purchase agreements (PPAs) for renewable energy. | AI’s demand is now large enough to underwrite new energy technologies, including SMRs (Google’s deal) and large-scale hydrogen fuel cells (Ballard/Vertiv). | The opportunity shifted from simply buying green energy to actively funding and accelerating the commercialization of next-generation firm power technologies. |
| Threats | The threat was rising operating expenses (OpEx) due to high electricity prices and the potential for localized power shortages in data center alleys. | The threat is now systemic grid instability and the potential for AI growth to be physically capped by a lack of generation capacity, as forecast by Gartner. Wholesale electricity prices have spiked by up to 267% in some areas. | The threat was validated as real and immediate. It is no longer a future risk but a current constraint on project timelines, costs, and regional economic stability. |

Scenario Modeling: AI Growth Hinges on Accelerated Energy Infrastructure Deployment

The single most critical factor determining AI’s growth trajectory through 2030 is the speed at which new, reliable power generation and transmission can be brought online. If infrastructure deployment lags behind the construction of new data centers, the industry will face significant fragmentation and delays, with access to power becoming the key competitive differentiator.

Scenario Modeling Future Data Center Demand

Illustrating a range of possible futures, this IEA chart models both slow and fast growth scenarios for data center electricity demand. This directly matches the section’s focus on scenario modeling, where growth hinges on infrastructure deployment speed.

(Source: Hannah Ritchie | Substack)

- If this happens: Permitting and construction timelines for new power plants and transmission lines continue to average 5-10 years.

- Watch this: An increase in public announcements from companies like Amazon, Microsoft, and Meta delaying or canceling new data center projects specifically because of a lack of available power from local utilities.

- This could be happening: AI compute capacity becomes a geographically constrained resource, leading to a “power arbitrage” where workloads are shifted to regions with available energy surpluses. We would see an acceleration of direct investments by tech firms into power generation assets, effectively creating private, vertically integrated utilities to bypass public grid limitations. The recent multi-billion-dollar energy infrastructure partnerships are the first signal of this trend gaining traction.

Frequently Asked Questions

Why has AI’s electricity demand become a grid-level crisis?

The crisis stems from a shift in scale. Before 2025, the focus was on managing power consumption within individual data centers. Today, the sheer number of new, power-hungry AI campuses creates a massive, concentrated demand for gigawatts of electricity that the existing power grid was not built to handle. This has transformed the issue from an internal operational challenge to a systemic threat to grid stability.

How are major tech companies responding to this power shortage?

Tech giants are now behaving like energy developers. Instead of just buying power, they are making multi-billion-dollar investments to build their own dedicated power infrastructure. Examples include Microsoft’s $100 billion partnership with BlackRock to build data centers and supporting power, and Google’s deal to use small modular reactors (SMRs) to secure a reliable, long-term energy supply.

What is the biggest bottleneck currently limiting the growth of AI?

The primary bottleneck for AI expansion has shifted from semiconductor manufacturing to the physical availability of electricity. The article states that the immense capital flowing into the sector is now targeting power generation and grid infrastructure, acknowledging that a lack of power is the main constraint holding back further AI development and deployment.

Are renewable energy sources like wind and solar enough to power these new AI data centers?

According to the analysis, intermittent renewables alone are not sufficient. AI operations require constant, 24/7/365 power, creating a baseload demand that sources like wind and solar cannot reliably meet on their own. This is why companies are pursuing ‘firm’ power sources like natural gas and nuclear, and developing on-site backup solutions like hydrogen fuel cells, to ensure uninterrupted operation.

What is the estimated financial cost of building the infrastructure to power AI?

The costs are in the trillions. The article cites a McKinsey projection for a global need of $6.7 trillion in data center investment by 2030, much of it for power infrastructure. Goldman Sachs also estimates that around $1 trillion will be spent on data centers, chips, and crucial grid upgrades to support the expansion of AI.


Erhan Eren
