AI Infrastructure 2026: How Meta and NVIDIA’s Multi-Billion Dollar Deal Exposes Critical Growth Constraints

2026 AI Infrastructure Race: How Meta’s NVIDIA Chip Strategy Shifted from Procurement to Full-Stack Integration

The strategic relationship between Meta and NVIDIA has evolved from large-scale GPU procurement between 2021 and 2024 to a deep, multi-generational, full-stack co-design partnership in 2025 and 2026, signaling that securing integrated compute systems, not just individual chips, is now the critical factor for AI leadership.

- During the 2021-2024 period, Meta’s primary objective was the mass acquisition of powerful individual GPUs to fuel its AI ambitions. The company publicly targeted an infrastructure equivalent to 600,000 NVIDIA H100 GPUs by the end of 2024 and built foundational systems like the AI Research SuperCluster (RSC) with 16,000 NVIDIA A100 GPUs.

- A strategic shift occurred in early 2026 with the announcement of a multi-billion dollar, multi-year deal that expanded beyond simple GPU acquisition. Meta committed to deploying not only NVIDIA’s future Blackwell and Rubin GPUs but also its Arm-based Grace and Vera CPUs and Spectrum-X Ethernet networking fabric.

- This transition from buying discrete components to co-designing and deploying a fully integrated hardware and software stack is a strategic move to maximize performance at an unprecedented scale. It aims to eliminate the bottlenecks that can arise from combining components from multiple vendors, a crucial consideration for training models like Llama 4 on over 100,000 GPUs.

- The partnership’s scope now includes secure, user-facing applications, demonstrated by Meta’s adoption of NVIDIA Confidential Computing for its WhatsApp platform. This shows the relationship has matured from supporting internal research to enabling commercial product features for its 3.58 billion users.

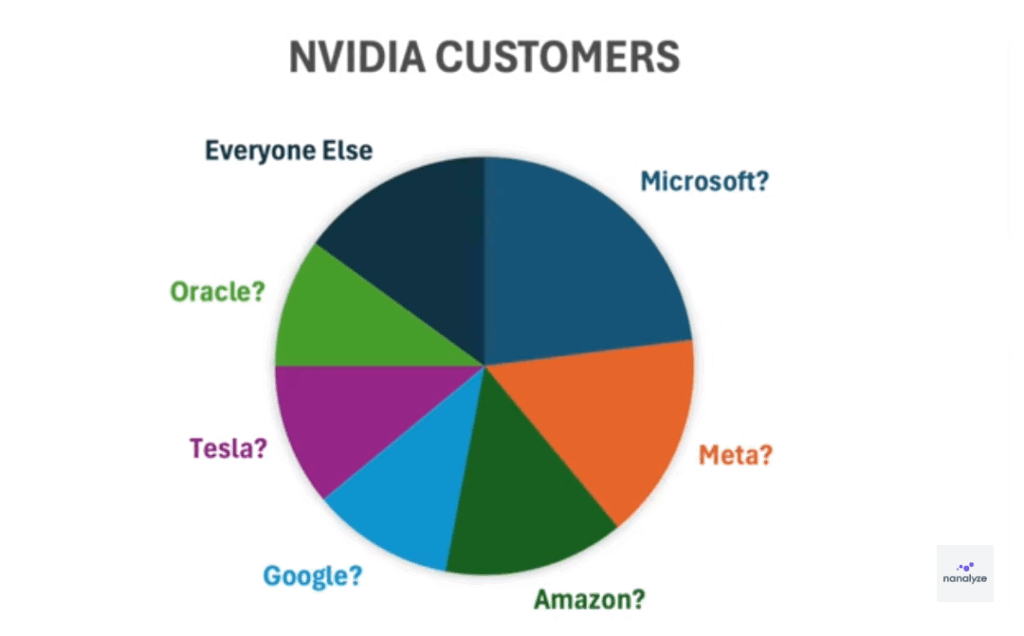

Meta Represents a Major NVIDIA Customer Segment

This chart illustrates Meta’s position as a major NVIDIA customer, which is the foundational procurement relationship that evolved into the full-stack partnership discussed in the section.

(Source: Nanalyze)

Meta’s Multi-Billion Dollar AI Bet: Analyzing the Capital Expenditure Fueling NVIDIA’s Dominance

Meta’s capital expenditure on AI infrastructure has escalated dramatically, with a forecasted spend of $115 billion to $135 billion for 2026 alone, which solidifies its dependency on NVIDIA and illustrates the immense financial barrier to entry for competing at the frontier of AI development.

- Meta’s planned capital expenditures show a steep, accelerating curve, rising from a $35 billion to $40 billion forecast in 2024 to a range of $60 billion to $65 billion in 2025. The subsequent jump to a $115 billion to $135 billion range for 2026 signals an all-in commitment to building out AI compute capacity.

- The multi-year agreement with NVIDIA announced in 2026 is a core component of this spending, with analysts estimating its value could reach up to $50 billion. This single deal positions Meta as one of NVIDIA’s most significant customers, accounting for an estimated 9% of its revenue.

- This level of investment is not occurring in a vacuum, as key competitors are also making massive financial commitments. OpenAI, for instance, announced a strategic partnership with NVIDIA to deploy at least 10 gigawatts (GW) of systems, potentially supported by an NVIDIA investment of up to $100 billion, underscoring the industry-wide scale of the AI infrastructure arms race.
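To put a number on the "accelerating curve" described above, the short sketch below computes year-over-year growth from the midpoints of the capex ranges quoted in this section. Using the midpoint of each range is an assumption made purely for illustration; the function and variable names are hypothetical and the figures are the forecast ranges cited above, not reported actuals.

```python
# Capex guidance ranges quoted in this section, in billions of USD (low, high).
capex_ranges = {
    2024: (35, 40),
    2025: (60, 65),
    2026: (115, 135),
}


def midpoint(low, high):
    """Midpoint of a forecast range (an illustrative simplification)."""
    return (low + high) / 2


def yoy_growth(ranges):
    """Year-over-year growth of range midpoints, keyed by the later year."""
    years = sorted(ranges)
    growth = {}
    for prev, curr in zip(years, years[1:]):
        growth[curr] = midpoint(*ranges[curr]) / midpoint(*ranges[prev]) - 1
    return growth


growth = yoy_growth(capex_ranges)
for year, rate in growth.items():
    print(f"{year}: {rate:.0%} growth in midpoint capex")
# Prints:
# 2025: 67% growth in midpoint capex
# 2026: 100% growth in midpoint capex
```

On these midpoints, forecast spending grows by roughly two-thirds from 2024 to 2025 and then doubles from 2025 to 2026, which is the sense in which the curve is accelerating rather than merely rising.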

Table: Major AI Infrastructure Investments

| Partner / Project | Time Frame | Details and Strategic Purpose | Source |
|---|---|---|---|
| Meta Platforms | 2026 | Planned capital expenditure of $115B to $135B, with the majority for AI infrastructure including millions of NVIDIA GPUs and CPUs. The goal is to power its long-term “superintelligence” roadmap. | 24/7 Wall St. |
| Meta Platforms / NVIDIA | 2026 | A multiyear, multigenerational partnership valued at up to $50B for NVIDIA’s full stack of GPUs (Blackwell/Rubin), CPUs (Grace/Vera), and networking. The deal solidifies a deep co-design relationship. | Reuters |
| Meta Platforms | 2025 | Planned capital expenditure of $60B to $65B. Key goals include bringing approximately 1 GW of compute capacity online and ending the year with over 1.3 million GPUs. | Reddit / Hardware |
| OpenAI / NVIDIA | Announced Sep 2025 | Strategic partnership to deploy at least 10 GW of NVIDIA systems. The deal is backed by a potential NVIDIA investment of up to $100B in OpenAI to support the build-out. | NVIDIA News |
| Meta Platforms | 2024 | Forecasted capital expenditure of $35B to $40B, primarily for AI infrastructure. The goal was to acquire hardware equivalent to 600,000 H100 GPUs, including a direct purchase of 350,000 units. | The Street |

The NVIDIA-Meta Alliance: A Strategic Partnership Reshaping the AI Chip Market

The partnership between Meta and NVIDIA has deepened from a customer-supplier relationship into a symbiotic co-design alliance, creating a powerful ecosystem that locks in Meta’s infrastructure roadmap and simultaneously solidifies NVIDIA’s market dominance against rivals AMD and Intel.

NVIDIA’s Profit Margin Overtakes Meta’s

This chart shows NVIDIA’s operating margin surging past Meta’s, a direct financial result of its market dominance that has been solidified by strategic alliances like the one with Meta.

(Source: The Motley Fool)

- The 2026 deal is explicitly defined as a “multiyear, multigenerational strategic partnership” that extends beyond procurement to active co-design. This ensures that future NVIDIA architectures will be tightly optimized for Meta’s specific AI workloads, creating a significant performance advantage.

- A critical element of the deepened alliance is Meta’s commitment to a “significant deployment” of NVIDIA’s Grace and future Vera CPUs. This marks the first large-scale adoption of NVIDIA’s CPU-only systems by a hyperscaler, opening a new competitive front against Intel and AMD’s long-standing dominance in the data center CPU market.

- The market’s reaction underscored the strategic implications of this integrated approach. Following the announcement, shares of NVIDIA’s key rival, AMD, declined, reflecting investor concern that the deep partnership reduces the likelihood of Meta diversifying its accelerator and CPU supply chain in the near future.

Table: Key Partnership Milestones

| Partner / Project | Time Frame | Details and Strategic Purpose | Source |
|---|---|---|---|
| Meta & NVIDIA | Feb 2026 | Announced a multi-billion dollar partnership for NVIDIA’s full stack of GPUs, CPUs, and networking. This created a deep co-design relationship to build Meta’s next-generation AI infrastructure. | NVIDIA |
| Meta & NVIDIA | Mar 2024 | Meta revealed its two 24,576-GPU clusters built with NVIDIA H100s and Quantum-2 InfiniBand fabric. This partnership provided the massive scale needed for training foundation models like Llama 3. | SDxCentral |
| Meta & NVIDIA | Oct 2022 | Meta unveiled its Grand Teton AI platform, which was co-designed with NVIDIA to incorporate its new Hopper architecture GPUs, marking a step towards deeper hardware integration. | NVIDIA Blogs |
| Meta & NVIDIA | Jan 2022 | Meta introduced its AI Research SuperCluster (RSC), which was built with 16,000 NVIDIA A100 GPUs. This initial project established the foundation of their large-scale AI collaboration. | AI at Meta |

Global AI Build-Out: How Taiwan’s Manufacturing Dominance Underpins the Meta-NVIDIA Partnership

While Meta’s AI data center deployments are global, the physical production of the server hardware underpinning this expansion remains highly concentrated in Taiwan, whose Original Equipment Manufacturers (OEMs) represent a critical enabler and potential supply chain bottleneck for the entire industry.

Taiwan’s AI Server Revenue to Surge in 2026

This chart’s projection of soaring revenue for Taiwan’s server OEMs directly supports the section’s focus on the country’s critical manufacturing role in the global AI hardware build-out.

(Source: Common Sense Daily – Substack)

- Between 2021 and 2024, the focus was on constructing massive data centers, primarily in the United States, to house the growing arsenal of GPUs. The geographic challenge was centered on site selection, power, and cooling for these large-scale facilities.

- Data from 2025 and 2026 has brought the manufacturing supply chain into sharp focus, revealing a heavy reliance on a specific region. Projections show that Taiwan’s ‘Big 6’ AI server OEMs are expected to achieve explosive revenue growth, reaching approximately 1.6 trillion TWD per month by the end of 2026.

- This geographic concentration of manufacturing capacity creates a significant system-level risk. A disruption in this region could severely impact the global build-out of AI infrastructure, affecting not only Meta and NVIDIA but all major technology companies reliant on this hardware ecosystem.

- For Meta, the projected growth of Taiwanese OEMs is a positive signal confirming that the manufacturing capacity required for its aggressive expansion plans exists. For NVIDIA, it validates the persistent, high-volume demand for its products and reinforces the strategic importance of its relationships within this vital manufacturing hub.

From H100 to Full-Stack Systems: The Maturation of NVIDIA’s AI Platform in the Meta Partnership

The technology at the heart of the Meta and NVIDIA relationship has matured from a focus on acquiring best-in-class discrete GPUs (2021-2024) to the commercial deployment of a fully integrated, full-stack compute platform (2025-2026), validating NVIDIA’s system-level strategy as essential for hyperscale performance.

Meta Led Procurement of NVIDIA H100 GPUs in 2023

This chart quantifies Meta’s massive acquisition of NVIDIA H100 GPUs, perfectly illustrating the initial procurement-focused phase of the technology partnership described in the text.

(Source: Analytics India Magazine)

- In the 2021-2024 timeframe, the central technology was the GPU itself, specifically the NVIDIA A100 and its successor, the H100. Meta’s technological objective was to accumulate these individual accelerators at a massive scale to power its research and initial model training.

- By 2026, the partnership’s technological scope expanded to a complete, system-level solution. The agreement to deploy Blackwell/Rubin GPUs alongside Grace/Vera CPUs and Spectrum-X networking represents the commercial maturation of NVIDIA’s full-stack vision.

- Meta’s decision to execute the first large-scale deployment of NVIDIA’s Grace-only CPU systems serves as a major validation point. It signals that NVIDIA’s CPU technology has moved from a new market entrant to a viable, high-performance option for one of the world’s most demanding data center environments.

- Despite this deep integration, Meta continues to advance its own custom silicon, the Meta Training and Inference Accelerator (MTIA). This parallel strategy to develop an in-house alternative, even if it is currently focused on different workloads, shows that while NVIDIA’s platform is dominant, the long-term goal for hyperscalers is to mitigate vendor dependency.

SWOT Analysis: Meta and NVIDIA’s Symbiotic AI Infrastructure Strategy

The analysis of the Meta and NVIDIA partnership reveals a powerful alliance built on market-leading technology and massive financial scale, yet it also exposes a critical mutual dependency and significant threats from both established competitors and the long-term drive for silicon independence.

- The partnership’s primary strength has evolved from procurement power to a deeply integrated, co-designed full-stack platform that is difficult for competitors to replicate.

- Its core weakness remains a profound and increasingly expensive dependency on a single supplier for frontier AI capabilities, a risk Meta is attempting to manage with its in-house chip development.

- The greatest opportunity lies in leveraging this integrated stack to achieve breakthroughs in AI and expand into new markets, such as NVIDIA’s entry into the data center CPU space.

- The most significant threat is the combination of intense competition from rivals like AMD and the strategic imperative for hyperscalers to diversify their supply chains, which could erode NVIDIA’s dominance over time.

Table: SWOT Analysis for Meta and NVIDIA

| SWOT Category | 2021 – 2024 | 2025 – 2026 | What Changed / Resolved / Validated |
|---|---|---|---|
| Strengths | Massive procurement of leading GPUs (A100, H100) to build large-scale clusters like the AI Research SuperCluster. | Deep, multi-year, full-stack partnership securing supply of next-gen GPUs (Blackwell/Rubin), CPUs (Grace/Vera), and networking. | The strategy shifted from simple procurement scale to a deeply integrated, co-designed platform, creating a stronger competitive moat. |
| Weaknesses | High dependency on a single supplier (NVIDIA) for critical AI hardware, creating supply chain and pricing risk. | Astronomical and accelerating capital expenditure (up to $135B in 2026) deepens financial dependency on a single vendor for frontier AI. | The dependency has become more entrenched and financially significant, despite parallel efforts to develop in-house chips (MTIA). |
| Opportunities | Leverage massive GPU clusters to train state-of-the-art foundation models like the Llama series and gain a competitive edge in AI research. | Utilize the full-stack platform to pursue “superintelligence,” enable new user-facing features (e.g., in WhatsApp), and allow NVIDIA to enter the data center CPU market. | The scope of opportunity expanded from internal R&D to full-scale business integration and the creation of new market fronts for NVIDIA. |
| Threats | Supply constraints for high-demand chips like the H100 and intense competition from other hyperscalers (Microsoft, Google) for the same limited resources. | Increased competition from AMD (MI300X), massive investments by rivals (OpenAI’s 10 GW plan), and Meta’s own long-term push for silicon independence with its MTIA program. | The competitive threat has broadened from rival GPUs to entire platforms and the strategic risk posed by customers’ in-house silicon ambitions. |

Forward Outlook: Will Supply Chain Constraints Define the 2026 AI Race?

The most critical factor shaping the AI landscape in the year ahead is whether the global hardware supply chain, centered on NVIDIA and its manufacturing partners in Taiwan, can meet the unprecedented, multi-hundred-billion-dollar demand from Meta and its competitors.

If NVIDIA and its partners cannot scale manufacturing to satisfy the voracious appetite of Meta, OpenAI, and other hyperscalers, watch for an increase in project delays, a surge of investment into alternative platforms like AMD’s, and an acceleration of in-house silicon development. The broader implication is that the AI industry’s growth may become constrained not by capital or innovation, but by the physical limits of hardware production.

- Signal 1: Unprecedented Capital Expenditure. Meta’s planned $115 billion to $135 billion capex for 2026 and OpenAI’s planned 10 GW deployment are concrete signals of a demand wave that far exceeds historical norms and will place immense pressure on the supply chain.

- Signal 2: Strategic Supply Consolidation. Meta’s decision to lock in a multi-year, full-stack deal with NVIDIA is a clear strategic move to secure its position at the front of the supply line, indicating an anticipation of severe, industry-wide hardware shortages.

- Signal 3: Market Sensitivity to Supply. The decline in AMD’s share price following the Meta–NVIDIA deal underscores the perception that access to NVIDIA’s supply is the primary gatekeeper for frontier AI development, making any threat of being locked out a major strategic risk.